IT Outsourcing Skills: Production High-Availability k8s in Practice (Part 1), Manual Deployment Notes

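This article manually deploys a highly available K8s cluster: highly available masters, a highly available apiserver, and highly available etcd.

For automated deployment of a highly available k8s cluster with Ansible, see: /developer/article/2313622

Deploying a cluster by hand once gives a better understanding of how the deployment works and of the related configuration.

Software version information:

For reference only; no action is required.

Tip: k8s cluster node information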

[root@k8s-master01 ~]# kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-master01 Ready control-plane 39h v1.25.0 192.168.2.121 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 containerd://1.6.22

k8s-master02 Ready control-plane 39h v1.25.0 192.168.2.167 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 containerd://1.6.22

k8s-master03 Ready control-plane 39h v1.25.0 192.168.2.177 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 containerd://1.6.22

k8s-node01 Ready <none> 39h v1.25.0 192.168.2.190 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 containerd://1.6.22

k8s-node02 Ready <none> 39h v1.25.0 192.168.2.195 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 containerd://1.6.22

[root@k8s-master01 ~]#

Tip: docker version

[root@k8s-master01 ~]# docker version

Client: Docker Engine - Community

Version: 24.0.5

API version: 1.43

Go version: go1.20.6

Git commit: ced0996

Built: Fri Jul 21 20:39:02 2023

OS/Arch: linux/amd64

Context: default

Server: Docker Engine - Community

Engine:

Version: 24.0.5

API version: 1.43 (minimum version 1.12)

Go version: go1.20.6

Git commit: a61e2b4

Built: Fri Jul 21 20:38:05 2023

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: 1.6.22

GitCommit: 8165feabfdfe38c65b599c4993d227328c231fca

runc:

Version: 1.1.8

GitCommit: v1.1.8-0-g82f18fe

docker-init:

Version: 0.19.0

GitCommit: de40ad0

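[root@k8s-master01 ~]#

1. Prerequisites

Two ways to deploy a Kubernetes cluster in production

There are currently two main ways to deploy a Kubernetes cluster in production:

- kubeadm

kubeadm is a k8s deployment tool that provides kubeadm init and kubeadm join for quickly deploying a Kubernetes cluster.

- Binary packages

Download the release binaries from GitHub and deploy each component by hand to assemble a Kubernetes cluster.

This article uses kubeadm to build the cluster.

kubeadm commands:

- kubeadm init: initialize a Master node

- kubeadm join: join a worker node to the cluster

- kubeadm upgrade: upgrade the k8s version

- kubeadm token: manage the tokens used by kubeadm join

- kubeadm reset: revert any changes made to the host by kubeadm init or kubeadm join

- kubeadm version: print the kubeadm version

- kubeadm alpha: preview newly available features

- kubeadm config: configuration operations

Environment preparation

Server requirements:

- Recommended minimum hardware: 2 CPU cores / 4 GB RAM / 30 GB disk

- Official website link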

Software environment:

| Name | Version |
|---|---|
| OS | CentOS Linux release 7.9.2009 (Core) |
| Container runtime | docker-ce (Docker Engine - Community) 24.0.5 |
| kubernetes | 1.25.0 |

Overall server plan:

| Role | IP | Additional components installed |
|---|---|---|
| k8s-master01 | 192.168.2.121 | docker, etcd, nginx, keepalived |
| k8s-master02 | 192.168.2.167 | docker, etcd, nginx, keepalived |
| k8s-master03 | 192.168.2.177 | docker, nginx, keepalived |
| k8s-node01 | 192.168.2.190 | docker, etcd |
| k8s-node02 | 192.168.2.195 | docker |
| Load balancer external IP | 192.168.2.88 (VIP) | |

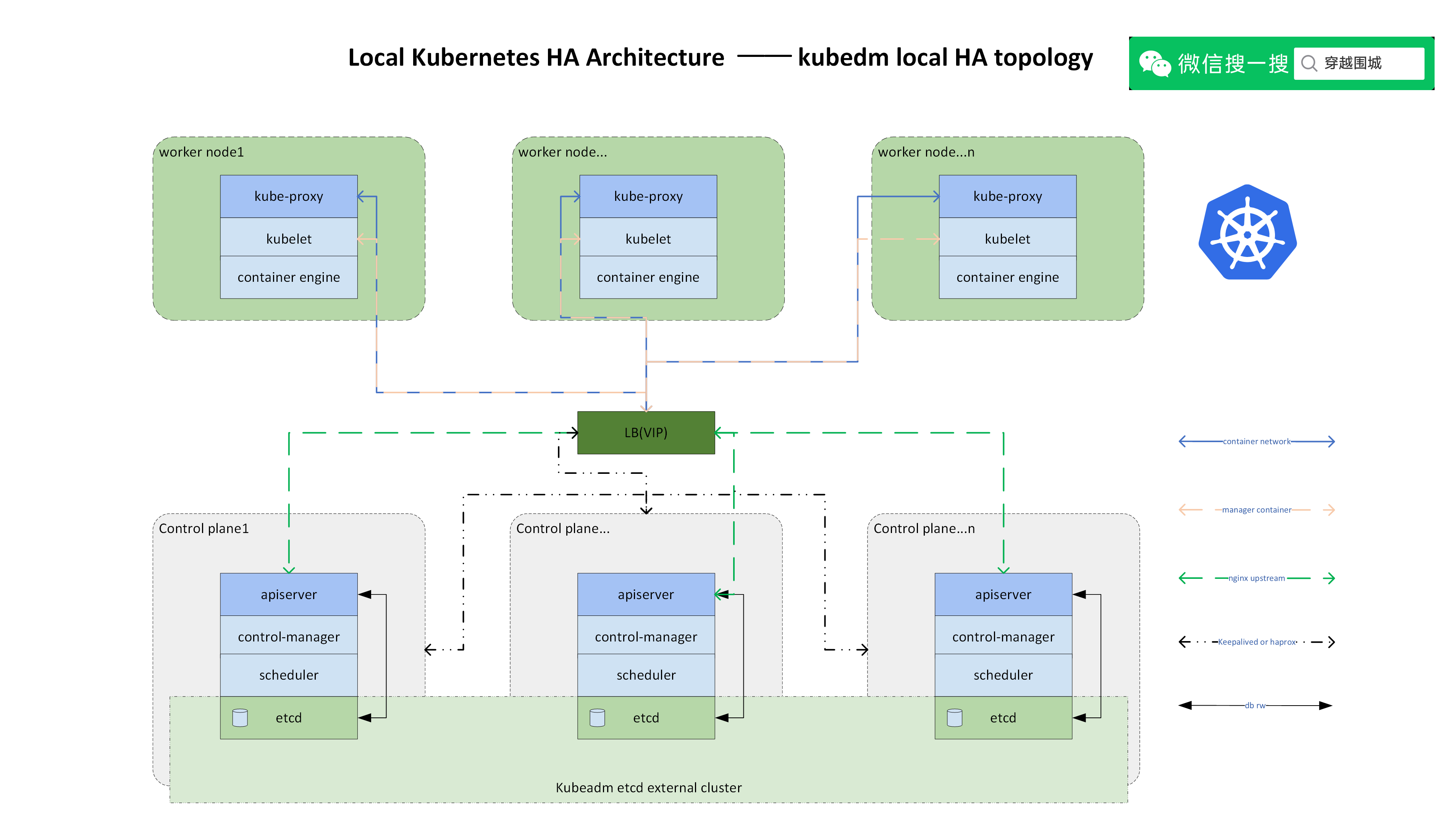

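Architecture diagram:

Operating system initialization (same steps on all machines)

Disable the firewall

systemctl stop firewalld && systemctl disable firewalld

Disable SELinux

sed -i 's/enforcing/disabled/' /etc/selinux/config && setenforce 0

Disable swap

# Comment out the swap entry in /etc/fstab (persists across reboots) and turn swap off for the current boot

sed -ri 's/.*swap.*/#&/' /etc/fstab && swapoff -a

Set the hostname on each machine according to the plan, IP by IP: 192.168.2.121 k8s-master01, 192.168.2.167 k8s-master02, 192.168.2.177 k8s-master03, 192.168.2.190 k8s-node01, 192.168.2.195 k8s-node02

hostnamectl set-hostname <hostname-to-set>

bash # start a new shell so that the hostname takes effect in the current session

Add hosts entries on the masters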

cat >> /etc/hosts << EOF

192.168.2.121 k8s-master01

192.168.2.167 k8s-master02

192.168.2.177 k8s-master03

192.168.2.190 k8s-node01

192.168.2.195 k8s-node02

EOF

Pass bridged IPv4 traffic to the iptables chains

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

# Apply the settings

sysctl --system

sysctl -p
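The kubeadm preflight check later in this walkthrough also requires net.ipv4.ip_forward to be 1, which the file above does not set. The sketch below is one way to spot such gaps before running kubeadm; `check_sysctl_conf` is a hypothetical helper, not part of kubeadm or sysctl. It only inspects the file, so it does not prove the values are live in the kernel; the net.bridge.* keys in particular exist only once the br_netfilter module is loaded (for example with modprobe br_netfilter).

```shell
# Sketch: report which required kernel settings are absent from a sysctl file.
# check_sysctl_conf is a hypothetical helper name used for illustration only.
check_sysctl_conf() {
    local conf="$1" key missing=0
    for key in net.bridge.bridge-nf-call-iptables \
               net.bridge.bridge-nf-call-ip6tables \
               net.ipv4.ip_forward; do
        # Strip whitespace so that "key = 1" and "key=1" compare equal,
        # then look for an exact whole-line match.
        if ! sed 's/[[:space:]]//g' "$conf" | grep -Fxq "${key}=1"; then
            echo "missing: ${key} = 1"
            missing=1
        fi
    done
    return "$missing"
}
```

Usage: `check_sysctl_conf /etc/sysctl.d/k8s.conf` prints one line per missing key and returns non-zero if any key is missing.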

# Synchronize time (with ntp, "<invalid> ago" may show up; chrony can be used instead)

# Time sync with ntp (not recommended)

yum -y install ntpdate

ntpdate ntp.aliyun.com

# Time sync with chrony (recommended)

sudo yum -y install chrony

timedatectl set-timezone Asia/Shanghai

timedatectl

systemctl restart chronyd

chronyc sources -v

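date

2. Deploying the Nginx + keepalived high-availability load balancer

As a container cluster system, Kubernetes gives Pods self-healing through health checks plus restart policies, spreads Pods across nodes through its scheduling algorithm while maintaining the desired replica count, and automatically brings up the affected Pods on other nodes when a node fails. This provides high availability at the application layer.

For the Kubernetes cluster itself, high availability has two further aspects: high availability of the etcd database and high availability of the Kubernetes Master components. A cluster built with kubeadm starts only one etcd instance by default, which is a single point of failure, so a separate etcd cluster is built here.

The Master node acts as the control center: it keeps the whole cluster healthy by continuously communicating with the kubelet and kube-proxy on the worker nodes. If the Master node fails, no cluster management is possible through kubectl or the API.

The Master node runs three main services: kube-apiserver, kube-controller-manager, and kube-scheduler. kube-controller-manager and kube-scheduler already achieve high availability through leader election, so master high availability is mainly about kube-apiserver. That component serves an HTTP API, so making it highly available is similar to doing so for a web server: put a load balancer in front of it, and it can also be scaled horizontally.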

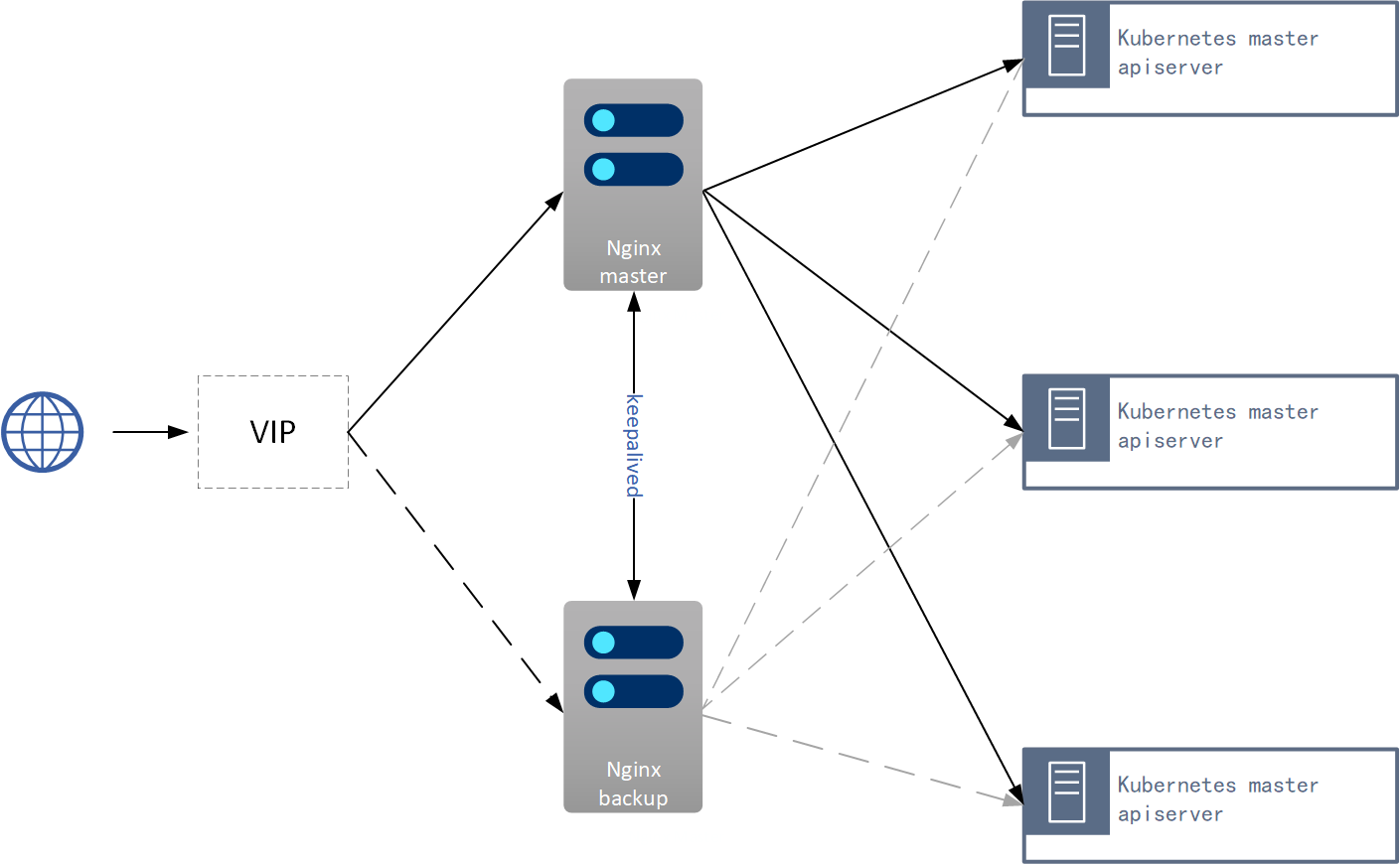

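kube-apiserver high-availability architecture diagram:

- Nginx is a mainstream web server and reverse proxy; here it load-balances the apiserver at layer 4.

- keepalived is a mainstream high-availability tool that provides hot standby across servers by binding a VIP. In the topology above, keepalived decides from the state of Nginx whether to fail over (move the VIP). For example, when the primary Nginx node goes down, the VIP is automatically bound on a backup Nginx node, so the VIP stays reachable and Nginx is highly available.

Note: to save machines, the load balancer shares the k8s master machines here. It can also be deployed outside the k8s cluster, as long as nginx can reach the apiserver.

Install the packages

- Machines: the master HA machines, i.e. k8s-master01, k8s-master02, and k8s-master03 above

yum -y install epel-release

yum -y install nginx keepalived nginx-mod-stream

Nginx configuration file

- Machines: the master HA machines, i.e. k8s-master01, k8s-master02, and k8s-master03 above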

cat > /etc/nginx/nginx.conf << "EOF"

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log;

pid /run/nginx.pid;

# Load dynamic modules. See /usr/share/doc/nginx/README.dynamic.

include /usr/share/nginx/modules/*.conf;

events {

worker_connections 1024;

}

# Layer-4 load balancing for the apiserver on the two Masters listed below (add 192.168.2.177:6443 to also balance to Master3)

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 192.168.2.121:6443; # Master1 APISERVER IP:PORT

server 192.168.2.167:6443; # Master2 APISERVER IP:PORT

}

server {

listen 16443; # nginx shares the master nodes, so this port must not be 6443 or it would conflict with the apiserver

proxy_pass k8s-apiserver;

}

}

http {

include /etc/nginx/mime.types;

default_type application/octet-stream;

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

tcp_nopush on;

keepalive_timeout 65;

types_hash_max_size 2048;

#gzip on;

include /etc/nginx/conf.d/*.conf;

}

EOF

Nginx error

[root@k8s-master01 ~]# systemctl status nginx.service

● nginx.service - The nginx HTTP and reverse proxy server

Loaded: loaded (/usr/lib/systemd/system/nginx.service; disabled; vendor preset: disabled)

Active: failed (Result: exit-code) since Tue 2023-08-01 10:18:55 CST; 10s ago

Process: 9676 ExecStart=/usr/sbin/nginx (code=exited, status=0/SUCCESS)

Process: 10019 ExecStartPre=/usr/sbin/nginx -t (code=exited, status=1/FAILURE)

Process: 10016 ExecStartPre=/usr/bin/rm -f /run/nginx.pid (code=exited, status=0/SUCCESS)

Main PID: 9678 (code=exited, status=0/SUCCESS)

Aug 01 10:18:55 k8s-master01 systemd[1]: Starting The nginx HTTP and reverse proxy server...

Aug 01 10:18:55 k8s-master01 nginx[10019]: nginx: [emerg] unknown directive "stream" in /etc/nginx/nginx.conf:11

Aug 01 10:18:55 k8s-master01 nginx[10019]: nginx: configuration file /etc/nginx/nginx.conf test failed

Aug 01 10:18:55 k8s-master01 systemd[1]: nginx.service: control process exited, code=exited status=1

Aug 01 10:18:55 k8s-master01 systemd[1]: Failed to start The nginx HTTP and reverse proxy server.

Aug 01 10:18:55 k8s-master01 systemd[1]: Unit nginx.service entered failed state.

Aug 01 10:18:55 k8s-master01 systemd[1]: nginx.service failed.

[root@k8s-master01 ~]#

Find the stream module

[root@k8s-master01 ~]# yum search stream |grep nginx

nginx-mod-stream.x86_64 : Nginx stream modules

[root@k8s-master01 ~]#

Install the module

yum -y install nginx-mod-stream

keepalived configuration file (Nginx Master)

- Machine: the master HA machine that acts as primary, i.e. k8s-master01 above

cat > /etc/keepalived/keepalived.conf << EOF

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh" # Failover is decided by this script's exit status

}

vrrp_instance VI_1 {

state MASTER

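interface ens33 # Change to the actual NIC name

virtual_router_id 51 # VRRP router ID; unique per VRRP instance

priority 100 # Priority; the backup servers are set to 90

advert_int 1 # VRRP advertisement (heartbeat) interval, 1 second by default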

authentication {

auth_type PASS

auth_pass 1111

}

# Virtual IP

virtual_ipaddress {

192.168.2.88/24

}

track_script {

check_nginx

}

}

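EOF

- vrrp_script: the script that checks whether nginx is working (failover is decided from the nginx state)

- virtual_ipaddress: the virtual IP (VIP)

- track_script: the name of the script to track

Prepare the nginx health-check script referenced in the configuration above: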

cat > /etc/keepalived/check_nginx.sh << "EOF"

#!/bin/bash

code=$(curl -k https://127.0.0.1:16443/version -s -o /dev/null -w %{http_code})

if [ "$code" -ne 200 ];then # any code other than 200 (including 000 when nothing answers) counts as a failure

exit 1

else

exit 0

fi

EOF

chmod +x /etc/keepalived/check_nginx.sh

systemctl daemon-reload

systemctl start nginx && systemctl enable nginx

systemctl start keepalived && systemctl enable keepalived

keepalived configuration file (Nginx Backup)

- Machines: the master HA machines that act as backups, i.e. k8s-master02 and k8s-master03 above

cat > /etc/keepalived/keepalived.conf << EOF

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_BACKUP

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

state BACKUP # This node is a backup server

interface ens33

virtual_router_id 51 # VRRP router ID; unique per VRRP instance

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

# Virtual IP

virtual_ipaddress {

192.168.2.88/24

}

track_script {

check_nginx

}

}

EOF

Prepare the nginx health-check script referenced in the configuration above:

cat > /etc/keepalived/check_nginx.sh << "EOF"

#!/bin/bash

code=$(curl -k https://127.0.0.1:16443/version -s -o /dev/null -w %{http_code})

if [ "$code" -ne 200 ];then # any code other than 200 (including 000 when nothing answers) counts as a failure

exit 1

else

exit 0

fi

EOF

chmod +x /etc/keepalived/check_nginx.sh

[root@k8s-master01 keepalived]# curl -k https://127.0.0.1:16443/version -s -o /dev/null -w %{http_code}

000[root@k8s-master01 keepalived]#

[root@k8s-master01 keepalived]#

Note: keepalived decides whether to fail over based on the script's exit status (0 means healthy, non-zero means unhealthy).
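To make the exit-status rule concrete, here is a minimal sketch of the mapping from the HTTP code printed by curl to the status keepalived sees. `check_status` is a hypothetical helper written for this illustration, and comparing the code as a string (rather than with an integer test) is a choice made here so that an empty or non-numeric value is also treated as unhealthy.

```shell
# Sketch: map the HTTP code from the curl probe to a health-check exit status.
# check_status is a hypothetical name; it is not part of keepalived.
check_status() {
    local code="$1"
    if [ "$code" = "200" ]; then
        return 0   # healthy: keepalived keeps the VIP where it is
    fi
    return 1       # unhealthy: 000 (no HTTP response), 5xx, empty, and so on
}
```

With the 000 shown above, `check_status 000` returns 1, which is the status the check script reports while nothing answers on port 16443.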

Start the services and enable them at boot

- Machines: the master HA machines, i.e. k8s-master01, k8s-master02, and k8s-master03 above

systemctl daemon-reload && systemctl start nginx keepalived && systemctl enable nginx keepalived

Check keepalived's working state

[root@k8s-master01 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:50:56:27:30:f6 brd ff:ff:ff:ff:ff:ff

inet 192.168.2.121/24 brd 192.168.2.255 scope global noprefixroute dynamic ens33

valid_lft 24069sec preferred_lft 24069sec

inet 192.168.2.88/24 scope global secondary ens33

valid_lft forever preferred_lft forever

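[root@k8s-master01 ~]#

As shown, the virtual IP 192.168.2.88 is bound to the ens33 interface, which means keepalived is working.

nginx + keepalived high-availability test

- Stop nginx on the primary node and check whether the VIP floats to a backup server.

- On the nginx master, run pkill nginx

- On the nginx backup, run ip addr and confirm that the VIP is now bound there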
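The manual check in the test above can be scripted. The following is a small sketch, assuming the `ip addr` output format shown earlier; `holds_vip` is a hypothetical helper that reads that output on stdin and succeeds only if the exact VIP is bound.

```shell
# Sketch: succeed if the given VIP appears as an "inet" address in `ip addr` output.
# holds_vip is a hypothetical helper; it reads the command output from stdin.
holds_vip() {
    local vip="$1"
    awk -v vip="$vip" '
        $1 == "inet" {
            split($2, parts, "/")          # "192.168.2.88/24" -> "192.168.2.88"
            if (parts[1] == vip) found = 1
        }
        END { exit (found ? 0 : 1) }
    '
}
```

Usage on any of the three load balancer machines: `ip addr show ens33 | holds_vip 192.168.2.88 && echo "this node holds the VIP"`. Run before and after pkill nginx, exactly one node should report holding the VIP each time.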

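3. Deploying the etcd cluster

etcd is a distributed key-value store that Kubernetes uses for its data. By default, a kubeadm-built cluster starts only one etcd Pod, which is a single point of failure and is strongly discouraged in production. Here three servers form the cluster, which tolerates the failure of one machine; you can also form the etcd cluster from five servers, which tolerates the failure of two.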
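The failure tolerance figures follow from the majority (quorum) rule: a cluster of n members needs n/2 + 1 members (integer division) to agree, so it survives the loss of (n - 1)/2 members. A quick sketch of that arithmetic, with hypothetical helper names:

```shell
# Sketch: quorum size and tolerated failures for an etcd cluster of N members.
# Both helper names are illustrative only.
etcd_quorum() { echo $(( $1 / 2 + 1 )); }
etcd_fault_tolerance() { echo $(( ($1 - 1) / 2 )); }

for n in 1 3 4 5; do
    echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_fault_tolerance "$n")"
done
```

Four members tolerate the same single failure as three, which is why odd cluster sizes are the usual choice.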

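Note: in this test environment etcd shares machines with the k8s nodes; in production, use an external etcd cluster.

Deploying etcd on machines outside the k8s cluster is recommended. Keep them in the same network segment as the k8s nodes where possible, and make sure they can reach the apiserver (a different segment also works).

| Node name | IP address | Hostname |
|---|---|---|
| etcd-1 | 192.168.2.121 | k8s-master01 |
| etcd-2 | 192.168.2.167 | k8s-master02 |
| etcd-3 | 192.168.2.190 | k8s-node01 |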

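Prepare the cfssl certificate tool

cfssl is an open-source certificate management tool that generates certificates from JSON files and is more convenient than openssl.

Any server will do for this step; a master node is used here.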

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64

cp cfssl_linux-amd64 /usr/local/bin/cfssl

cp cfssljson_linux-amd64 /usr/local/bin/cfssljson

cp cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

Generate the etcd certificates

Self-signed certificate authority (CA)

Create the working directory

mkdir -pv ~/tls/{k8s,etcd}

cd ~/tls/etcd

Self-signed CA configuration files:

cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"www": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat > ca-csr.json << EOF

{

"CN": "etcd CA",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing"

}

]

}

EOF

Generate the certificate:

This produces the ca.pem and ca-key.pem files

[root@k8s-master02 etcd]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca

2023/08/01 11:21:04 [INFO] generating a new CA key and certificate from CSR

2023/08/01 11:21:04 [INFO] generate received request

2023/08/01 11:21:04 [INFO] received CSR

2023/08/01 11:21:04 [INFO] generating key: rsa-2048

2023/08/01 11:21:05 [INFO] encoded CSR

2023/08/01 11:21:05 [INFO] signed certificate with serial number 301134860050526561330736851269669790962082128129

[root@k8s-master02 etcd]# ls

ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem

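[root@k8s-master02 etcd]#

Issue the etcd HTTPS certificate with the self-signed CA

Create the certificate signing request file:

Note: the IPs in the hosts field of the file below are the cluster-internal communication IPs of all etcd nodes; none may be left out. To make later scale-out easier, you can add a few reserved IPs.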

cat > server-csr.json << EOF

{

"CN": "etcd",

"hosts": [

"192.168.2.121",

"192.168.2.167",

"192.168.2.190"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing"

}

]

}

EOF

Generate the certificate:

This produces the server.pem and server-key.pem files

[root@k8s-master02 etcd]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

2023/08/01 11:24:57 [INFO] generate received request

2023/08/01 11:24:57 [INFO] received CSR

2023/08/01 11:24:57 [INFO] generating key: rsa-2048

2023/08/01 11:24:57 [INFO] encoded CSR

2023/08/01 11:24:57 [INFO] signed certificate with serial number 5650728843620614547086206124547476807529703940

2023/08/01 11:24:57 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

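[root@k8s-master02 etcd]#

Deploy etcd

Download the etcd binaries from GitHub

Download URL: https://github.com/etcd-io/etcd/releases/download/v3.4.9/etcd-v3.4.9-linux-amd64.tar.gz

Deploy etcd

Perform the following on node 1; to keep things simple, all files generated on node 1 are later copied to node 2 and node 3.

- Create the working directories and unpack the binary package

mkdir /opt/etcd/{bin,cfg,ssl} -p

tar zxvf etcd-v3.4.9-linux-amd64.tar.gz

mv etcd-v3.4.9-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/

- Create the etcd configuration file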

cat > /opt/etcd/cfg/etcd.conf << EOF

#[Member]

ETCD_NAME="etcd-2"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.2.167:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.2.167:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.2.167:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.2.167:2379"

ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.2.121:2380,etcd-2=https://192.168.2.167:2380,etcd-3=https://192.168.2.190:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

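EOF

- ETCD_NAME: node name, unique within the cluster

- ETCD_DATA_DIR: data directory

- ETCD_LISTEN_PEER_URLS: listen address for cluster (peer) communication

- ETCD_LISTEN_CLIENT_URLS: listen address for client access

- ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the cluster

- ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients

- ETCD_INITIAL_CLUSTER: addresses of the cluster nodes

- ETCD_INITIAL_CLUSTER_TOKEN: cluster token

- ETCD_INITIAL_CLUSTER_STATE: state when joining; new means a new cluster, existing means joining an existing cluster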
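Only ETCD_NAME and the four URLs that carry the node's own IP differ between nodes, so the per-node file can be generated instead of edited by hand. The sketch below assumes the three-node layout from the table above; `render_etcd_conf` is a hypothetical helper, not something shipped with etcd.

```shell
# Sketch: print an etcd.conf for one node, given its name and IP.
# render_etcd_conf is a hypothetical helper; the cluster list is the one used in this article.
render_etcd_conf() {
    local name="$1" ip="$2"
    local cluster="etcd-1=https://192.168.2.121:2380,etcd-2=https://192.168.2.167:2380,etcd-3=https://192.168.2.190:2380"
    cat << EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${cluster}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}
```

For example, on k8s-node01: `render_etcd_conf etcd-3 192.168.2.190 > /opt/etcd/cfg/etcd.conf`.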

- Manage etcd with systemd

cat > /usr/lib/systemd/system/etcd.service << EOF

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/opt/etcd/cfg/etcd.conf

ExecStart=/opt/etcd/bin/etcd \

--cert-file=/opt/etcd/ssl/server.pem \

--key-file=/opt/etcd/ssl/server-key.pem \

--peer-cert-file=/opt/etcd/ssl/server.pem \

--peer-key-file=/opt/etcd/ssl/server-key.pem \

--trusted-ca-file=/opt/etcd/ssl/ca.pem \

--peer-trusted-ca-file=/opt/etcd/ssl/ca.pem \

--logger=zap

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

- Copy the certificates generated earlier to the paths used in the configuration

cp ~/tls/etcd/ca*pem ~/tls/etcd/server*pem /opt/etcd/ssl/

- Copy all files generated on node 1 above to node 2 and node 3

scp -r /opt/etcd/ root@192.168.2.121:/opt/

scp /usr/lib/systemd/system/etcd.service root@192.168.2.121:/usr/lib/systemd/system/

scp -r /opt/etcd/ root@192.168.2.190:/opt/

scp /usr/lib/systemd/system/etcd.service root@192.168.2.190:/usr/lib/systemd/system/

- Change the etcd name, then start etcd and enable it at boot

On node 2 and node 3, edit the node name and the current server's IP in the etcd.conf configuration file

vi /opt/etcd/cfg/etcd.conf

#[Member]

ETCD_NAME="etcd-2"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.2.167:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.2.167:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.2.167:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.2.167:2379"

ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.2.121:2380,etcd-2=https://192.168.2.167:2380,etcd-3=https://192.168.2.190:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"最后启动etcd并设置开机启动

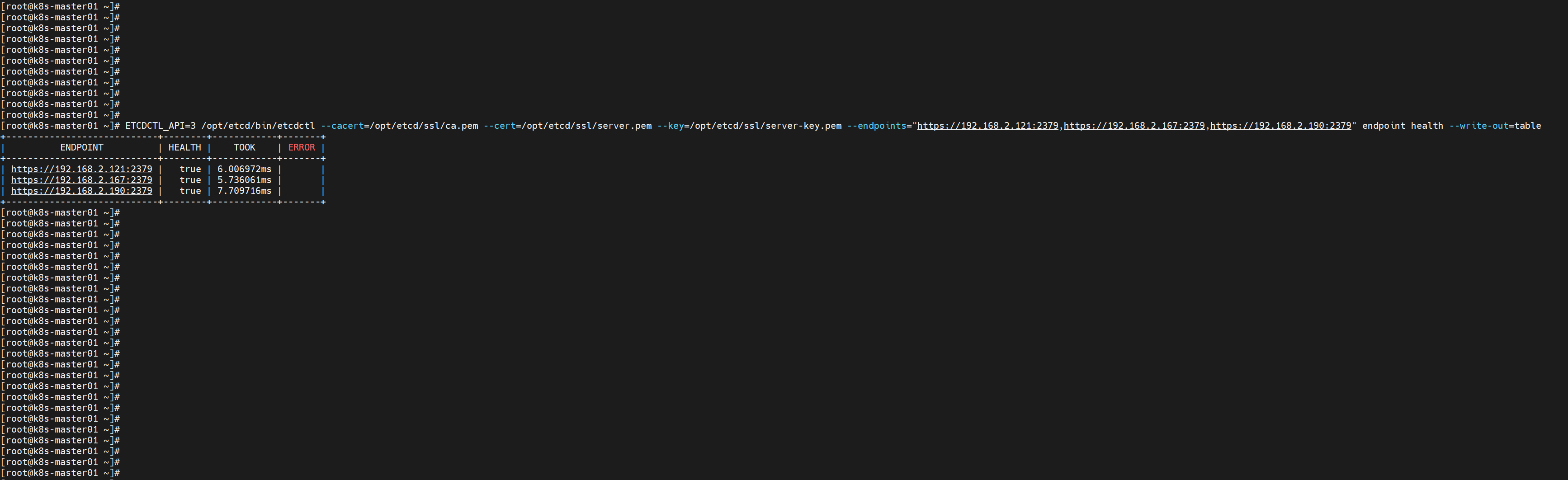

systemctl daemon-reload && systemctl start etcd && systemctl enable etcd- 查看集群状态 检查命令

ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.2.121:2379,https://192.168.2.167:2379,https://192.168.2.190:2379" endpoint health --write-out=table检查结果

如果输出下面信息,就说明集群部署成功。

如果有问题第一步先看日志:/var/log/message 或 journalctl -u etcd

ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.2.121:2379,https://192.168.2.167:2379,https://192.168.2.190:2379" endpoint health --write-out=table

4. Installing Docker/kubeadm/kubectl/kubelet

Configure the repositories

- Machines: all machines in the k8s cluster, i.e. k8s-master01, k8s-master02, k8s-master03, k8s-node01, and k8s-node02 above

Official Docker repository

sudo yum install -y yum-utils

sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

Install docker-ce

sudo yum install -y docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

Start docker

systemctl daemon-reload && systemctl enable docker && systemctl start docker

Configure the kubernetes repository

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-\$basearch

enabled=1

gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

exclude=kubelet kubeadm kubectl

EOF

# Set SELinux in permissive mode (effectively disabling it)

# Already done above:

# sudo setenforce 0 && sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

# The packages are installed with pinned versions by the commands below, instead of:

# sudo yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

# sudo systemctl enable --now kubelet

Install kubeadm/kubelet/kubectl

sudo yum install -y kubelet-1.25.0 kubeadm-1.25.0 kubectl-1.25.0 --disableexcludes=kubernetes

# After installation, enable kubelet at boot

sudo systemctl enable --now kubelet

5. Deploying the Kubernetes Master

Initialize Master1

Machine: k8s-master01

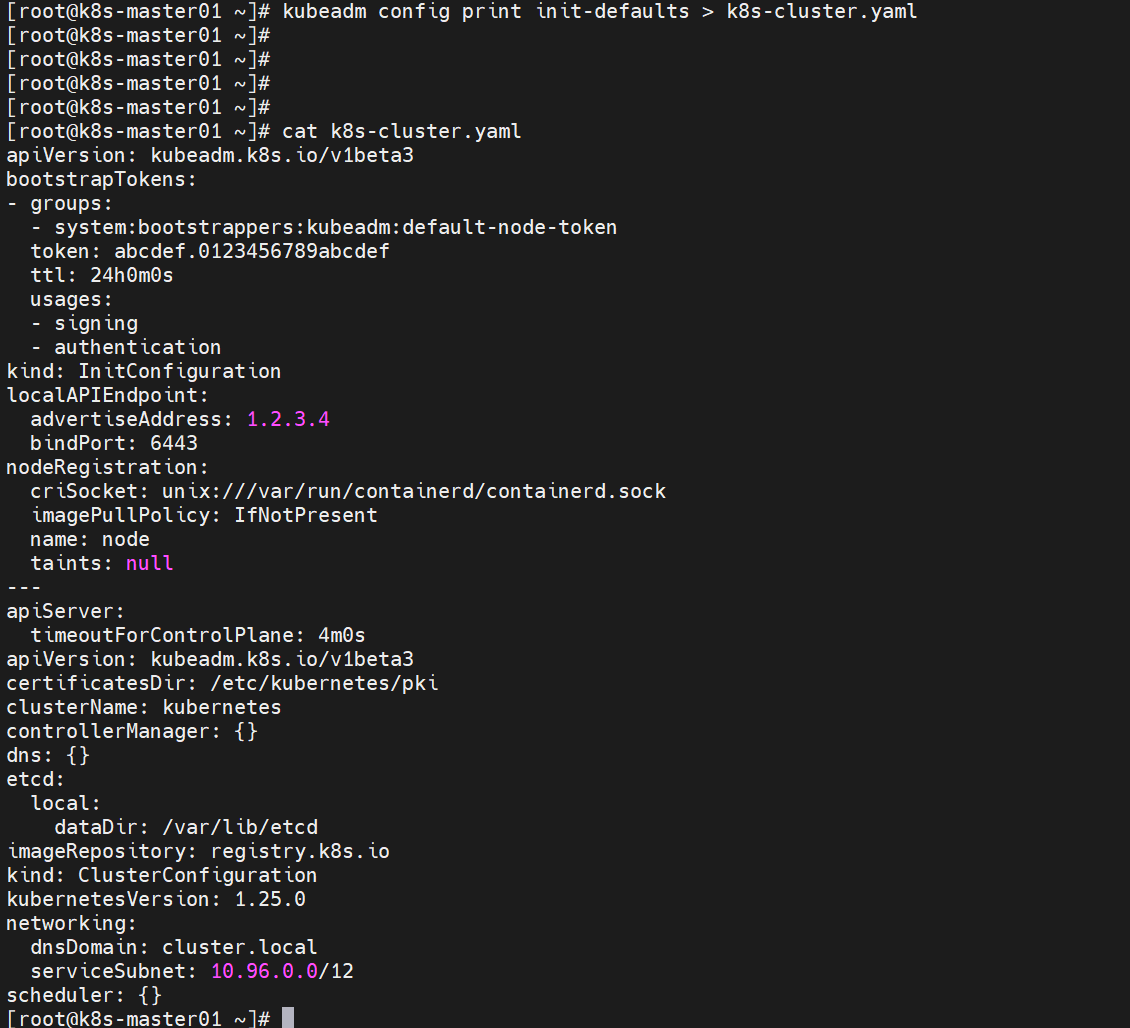

Obtain a kubeadm-config.yaml template with the following command

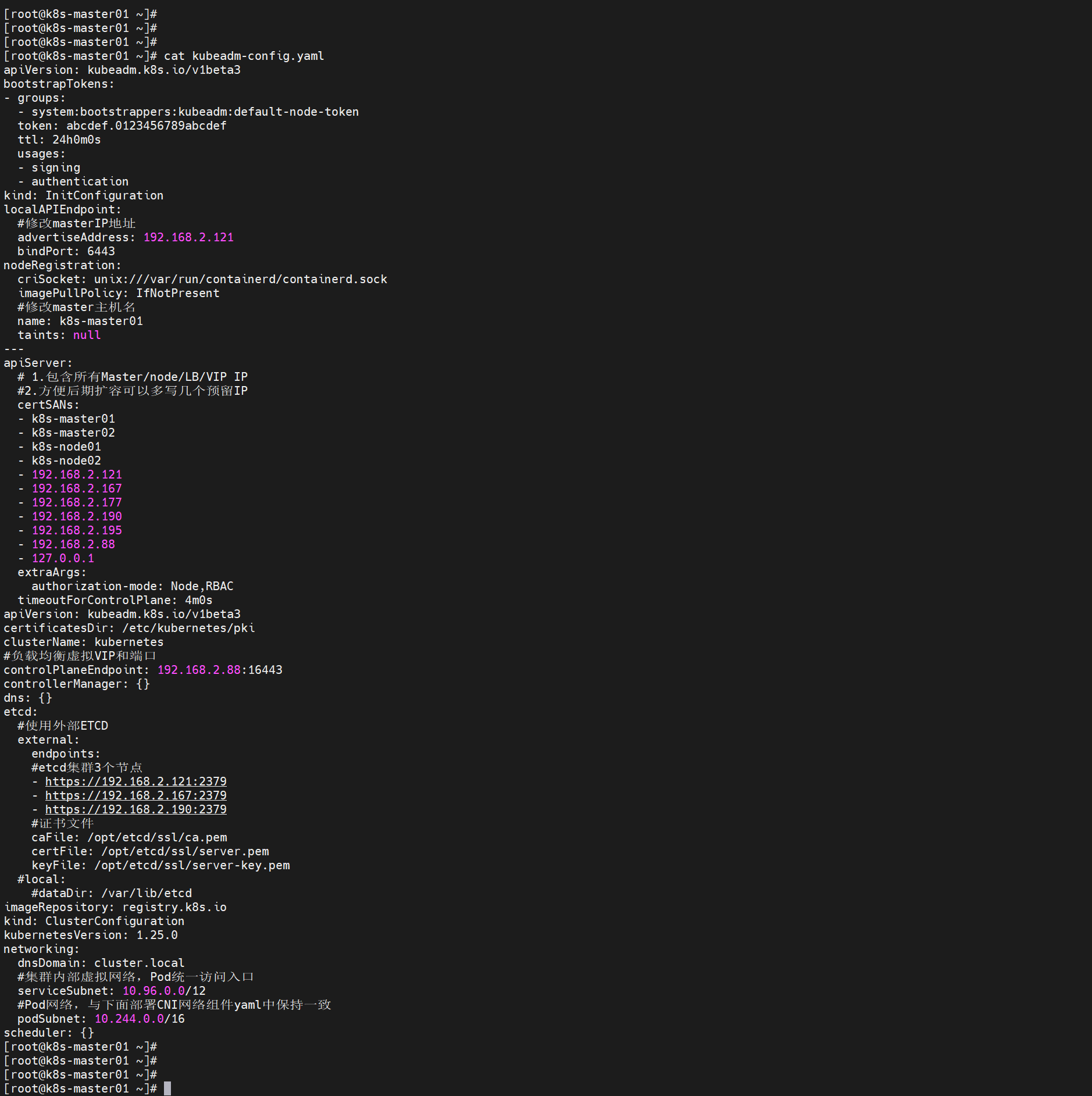

kubeadm config print init-defaults > kubeadm-config.yaml

Modify the following settings

- advertiseAddress: the IP address of k8s-master01

- name: the hostname of k8s-master01

- Below clusterName, add: controlPlaneEndpoint: 192.168.2.88:16443 # load balancer VIP and port

clusterName: kubernetes

controlPlaneEndpoint: 192.168.2.88:16443

- Under networking, after dnsDomain, set serviceSubnet: 10.96.0.0/12 and add podSubnet: 10.244.0.0/16

networking:

  dnsDomain: cluster.local

  serviceSubnet: 10.96.0.0/12 # Cluster-internal virtual network, the unified access entry for Pods

  podSubnet: 10.244.0.0/16 # Pod network; must match the YAML of the CNI network component deployed later

- Comment out the two etcd lines: #local: and #dataDir: /var/lib/etcd

etcd:

  #local:

    #dataDir: /var/lib/etcd

- Under etcd, add the external section:

  external: # Use the external etcd cluster

    endpoints:

    - https://192.168.2.121:2379 # the 3 etcd cluster nodes

    - https://192.168.2.167:2379

    - https://192.168.2.190:2379

    caFile: /opt/etcd/ssl/ca.pem # Certificate files

    certFile: /opt/etcd/ssl/server.pem

    keyFile: /opt/etcd/ssl/server-key.pem

- Under apiServer, add certSANs

apiServer:

  certSANs:

  - k8s-master01

  - k8s-master02

  - k8s-master03

  - k8s-node01

  - k8s-node02

  - 192.168.2.121

  - 192.168.2.167

  - 192.168.2.177

  - 192.168.2.190

  - 192.168.2.195

  - 192.168.2.88

  - 127.0.0.1

The following is a reference configuration
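The original reference file is not reproduced in this text, so the sketch below only assembles the modifications listed above into one file. It assumes the kubeadm.k8s.io/v1beta3 configuration API used by kubeadm 1.25 and the containerd socket path that appears in the error output later; every field that `kubeadm config print init-defaults` emits but that is not discussed above (bootstrap tokens, image repository, and so on) is left out here and should be kept from the generated template. `render_kubeadm_config` is a hypothetical helper name.

```shell
# Sketch: print a kubeadm-config.yaml containing only the settings changed in this article.
# Assumption: kubeadm.k8s.io/v1beta3; other defaults from `print init-defaults` are omitted.
render_kubeadm_config() {
    cat << 'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.2.121
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
  name: k8s-master01
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: 1.25.0
clusterName: kubernetes
controlPlaneEndpoint: 192.168.2.88:16443
apiServer:
  certSANs:
  - k8s-master01
  - k8s-master02
  - k8s-master03
  - k8s-node01
  - k8s-node02
  - 192.168.2.121
  - 192.168.2.167
  - 192.168.2.177
  - 192.168.2.190
  - 192.168.2.195
  - 192.168.2.88
  - 127.0.0.1
etcd:
  external:
    endpoints:
    - https://192.168.2.121:2379
    - https://192.168.2.167:2379
    - https://192.168.2.190:2379
    caFile: /opt/etcd/ssl/ca.pem
    certFile: /opt/etcd/ssl/server.pem
    keyFile: /opt/etcd/ssl/server-key.pem
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
EOF
}
```

Usage: `render_kubeadm_config > kubeadm-config.yaml`, then compare it against the generated template before running kubeadm init.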

Initialize the first Master

Tip: initialization command

kubeadm init --config kubeadm-config.yaml

Initialization errors

[root@k8s-master01 ~]# kubeadm init --config kubeadm-config.yaml

[init] Using Kubernetes version: v1.25.0

[preflight] Running pre-flight checks

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR CRI]: container runtime is not running: output: E0801 05:27:02.270428 10900 remote_runtime.go:616] "Status from runtime service failed" err="rpc error: code = Unavailable desc = connection error: desc = \"transport: Error while dialing dial unix /var/run/containerd/containerd.sock: connect: no such file or directory\""

time="2023-08-01T05:27:02+08:00" level=fatal msg="getting status of runtime: rpc error: code = Unavailable desc = connection error: desc = \"transport: Error while dialing dial unix /var/run/containerd/containerd.sock: connect: no such file or directory\""

, error: exit status 1

[ERROR FileContent--proc-sys-net-ipv4-ip_forward]: /proc/sys/net/ipv4/ip_forward contents are not set to 1

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

[root@k8s-master01 ~]#

Error 1

WARNING Service-Kubelet: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

Fix command

[root@k8s-master01 ~]# systemctl enable kubelet.service

Error 2

ERROR FileContent--proc-sys-net-ipv4-ip_forward: /proc/sys/net/ipv4/ip_forward contents are not set to 1

Fix:

cat > /etc/sysctl.d/kubernetes.conf <<EOF

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

EOF

sysctl -p /etc/sysctl.d/kubernetes.conf

Error 3

ERROR CRI: container runtime is not running: output: E0801 05:30:20.549072 10966 remote_runtime.go:616] "Status from runtime service failed" err="rpc error: code = Unavailable desc = connection error: desc = "transport: Error while dialing dial unix /var/run/containerd/containerd.sock: connect: no such file or directory""

Fix

[root@k8s-master01 ~]# mv /etc/containerd/config.toml /etc/containerd/config.toml.rpm

[root@k8s-master01 ~]# systemctl restart containerd

Error 4

[root@k8s-master01 ~]# kubeadm init --config kubeadm-config.yaml

[init] Using Kubernetes version: v1.25.0

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master01 k8s-master02 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.2.121 192.168.2.88 192.168.2.167 192.168.2.190 127.0.0.1]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] External etcd mode: Skipping etcd/ca certificate authority generation

[certs] External etcd mode: Skipping etcd/server certificate generation

[certs] External etcd mode: Skipping etcd/peer certificate generation

[certs] External etcd mode: Skipping etcd/healthcheck-client certificate generation

[certs] External etcd mode: Skipping apiserver-etcd-client certificate generation

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

W0801 05:36:13.610645 11004 endpoint.go:57] [endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "admin.conf" kubeconfig file

W0801 05:36:13.676947 11004 endpoint.go:57] [endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "kubelet.conf" kubeconfig file

W0801 05:36:13.745630 11004 endpoint.go:57] [endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

W0801 05:36:13.778474 11004 endpoint.go:57] [endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

Unfortunately, an error has occurred:

timed out waiting for the condition

This error is likely caused by:

- The kubelet is not running

- The kubelet is unhealthy due to a misconfiguration of the node in some way (required cgroups disabled)

If you are on a systemd-powered system, you can try to troubleshoot the error with the following commands:

- 'systemctl status kubelet'

- 'journalctl -xeu kubelet'

Additionally, a control plane component may have crashed or exited when started by the container runtime.

To troubleshoot, list all containers using your preferred container runtimes CLI.

Here is one example how you may list all running Kubernetes containers by using crictl:

- 'crictl --runtime-endpoint unix:///var/run/containerd/containerd.sock ps -a | grep kube | grep -v pause'

Once you have found the failing container, you can inspect its logs with:

- 'crictl --runtime-endpoint unix:///var/run/containerd/containerd.sock logs CONTAINERID'

error execution phase wait-control-plane: couldn't initialize a Kubernetes cluster

To see the stack trace of this error execute with --v=5 or higher

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# crictl --runtime-endpoint unix:///var/run/containerd/containerd.sock ps -a | grep kube | grep -v pause

0fa7b5efdec0f 1a54c86c03a67 11 minutes ago Running kube-controller-manager 0 054bdedbccb64 kube-controller-manager-k8s-master01

5ac88000d9924 bef2cf3115095 11 minutes ago Running kube-scheduler 0 7ec15a13760cd kube-scheduler-k8s-master01

d2d37f716454f 4d2edfd10d3e3 11 minutes ago Running kube-apiserver 0 9eafc487b0f54 kube-apiserver-k8s-master01

[root@k8s-master01 ~]#修复命令

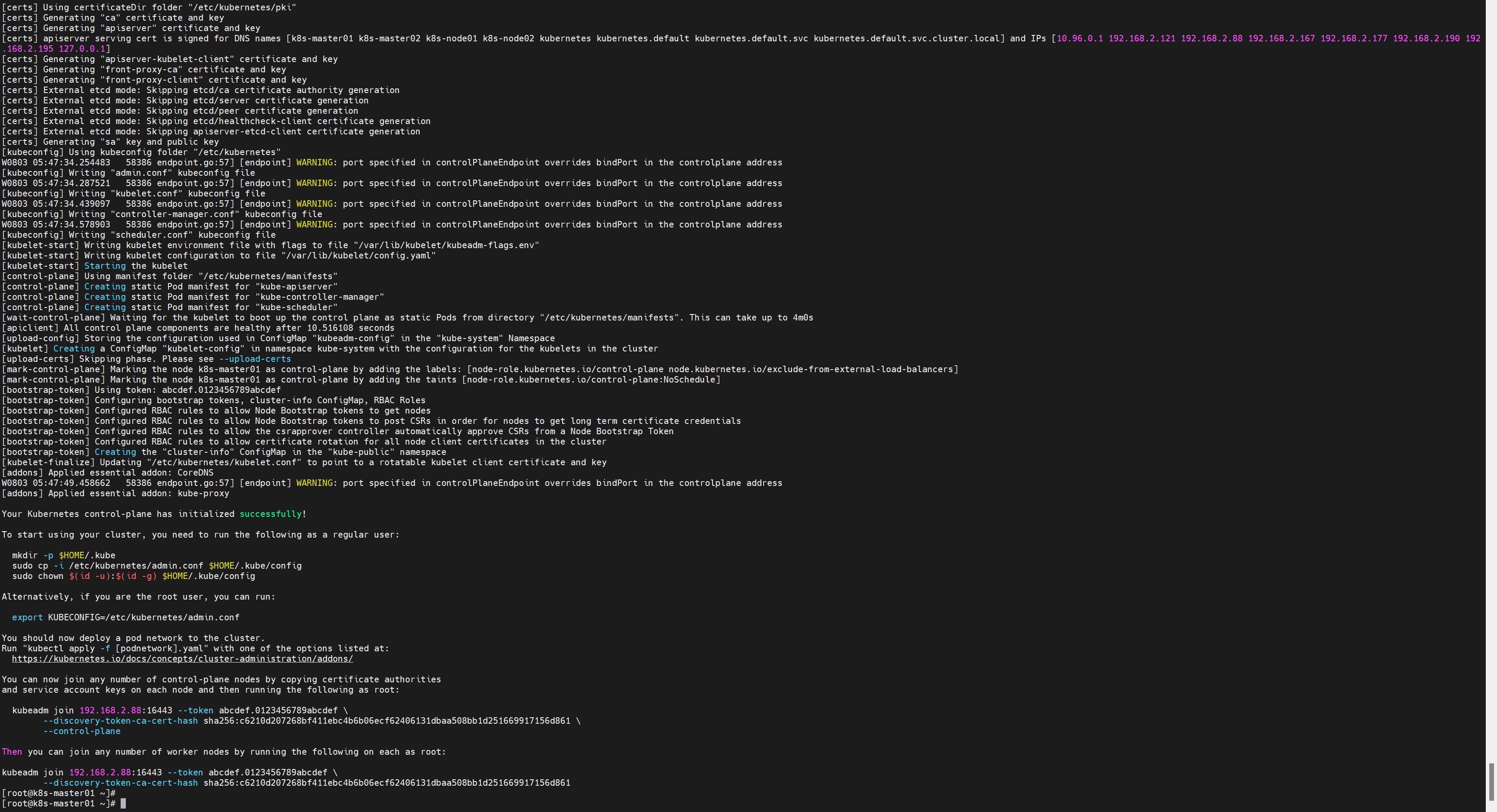

systemctl restart kubelet && systemctl enable kubelet正常部署

初始化完成后,会有两个join的命令

- 带有--control-plane是用于加入组建多master集群的

- 不带的是加入node节点的。
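The two commands differ only in the --control-plane flag, which the sketch below makes explicit. `join_command` is a hypothetical helper that just assembles the string; `ca_cert_hash` follows the openssl pipeline documented for kubeadm join to recompute the value after sha256: from the cluster CA, assuming the default path /etc/kubernetes/pki/ca.crt. If the printed commands are lost or the bootstrap token has expired, `kubeadm token create --print-join-command` on a master prints a fresh worker join command.

```shell
# Sketch: recompute the discovery hash and assemble the two kubeadm join variants.
# Both function names are illustrative; only the kubeadm/openssl commands are real tools.
ca_cert_hash() {
    # Prints the sha256 of the CA public key (the part after "sha256:").
    openssl x509 -pubkey -in "${1:-/etc/kubernetes/pki/ca.crt}" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex | sed 's/^.* //'
}

join_command() {
    local endpoint="$1" token="$2" hash="$3" role="${4:-worker}"
    local cmd="kubeadm join ${endpoint} --token ${token} --discovery-token-ca-cert-hash sha256:${hash}"
    if [ "$role" = "control-plane" ]; then
        cmd="${cmd} --control-plane"   # additional master instead of a worker
    fi
    echo "$cmd"
}
```

Usage on k8s-master01: `join_command 192.168.2.88:16443 abcdef.0123456789abcdef "$(ca_cert_hash)" control-plane`.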

[root@k8s-master01 ~]#

[root@k8s-master01 ~]# kubeadm init --config kubeadm-config.yaml

[init] Using Kubernetes version: v1.25.0

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master01 k8s-master02 k8s-node01 k8s-node02 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.2.121 192.168.2.88 192.168.2.167 192.168.2.177 192.168.2.190 192.168.2.195 127.0.0.1]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] External etcd mode: Skipping etcd/ca certificate authority generation

[certs] External etcd mode: Skipping etcd/server certificate generation

[certs] External etcd mode: Skipping etcd/peer certificate generation

[certs] External etcd mode: Skipping etcd/healthcheck-client certificate generation

[certs] External etcd mode: Skipping apiserver-etcd-client certificate generation

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

W0803 05:47:34.254483 58386 endpoint.go:57] [endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "admin.conf" kubeconfig file

W0803 05:47:34.287521 58386 endpoint.go:57] [endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "kubelet.conf" kubeconfig file

W0803 05:47:34.439097 58386 endpoint.go:57] [endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

W0803 05:47:34.578903 58386 endpoint.go:57] [endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 10.516108 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8s-master01 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node k8s-master01 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

W0803 05:47:49.458662 58386 endpoint.go:57] [endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join 192.168.2.88:16443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:c6210d207268bf411ebc4b6b06ecf62406131dbaa508bb1d251669917156d861 \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.2.88:16443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:c6210d207268bf411ebc4b6b06ecf62406131dbaa508bb1d251669917156d861

Copy the kubeconfig file that kubectl uses to authenticate to the cluster to its default path:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@k8s-master01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady control-plane 6m34s v1.25.0

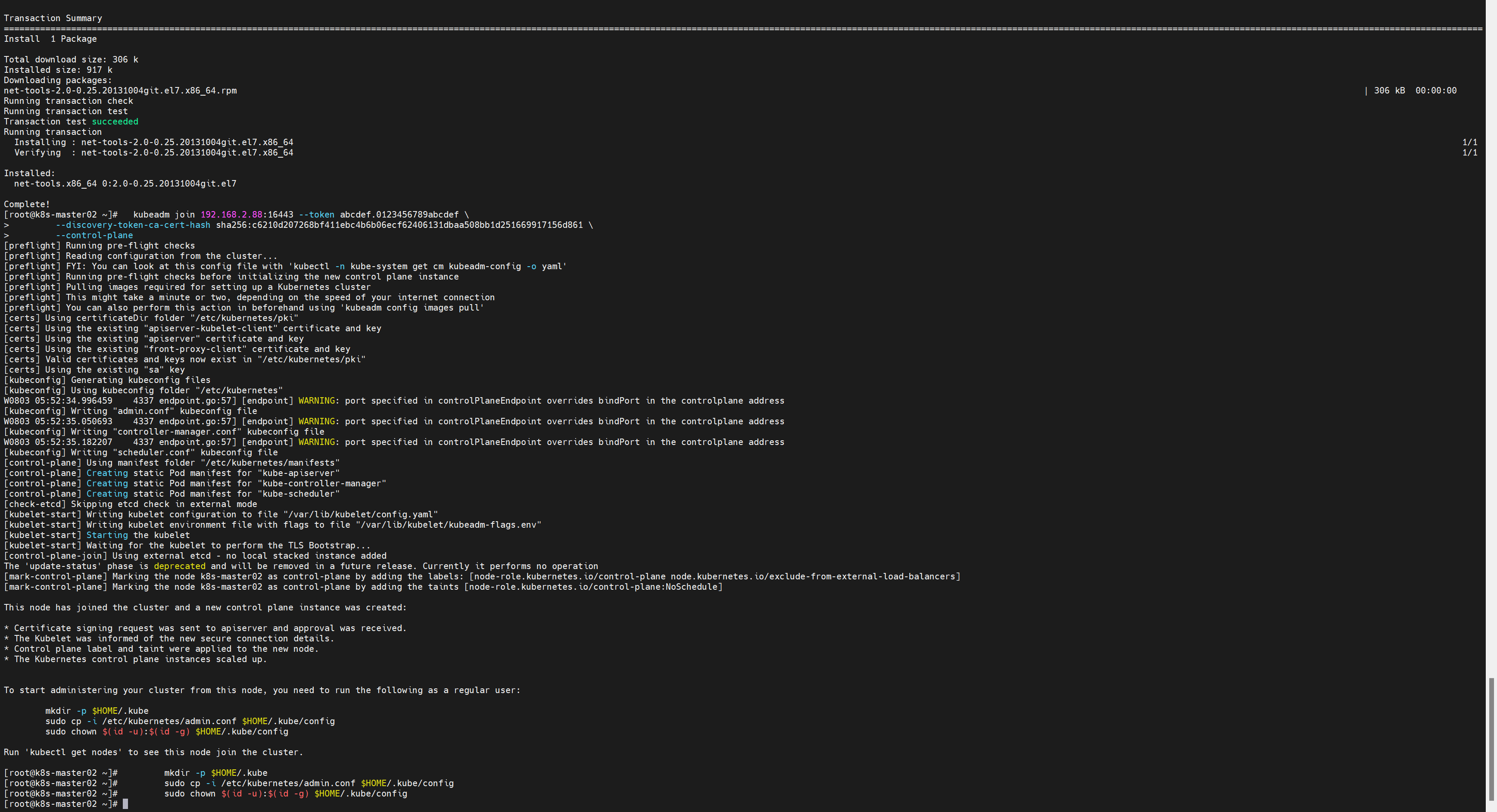

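Initialize the second and third masters (or more)

- Operating machine: k8s-master01

Copy the certificates generated on the first master to the second and any other master nodes (that is, copy the pki directory from k8s-master01 to k8s-master02 and k8s-master03).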

scp -r /etc/kubernetes/pki/ 192.168.2.167:/etc/kubernetes/

scp -r /etc/kubernetes/pki/ 192.168.2.177:/etc/kubernetes/

[root@k8s-master01 ~]# scp -r /etc/kubernetes/pki/ 192.168.2.167:/etc/kubernetes/

The authenticity of host '192.168.2.167 (192.168.2.167)' can't be established.

ECDSA key fingerprint is SHA256:aOtBIt3Aw8fKBrIR8V0mm12vCj8zBj4Yn5M8x//Aghg.

ECDSA key fingerprint is MD5:0f:cc:4a:9e:8c:e0:8f:83:06:71:10:59:76:c8:74:e2.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.2.167' (ECDSA) to the list of known hosts.

root@192.168.2.167's password:

ca.key 100% 1675 2.9MB/s 00:00

ca.crt 100% 1099 2.7MB/s 00:00

apiserver.key 100% 1675 3.6MB/s 00:00

apiserver.crt 100% 1346 3.7MB/s 00:00

apiserver-kubelet-client.key 100% 1675 3.9MB/s 00:00

apiserver-kubelet-client.crt 100% 1164 2.3MB/s 00:00

front-proxy-ca.key 100% 1679 3.2MB/s 00:00

front-proxy-ca.crt 100% 1115 2.6MB/s 00:00

front-proxy-client.key 100% 1675 3.9MB/s 00:00

front-proxy-client.crt 100% 1119 2.9MB/s 00:00

sa.key 100% 1675 129.8KB/s 00:00

sa.pub 100% 451 739.4KB/s 00:00
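The kubeadm init output earlier asks only for the certificate authorities and service account keys to be copied to the additional control-plane nodes. As a minimal sketch of a narrower copy than `scp -r /etc/kubernetes/pki/`, assuming root SSH access to the other masters and the same directory layout as in the listing above (no `etcd/` subdirectory under pki); `shared_pki_files` and `copy_shared_pki` are hypothetical helper names, not part of kubeadm:

```shell
#!/usr/bin/env bash
# Sketch only: copy just the CA and service-account key pairs to another master.
# Helper names are made up for this example; PKI_DIR matches the path used above.
PKI_DIR="${PKI_DIR:-/etc/kubernetes/pki}"

# Print the files that every control-plane node has to share.
shared_pki_files() {
  printf '%s\n' ca.crt ca.key sa.key sa.pub front-proxy-ca.crt front-proxy-ca.key
}

# Copy the shared files to one host, e.g.: copy_shared_pki 192.168.2.167
copy_shared_pki() {
  local host="$1" f
  ssh "root@${host}" "mkdir -p ${PKI_DIR}" || return 1
  for f in $(shared_pki_files); do
    scp "${PKI_DIR}/${f}" "root@${host}:${PKI_DIR}/${f}" || return 1
  done
}
```

With this variant, per-node certificates such as `apiserver.crt` are not copied, so `kubeadm join --control-plane` should generate them on each master from the shared CA.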

Copy the control-plane join command and run it on the other masters:

kubeadm join 192.168.2.88:16443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:c6210d207268bf411ebc4b6b06ecf62406131dbaa508bb1d251669917156d861 \

--control-plane

Note: the nodes report NotReady because the network plugin has not been deployed yet.

[root@k8s-master02 etcd]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady control-plane 15m v1.25.0

k8s-master02 NotReady control-plane 2m37s v1.25.0

k8s-master03 NotReady control-plane 2m37s v1.25.0

k8s-node01 NotReady <none> 101s v1.25.0

k8s-node02 NotReady <none> 101s v1.25.0

Test load balancing

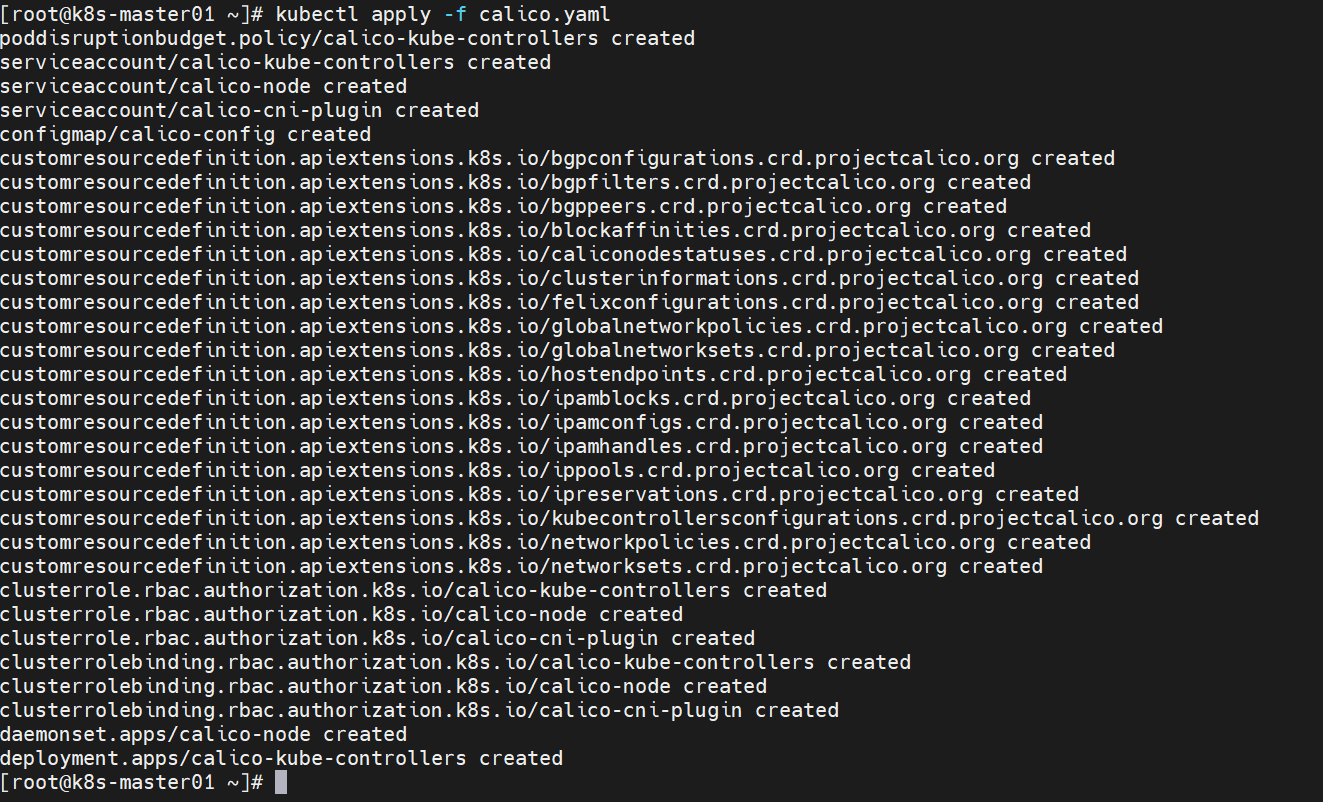

curl -k https://192.168.2.88:16443/version -s -o /dev/null -w %{http_code}

Deploy Calico

[root@k8s-master01 ~]# kubectl apply -f calico.yaml

poddisruptionbudget.policy/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

serviceaccount/calico-node created

serviceaccount/calico-cni-plugin created

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgpfilters.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrole.rbac.authorization.k8s.io/calico-cni-plugin created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-cni-plugin created

daemonset.apps/calico-node created

deployment.apps/calico-kube-controllers created

[root@k8s-master01 ~]# kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-master01 Ready control-plane 116m v1.25.0 192.168.2.121 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 containerd://1.6.22

k8s-master02 Ready control-plane 103m v1.25.0 192.168.2.167 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 containerd://1.6.22

k8s-master03 Ready control-plane 103m v1.25.0 192.168.2.177 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 containerd://1.6.22

k8s-node01 Ready <none> 102m v1.25.0 192.168.2.190 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 containerd://1.6.22

k8s-node02 Ready <none> 102m v1.25.0 192.168.2.195 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 containerd://1.6.22
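Nodes move from NotReady to Ready once the Calico pods are running, which can take a few minutes. A minimal sketch for checking this from a script rather than by eye; `not_ready_nodes` is a hypothetical helper that parses `kubectl get node` output on stdin:

```shell
# Sketch only: list nodes whose STATUS column is anything other than "Ready".
# Reads `kubectl get node` output on stdin; the helper name is made up.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Typical use on a master:
#   kubectl get node | not_ready_nodes
# kubectl can also block until every node reports Ready:
#   kubectl wait --for=condition=Ready node --all --timeout=300s
```

An empty result from `not_ready_nodes` means every listed node is Ready.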

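6. Join Kubernetes nodes

- Operating machines: k8s-node01, k8s-node02

- Copy the kubeadm join command for worker nodes

After master1 has been initialized successfully, copy the worker-node join command from its output and run it on k8s-node01 and k8s-node02.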

kubeadm join 192.168.2.88:16443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:c6210d207268bf411ebc4b6b06ecf62406131dbaa508bb1d251669917156d861

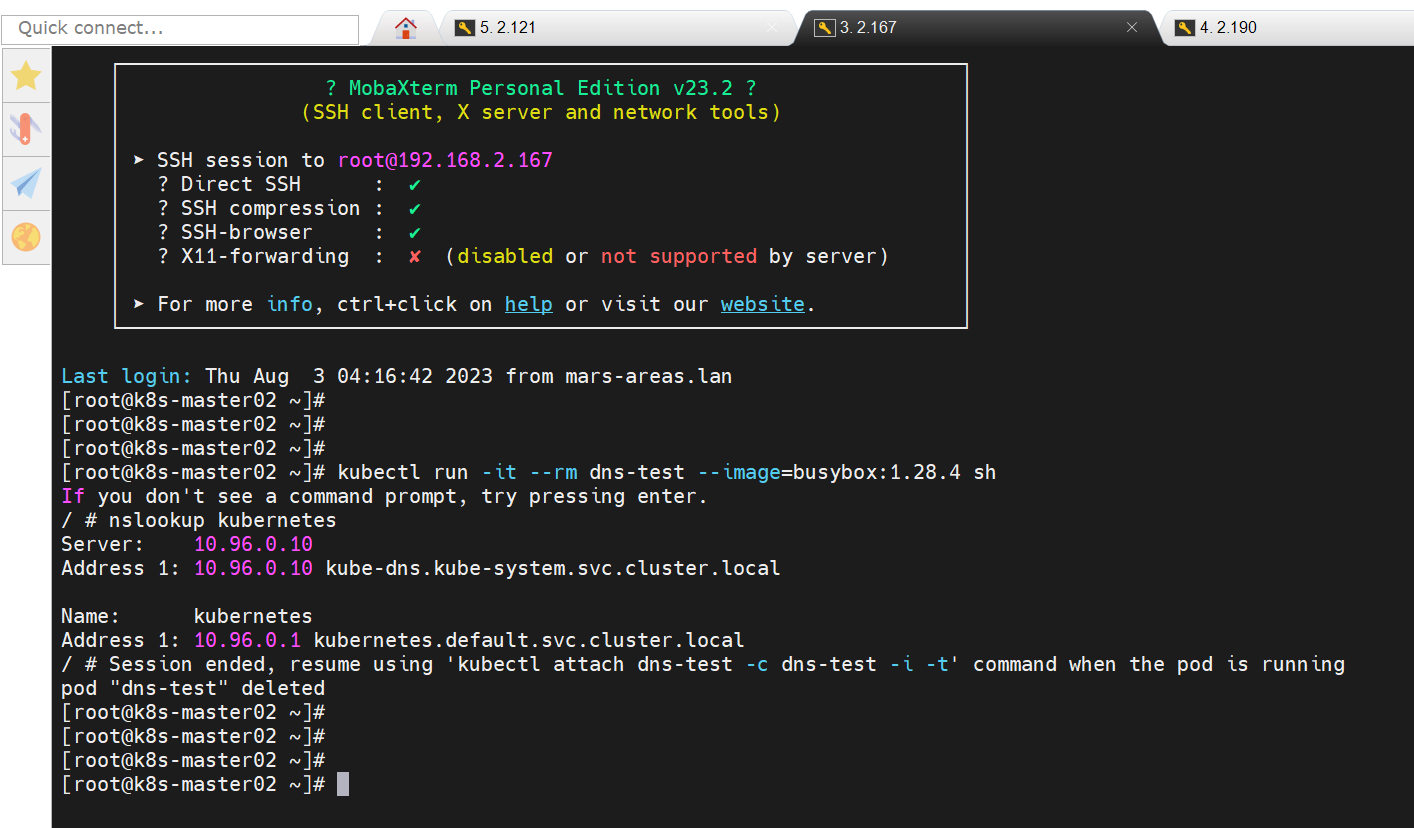

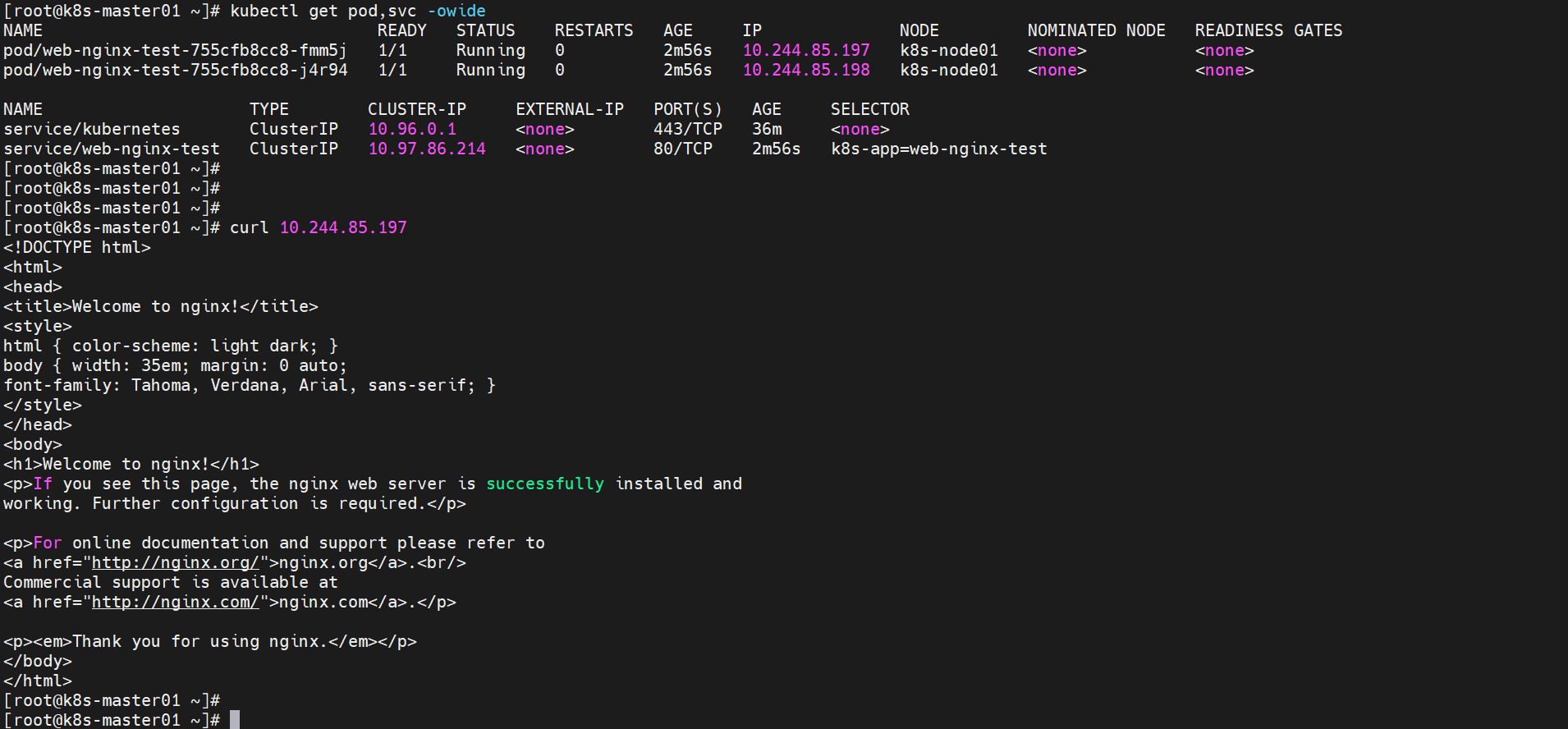
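The join command carries a bootstrap token and a SHA-256 hash of the cluster CA's public key. Tokens created by kubeadm expire after 24 hours by default unless a different TTL was set at init time, so a node added later may need a fresh command (`kubeadm token create --print-join-command` on a master prints one). As a rough sketch, the hash can be recomputed from the CA certificate with openssl, assuming an RSA CA key as kubeadm generates by default; `ca_cert_hash` is a hypothetical helper name:

```shell
# Sketch only: print the value expected after "sha256:" in
# --discovery-token-ca-cert-hash for a given CA certificate.
# Assumes an RSA CA key; the default path is the one used in this article.
ca_cert_hash() {
  local ca_crt="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$ca_crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Typical use on a master:
#   ca_cert_hash /etc/kubernetes/pki/ca.crt
```

The printed value can be compared with the hash in the join command before running it on a new node.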

7. Test the k8s cluster

CoreDNS test

[root@k8s-master01 ~]# kubectl run -it --rm dns-test --image=busybox:1.28.4 sh

If you don't see a command prompt, try pressing enter.

/ # nslookup kubernetes

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

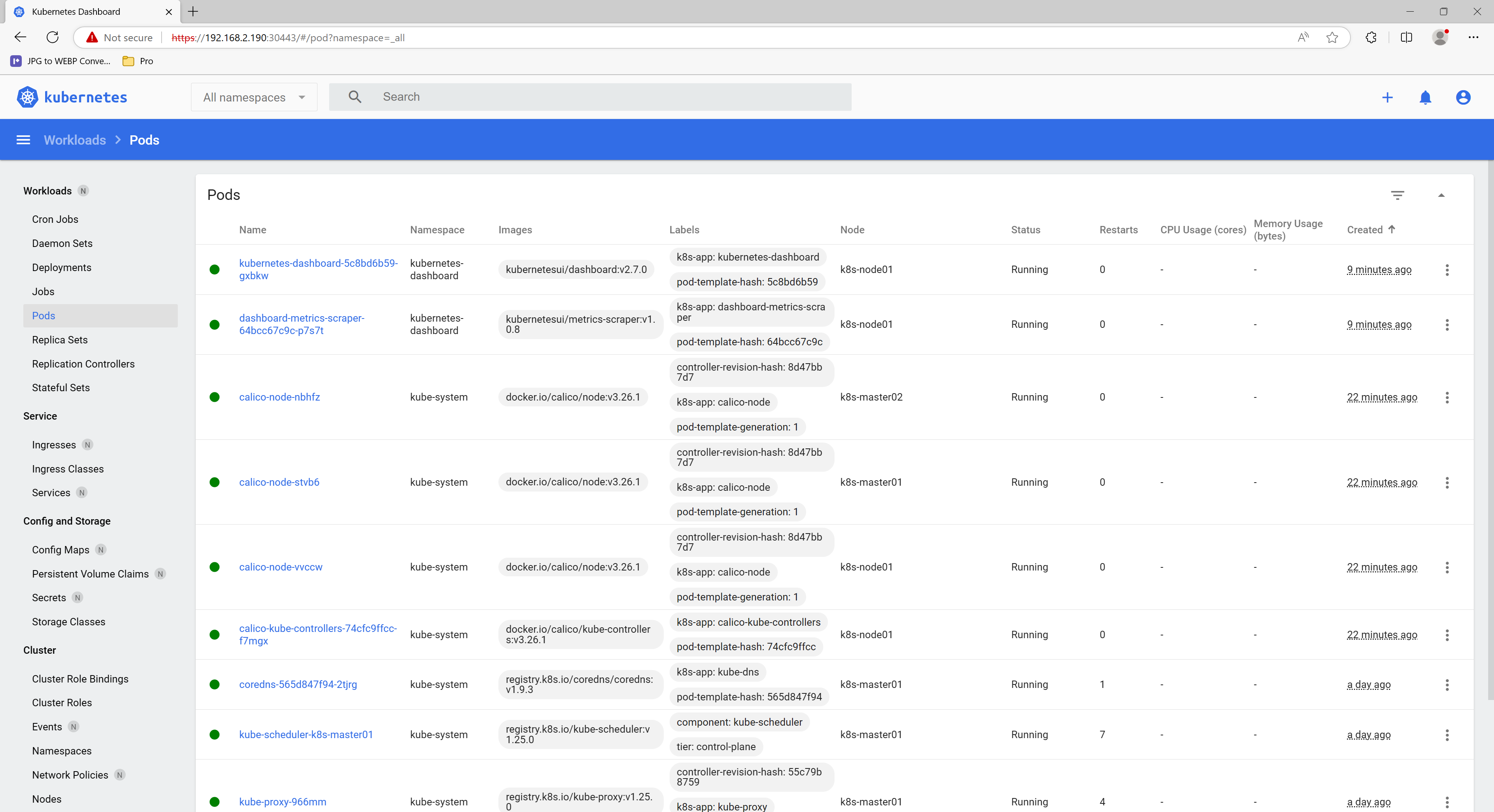

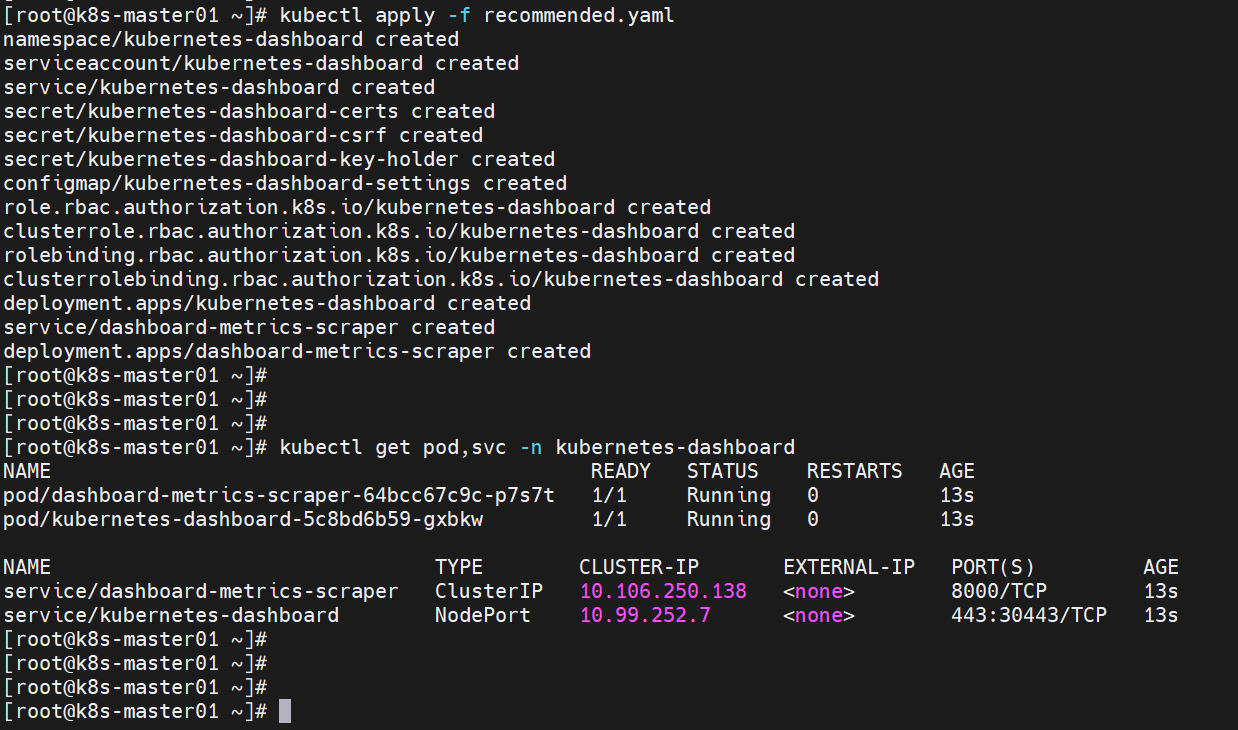
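The lookup above resolves the short name `kubernetes` to `kubernetes.default.svc.cluster.local` through the search domains in the pod's `/etc/resolv.conf`, assuming the test pod runs in the default namespace. For a check that can run unattended, a sketch along these lines may help; `dns_lookup_ok` is a hypothetical helper that inspects busybox `nslookup` output on stdin:

```shell
# Sketch only: succeed if busybox nslookup output contains an address
# for the name passed as $1. The helper name is made up for this example.
dns_lookup_ok() {
  awk -v name="$1" '
    $1 == "Name:" && $2 == name { found = 1; next }
    found && $1 == "Address"    { ok = 1 }
    END { exit (ok ? 0 : 1) }
  '
}

# Typical use on a master (one-shot pod, removed afterwards):
#   kubectl run dns-test --rm -i --restart=Never --image=busybox:1.28.4 \
#     -- nslookup kubernetes | dns_lookup_ok kubernetes && echo "DNS OK"
```

Only address lines that follow the matching `Name:` line count, so the DNS server's own address at the top of the output does not produce a false positive.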

8. Deploy the Kubernetes Dashboard

Deploy the Dashboard

Dashboard is the official web UI and can be used for basic management of k8s resources.

kubectl apply -f recommended.yaml

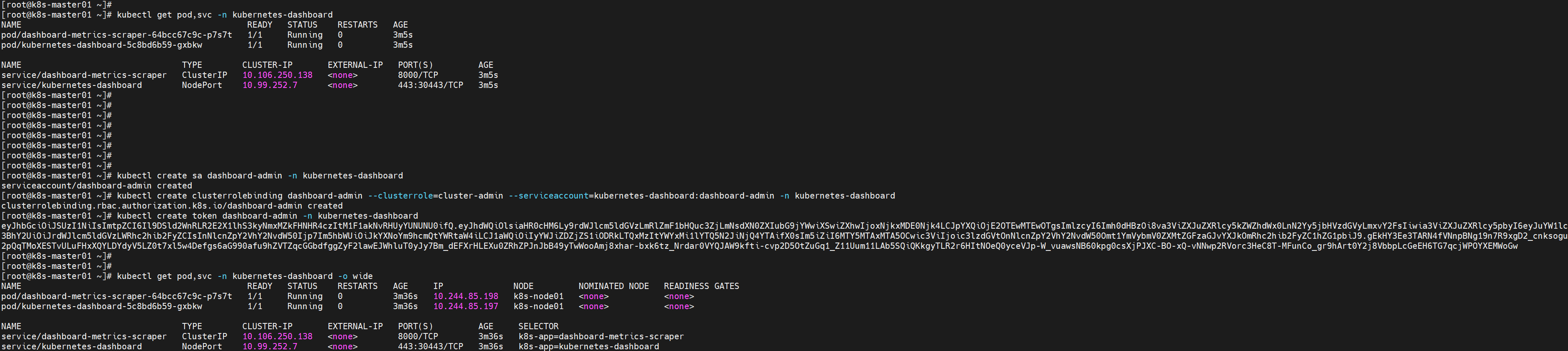

Create a service account

Create a service account and bind it to the built-in cluster-admin cluster role:

kubectl create sa dashboard-admin -n kubernetes-dashboard

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kubernetes-dashboard:dashboard-admin -n kubernetes-dashboard

kubectl create token dashboard-admin -n kubernetes-dashboard

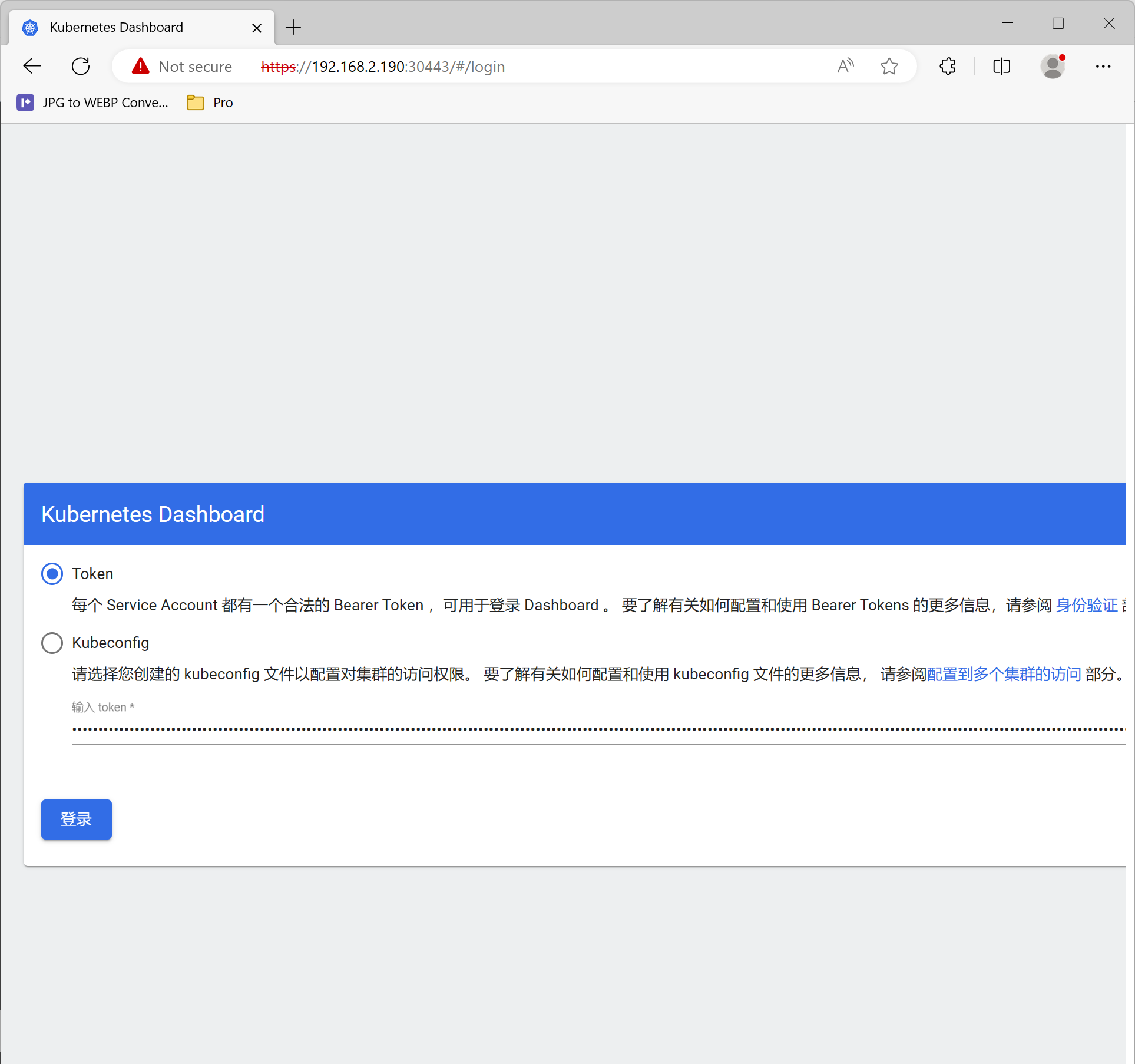
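`kubectl create token` prints a time-limited service account token in JWT format. If the Dashboard login stops working later, the token has probably expired and a new one can be issued with the same command (the `--duration` flag requests a different lifetime, which the API server may adjust). A minimal sketch for inspecting a token's claims such as `exp`, with `jwt_payload` as a hypothetical helper name:

```shell
# Sketch only: print the JSON payload (second segment) of a JWT.
# The helper name is made up; the signature is not verified.
jwt_payload() {
  local seg
  # base64url uses '-' and '_' where standard base64 uses '+' and '/'.
  seg="$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')"
  # Restore the '=' padding that base64url encoding strips.
  case $(( ${#seg} % 4 )) in
    2) seg="${seg}==" ;;
    3) seg="${seg}=" ;;
  esac
  printf '%s' "$seg" | base64 -d
}

# Typical use on a master:
#   jwt_payload "$(kubectl create token dashboard-admin -n kubernetes-dashboard)"
```

The `exp` claim in the output is a Unix timestamp; `date -d @<exp>` converts it to a readable time on CentOS.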

Log in with the token

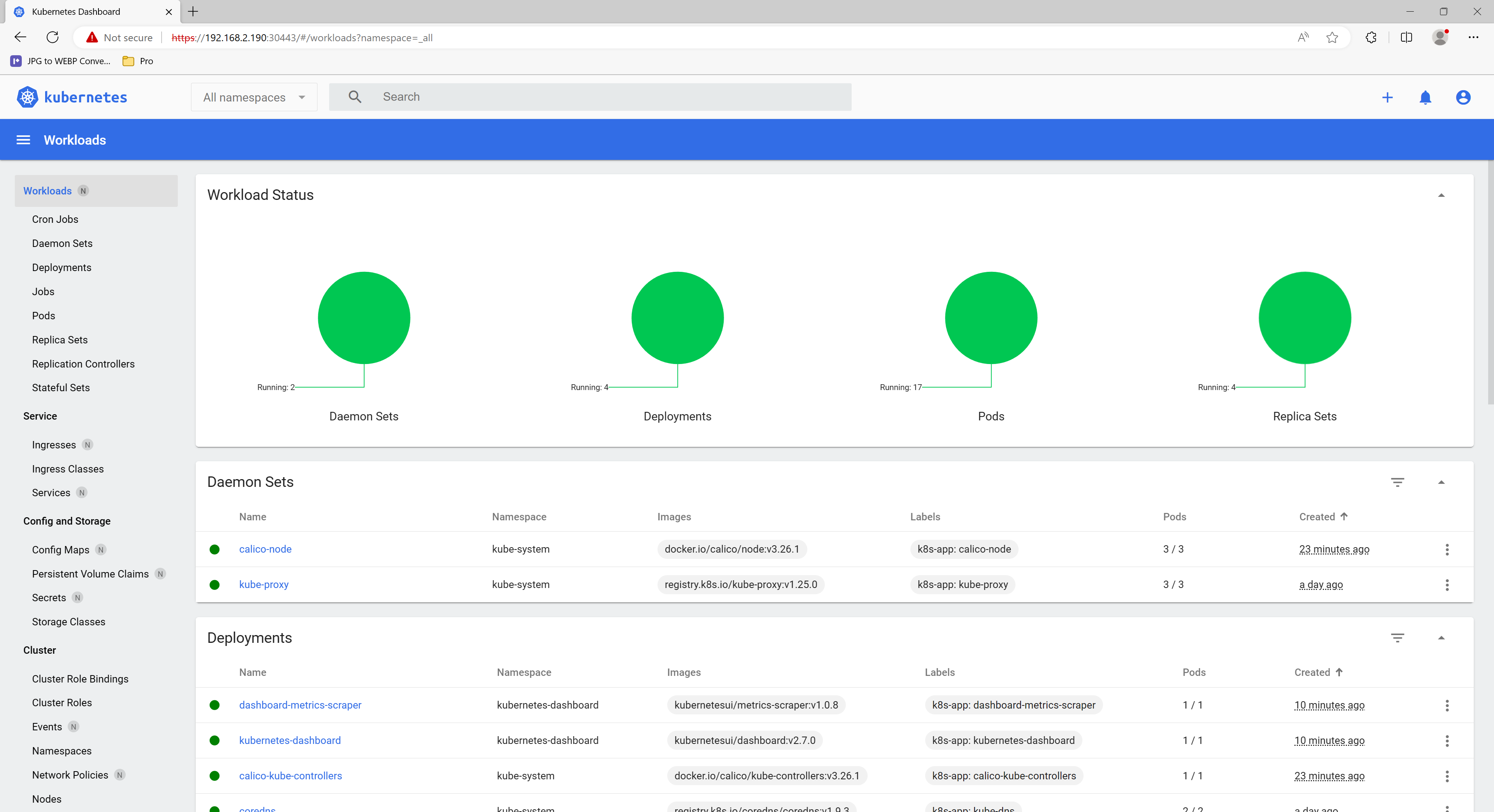

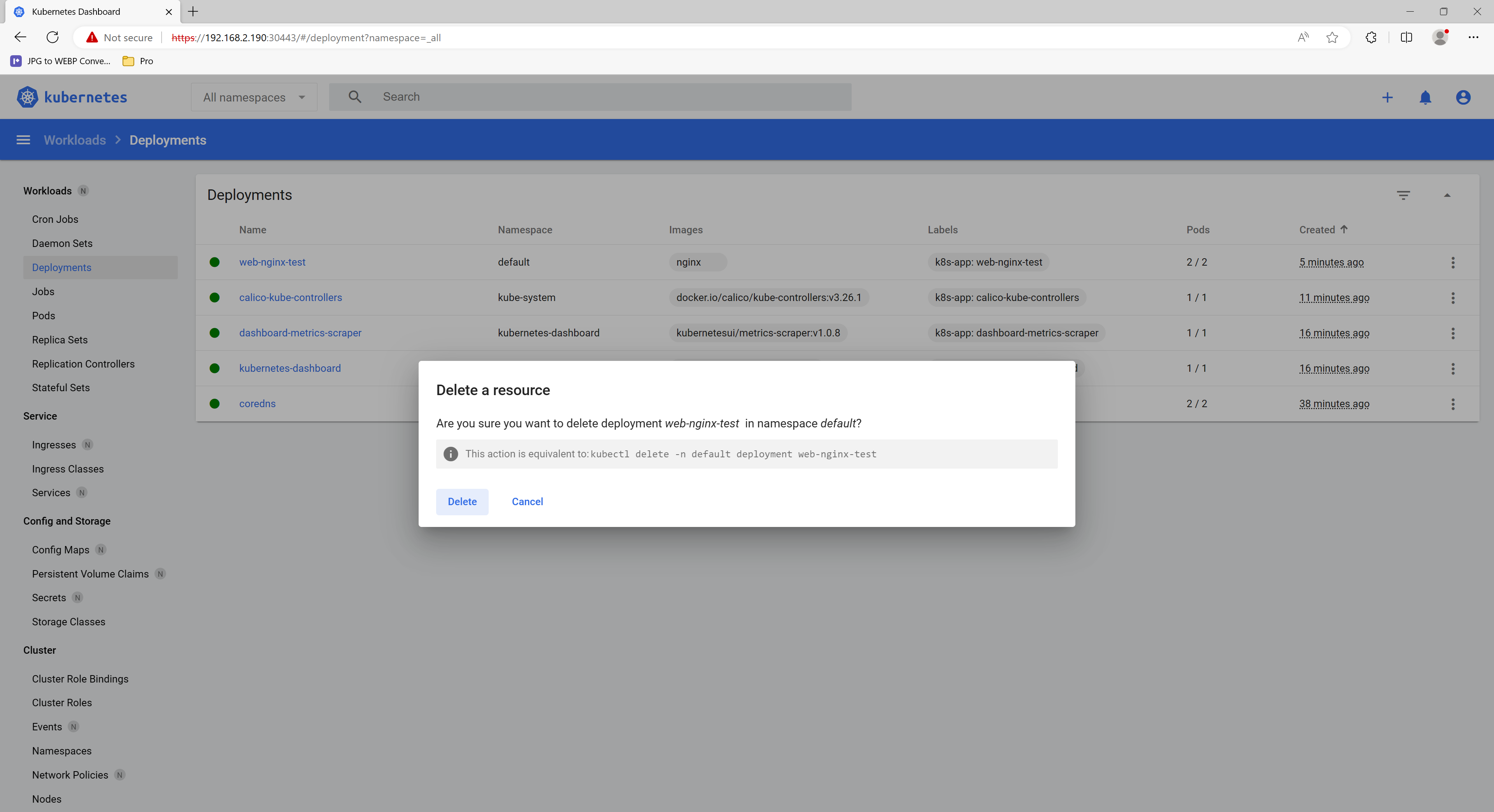

Workloads

Click Workloads to see the overview

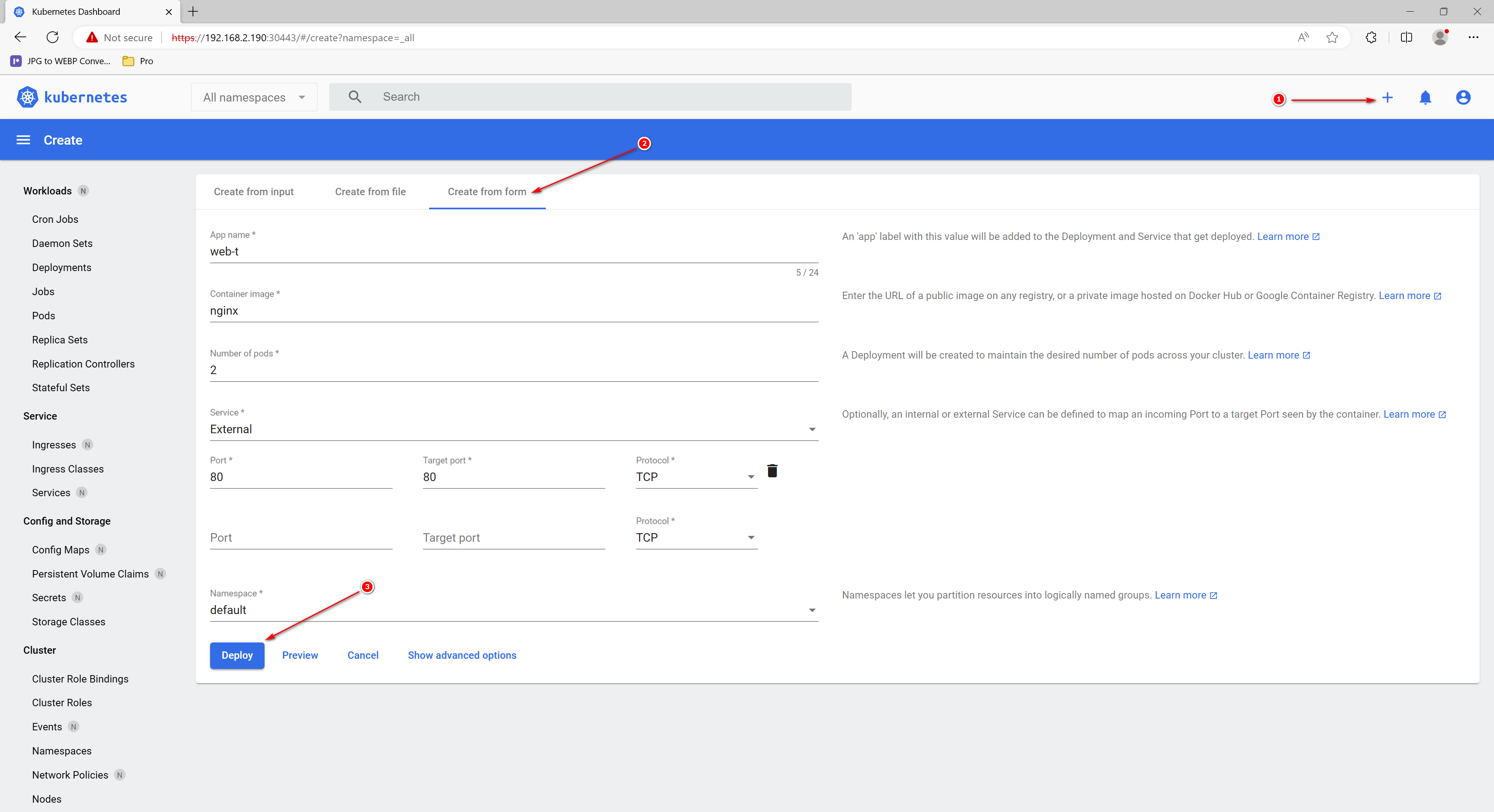

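Create workloads

Click the + sign on the right, then click "Create from form" to create a deployment. An "app" label with the value you enter is added to the Deployment and Service that get deployed.

Check workload status

All green means the deployment, pod, and svc were created successfully.

View the pod and svc

To clean up the test, click the "more" menu on the right; the Dashboard shows the equivalent command-line operation, "kubectl delete -n default deployment web-t".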

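Original-content statement: this article is published on the Tencent Cloud Developer Community with the author's authorization and may not be reproduced without permission.

In case of infringement, contact cloudcommunity@tencent.com for removal.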
