Playing with K8s on KubeSphere, Season 2 | openEuler 22.03: Installing K8s v1.24 with KubeSphere, a Hands-on Introduction


Hello everyone, and welcome to 运维有术.

Welcome to Season 2 of "Playing with K8s on KubeSphere", part of our cloud-native operations hands-on series.

Preface

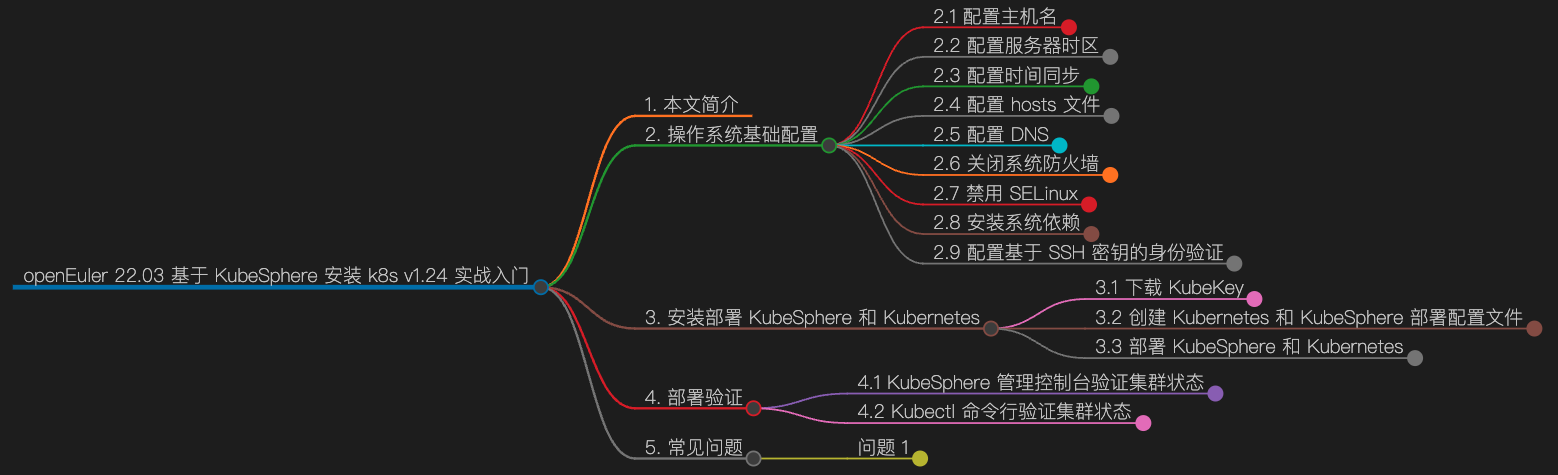

Mind map

Content at a glance

- Reading time: 20 minutes

- Lines: 928

- Words: 6000+

- Characters: 44900+

- Images: 10

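Topics

- Level: beginner

- Installing and deploying KubeSphere and Kubernetes with KubeKey

- Basic operations in the KubeSphere console

- Basic Kubernetes operations

- Basic configuration of the openEuler operating system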

Lab server configuration (the architecture replicates a small production environment 1:1, with slightly different specs)

| Hostname | IP | CPU (cores) | Memory (GB) | System disk (GB) | Data disk (GB) | Role |
|---|---|---|---|---|---|---|
| ks-master-0 | 192.168.9.91 | 2 | 4 | 50 | 100 | KubeSphere/k8s-master |
| ks-master-1 | 192.168.9.92 | 2 | 4 | 50 | 100 | KubeSphere/k8s-master |
| ks-master-2 | 192.168.9.93 | 2 | 4 | 50 | 100 | KubeSphere/k8s-master |
| ks-worker-0 | 192.168.9.95 | 2 | 4 | 50 | 100 | k8s-worker/CI |
| ks-worker-1 | 192.168.9.96 | 2 | 4 | 50 | 100 | k8s-worker |
| ks-worker-2 | 192.168.9.97 | 2 | 4 | 50 | 100 | k8s-worker |
| storage-0 | 192.168.9.81 | 2 | 4 | 50 | 100+ | ElasticSearch/GlusterFS/Ceph/Longhorn/NFS |
| storage-1 | 192.168.9.82 | 2 | 4 | 50 | 100+ | ElasticSearch/GlusterFS/Ceph/Longhorn |
| storage-2 | 192.168.9.83 | 2 | 4 | 50 | 100+ | ElasticSearch/GlusterFS/Ceph/Longhorn |
| registry | 192.168.9.80 | 2 | 4 | 50 | 200 | Sonatype Nexus 3 |
| Total | 10 hosts | 20 | 40 | 500 | 1100+ | |

Software versions used in the lab

- 操作系统:openEuler 22.03 LTS SP2 x86_64

- KubeSphere:v3.3.2

- Kubernetes:v1.24.12

- Containerd:1.6.4

- KubeKey: v3.0.8

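1. Introduction

This article is an updated version of our guide to installing Kubernetes with KubeSphere on openEuler 22.03 LTS SP2.

Why it changed and what changed:

- In later hands-on training we found that Kubernetes v1.26 is too new to support GlusterFS as backend storage. The last release series that supports it is v1.25.

- KubeKey has been updated, and the official v3.0.8 release supports more Kubernetes versions.

- Weighing these factors, we updated the series to Kubernetes v1.24.12 and KubeKey v3.0.8.

- The document structure is the same as in the previous issue. Only some details differ.

We will use KubeKey, a tool developed by KubeSphere, to automate the deployment. The setup simulates a small real-world production environment and deploys a highly available Kubernetes cluster and KubeSphere on 6 servers.

We provide detailed deployment instructions so that readers can easily complete, and master, a hands-on deployment of KubeSphere and a Kubernetes cluster.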

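2. Basic operating system configuration

To stay close to production, this series uses an architecture of 3 master nodes and 3 worker nodes. A later article simulates adding nodes to the cluster, so the initial deployment uses only 3 masters and 1 worker. Adjust this to your own situation in practice.

Note: unless stated otherwise, run the following steps on every openEuler server. This article demonstrates on the master-0 node only and assumes the other servers are configured in the same way.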

2.1 Configure the hostname

hostnamectl hostname ks-master-0

Note: set the matching hostname from the table on each node. Worker nodes use the hostname prefix ks-worker-.
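If you prefer to paste one identical snippet on every node, you can capture the planned IP-to-hostname mapping from the server table in a small helper. This is a minimal sketch. The function name hostname_for_ip is hypothetical, and it only knows the addresses listed in the table.

```shell
#!/bin/sh
# Hypothetical helper: map a planned IP (from the server table) to its hostname.
# Prints the hostname and returns 0, or prints nothing and returns 1.
hostname_for_ip() {
  case "$1" in
    192.168.9.91) echo ks-master-0 ;;
    192.168.9.92) echo ks-master-1 ;;
    192.168.9.93) echo ks-master-2 ;;
    192.168.9.95) echo ks-worker-0 ;;
    192.168.9.96) echo ks-worker-1 ;;
    192.168.9.97) echo ks-worker-2 ;;
    *) return 1 ;;  # IP not in the plan
  esac
}
```

Here is a usage example for the node whose planned IP is 192.168.9.91: `name=$(hostname_for_ip 192.168.9.91) && hostnamectl hostname "$name"`. The `&&` keeps hostnamectl from running with an empty name when the IP is not in the plan.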

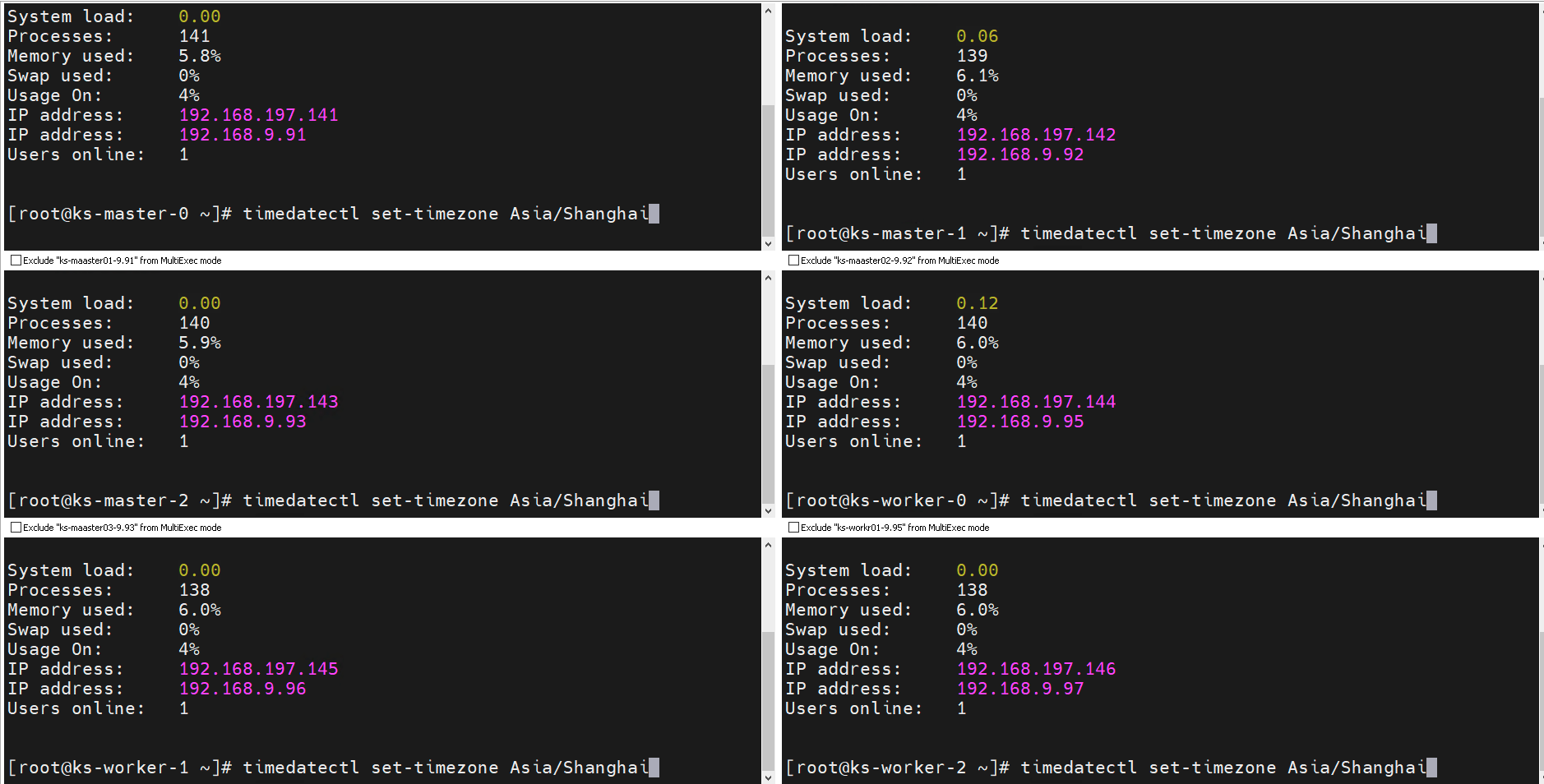

2.2 Configure the server time zone

Set the server time zone to Asia/Shanghai.

timedatectl set-timezone Asia/Shanghai

Verify the time zone. A correct configuration looks like this:

[root@MiWiFi-RA67-srv ~]# timedatectl

Local time: Tue 2023-07-18 10:47:42 CST

Universal time: Tue 2023-07-18 02:47:42 UTC

RTC time: Tue 2023-07-18 02:47:42

Time zone: Asia/Shanghai (CST, +0800)

System clock synchronized: yes

NTP service: active

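RTC in local TZ: no

Tip: to run the same command on several machines, use your terminal tool's multi-window (broadcast input) feature to save time. You can also use Ansible, as introduced in Season 1. This season drops Ansible to accommodate complete beginners, so every step is done by hand.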
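The tip above can also be scripted as a plain SSH loop. This is a minimal sketch that assumes root can log in to each planned IP. Until section 2.9 is done, ssh asks for each node's password. The function run_on_all and the NODES list are illustrative and not part of any tool. The loop uses IPs because /etc/hosts is only configured in section 2.4.

```shell
#!/bin/sh
# Illustrative node list: the 3 masters and 1 worker deployed initially.
NODES="192.168.9.91 192.168.9.92 192.168.9.93 192.168.9.95"

# Run the given command on every node in NODES over SSH, one node at a time.
# SSH defaults to "ssh"; set SSH=echo to print the commands instead (dry run).
run_on_all() {
  for node in $NODES; do
    echo "==> $node"
    ${SSH:-ssh} "root@$node" "$@"
  done
}
```

Usage example: `run_on_all timedatectl set-timezone Asia/Shanghai`. The loop continues when one node fails, so read the output for each `==>` section.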

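2.3 Configure time synchronization

Install chrony for time synchronization.

yum install chrony

Edit the configuration file /etc/chrony.conf and change the NTP server settings.

vi /etc/chrony.conf

# Delete all existing pool entries

pool pool.ntp.org iburst

# Add an NTP pool in China, or specify another commonly used time server

pool cn.pool.ntp.org iburst

# Instead of editing by hand, you can make the same change with sed

sed -i 's/^pool pool.*/pool cn.pool.ntp.org iburst/g' /etc/chrony.conf

Restart the chrony service and enable it at boot.

systemctl restart chronyd && systemctl enable chronyd

Verify the chrony synchronization status.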

# Run the status command

chronyc sourcestats -v

# Normal output looks like this

[root@MiWiFi-RA67-srv ~]# chronyc sourcestats -v

.- Number of sample points in measurement set.

/ .- Number of residual runs with same sign.

| / .- Length of measurement set (time).

| | / .- Est. clock freq error (ppm).

| | | / .- Est. error in freq.

| | | | / .- Est. offset.

| | | | | | On the -.

| | | | | | samples. \

| | | | | | |

Name/IP Address NP NR Span Frequency Freq Skew Offset Std Dev

==============================================================================

ntp6.flashdance.cx 4 3 7 -1139.751 120420 +29ms 18ms

electrode.felixc.at 4 3 8 -880.764 10406.110 -18ms 1576us

ntp8.flashdance.cx 4 3 7 -3806.718 40650.566 -16ms 6936us

time.cloudflare.com 4 3 7 +346.154 30670.580 +30ms 4386us

2.4 Configure the hosts file

Edit /etc/hosts and add the planned server IPs and hostnames.
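Instead of editing the file by hand with the entries listed next, you can append them with a small script that skips any hostname already present, so running it twice does no harm. This is only a sketch. add_host_entries is a hypothetical name, and its entry list mirrors the four nodes deployed initially.

```shell
#!/bin/sh
# Hypothetical helper: append the planned entries to the hosts file given as $1,
# skipping any hostname that already appears as a whole word after an IP.
add_host_entries() {
  hosts_file=$1
  while read -r ip name; do
    [ -n "$ip" ] || continue
    if grep -Eq "[[:space:]]$name([[:space:]]|\$)" "$hosts_file"; then
      echo "skip: $name already present"
    else
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done <<EOF
192.168.9.91 ks-master-0
192.168.9.92 ks-master-1
192.168.9.93 ks-master-2
192.168.9.95 ks-worker-0
EOF
}
```

Usage example: `add_host_entries /etc/hosts`. Later, when more nodes join, extend the list inside the heredoc.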

192.168.9.91 ks-master-0

192.168.9.92 ks-master-1

192.168.9.93 ks-master-2

192.168.9.95 ks-worker-0

2.5 Configure DNS

echo "nameserver 114.114.114.114" > /etc/resolv.conf

2.6 Disable the system firewall

systemctl stop firewalld && systemctl disable firewalld

2.7 Disable SELinux

A minimal installation of openEuler 22.03 SP2 enables SELinux by default. To avoid trouble, we disable SELinux on all nodes.
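To check the result before and after the change below, this small sketch prints the mode set in the configuration file next to the runtime mode reported by getenforce. The helper names are hypothetical. The configured value only takes effect after a reboot, so the two values can legitimately differ until then.

```shell
#!/bin/sh
# Print the value of the last "SELINUX=" line in the config file
# (default /etc/selinux/config). SELINUXTYPE= lines and comments are ignored.
selinux_config_mode() {
  sed -n 's/^SELINUX=//p' "${1:-/etc/selinux/config}" | tail -n 1
}

# Show the configured mode and, if getenforce is installed, the runtime mode.
selinux_report() {
  echo "configured: $(selinux_config_mode "$1")"
  if command -v getenforce >/dev/null 2>&1; then
    echo "runtime:    $(getenforce)"
  fi
}
```

Usage example: run `selinux_report` with no arguments to read /etc/selinux/config.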

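# Edit the configuration file with sed to disable SELinux permanently (takes effect after a reboot)

sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

# Switch to permissive mode for the current boot. This step is optional, because KubeKey configures it automatically

setenforce 0

2.8 Install system dependencies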

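On every node, log in as root and run the following commands to install the basic system dependencies for Kubernetes.

# Install the system dependencies for Kubernetes

yum install curl socat conntrack ebtables ipset ipvsadm

# Install other required packages. Surprisingly, openEuler does not install tar by default, and later steps fail without it

yum install tar

2.9 Configure SSH key-based authentication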

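When KubeKey automatically deploys KubeSphere and Kubernetes, it can authenticate to the remote servers with either a password or a key. This article uses both methods in the same configuration, so the deployment user root needs passwordless SSH authentication.

Note: this subsection is optional. If you authenticate to the servers with passwords only, you can skip it.

This article uses the master-0 node as the deployment node. The following steps only need to be run on master-0.

Log in as root and generate a new SSH key pair with ssh-keygen. When the command completes, the SSH public and private keys are stored in /root/.ssh.

ssh-keygen -t ed25519

The output looks like this: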

[root@ks-master-0 ~]# ssh-keygen -t ed25519

Generating public/private ed25519 key pair.

Enter file in which to save the key (/root/.ssh/id_ed25519):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_ed25519

Your public key has been saved in /root/.ssh/id_ed25519.pub

The key fingerprint is:

SHA256:1PjoUaqv7wrIKwQPvW6FiixzkB9ozv+vhIaYZUBtWrY root@MiWiFi-RA67-srv

The key's randomart image is:

+--[ED25519 256]--+

| .. |

|. = o |

|..= . o o |

|oo.E . = |

|.=oo S . |

|=B*.o o . |

|X*o=... . |

|*+*... . |

|.=o..o=*+ |

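+----[SHA256]-----+

Next, run the following commands to send the SSH public key from master-0 to every node, including master-0 itself. When prompted, type yes to accept the server's SSH fingerprint, then enter the root password.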

ssh-copy-id root@ks-master-0

ssh-copy-id root@ks-master-1

ssh-copy-id root@ks-master-2

ssh-copy-id root@ks-worker-0

This is the correct output when copying the key:

[root@ks-master-0 ~]# ssh-copy-id root@ks-master-0

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_ed25519.pub"

The authenticity of host 'ks-master-0 (192.168.9.91)' can't be established.

ED25519 key fingerprint is SHA256:46y96KewahNGKNhbrGWPkPW8Y662PIGQ4rIEw4SUwGE.

This key is not known by any other names

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

Authorized users only. All activities may be monitored and reported.

root@ks-master-0's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@ks-master-0'"

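and check to make sure that only the key(s) you wanted were added.

After adding and uploading the SSH public key, run the following command to verify that you can connect to each server as root without a password.

[root@ks-master-0 ~]# ssh root@ks-master-0

# Login output omitted

3. Install and deploy KubeSphere and Kubernetes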

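3.1 Download KubeKey

This article uses master-0 as the deployment node and downloads the latest KubeKey (hereafter kk) binary, v3.0.8, to that server. The kk releases page lists the available kk versions.

- Download the latest KubeKey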

cd ~

mkdir kubekey

cd kubekey/

# Use the China download zone (when access to GitHub is restricted)

export KKZONE=cn

curl -sfL https://get-kk.kubesphere.io | sh -

# Alternatively, specify an exact version with the following command

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.8 sh -

# Correct output looks like this

[root@ks-master-0 ~]# cd ~

[root@ks-master-0 ~]# mkdir kubekey

[root@ks-master-0 ~]# cd kubekey/

[root@ks-master-0 kubekey]# export KKZONE=cn

[root@ks-master-0 kubekey]# curl -sfL https://get-kk.kubesphere.io | sh -

Downloading kubekey v3.0.8 from https://kubernetes.pek3b.qingstor.com/kubekey/releases/download/v3.0.8/kubekey-v3.0.8-linux-amd64.tar.gz ...

Kubekey v3.0.8 Download Complete!

[root@ks-master-0 kubekey]# ll

total 112048

-rwxr-xr-x. 1 root root 78944436 Jul 14 09:17 kk

-rw-r--r--. 1 root root 35788724 Jul 18 11:01 kubekey-v3.0.8-linux-amd64.tar.gz

- List the Kubernetes versions supported by KubeKey

./kk version --show-supported-k8s

[root@ks-master-0 kubekey]# ./kk version --show-supported-k8s

v1.19.0

v1.19.8

v1.19.9

v1.19.15

v1.20.4

v1.20.6

v1.20.10

v1.21.0

v1.21.1

v1.21.2

v1.21.3

v1.21.4

v1.21.5

v1.21.6

v1.21.7

v1.21.8

v1.21.9

v1.21.10

v1.21.11

v1.21.12

v1.21.13

v1.21.14

v1.22.0

v1.22.1

v1.22.2

v1.22.3

v1.22.4

v1.22.5

v1.22.6

v1.22.7

v1.22.8

v1.22.9

v1.22.10

v1.22.11

v1.22.12

v1.22.13

v1.22.14

v1.22.15

v1.22.16

v1.22.17

v1.23.0

v1.23.1

v1.23.2

v1.23.3

v1.23.4

v1.23.5

v1.23.6

v1.23.7

v1.23.8

v1.23.9

v1.23.10

v1.23.11

v1.23.12

v1.23.13

v1.23.14

v1.23.15

v1.23.16

v1.23.17

v1.24.0

v1.24.1

v1.24.2

v1.24.3

v1.24.4

v1.24.5

v1.24.6

v1.24.7

v1.24.8

v1.24.9

v1.24.10

v1.24.11

v1.24.12

v1.24.13

v1.24.14

v1.25.0

v1.25.1

v1.25.2

v1.25.3

v1.25.4

v1.25.5

v1.25.6

v1.25.7

v1.25.8

v1.25.9

v1.25.10

v1.26.0

v1.26.1

v1.26.2

v1.26.3

v1.26.4

v1.26.5

v1.27.0

v1.27.1

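v1.27.2

Note: this output lists the versions kk supports. It does not guarantee that KubeSphere and the rest of the Kubernetes ecosystem support them equally well. Later labs also cover version upgrades, so we chose the relatively conservative v1.24.12.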
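To check a chosen version against this list in a script rather than by eye, you can pipe the output through grep. This is a minimal sketch. The function names are hypothetical, and it assumes the command prints one version per line, as shown above.

```shell
#!/bin/sh
# Succeed if the exact version string in $1 appears as a whole line on stdin.
is_supported_k8s() {
  grep -qxF "$1"
}

# Print the highest patch release listed on stdin for a minor version such as v1.24.
latest_patch() {
  grep "^$1\." | sort -V | tail -n 1
}
```

Usage example: `./kk version --show-supported-k8s | is_supported_k8s v1.24.12 && echo "v1.24.12 is supported"`. The `-x` and `-F` flags make the match exact, so v1.24.1 does not match v1.24.12.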

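3.2 Create the Kubernetes and KubeSphere deployment configuration file

Create the cluster configuration file. This example selects KubeSphere v3.3.2 and Kubernetes v1.24.12 and names the file kubesphere-v3.3.2.yaml. If you do not give a name, the default is config-sample.yaml.

./kk create config -f kubesphere-v3.3.2.yaml --with-kubernetes v1.24.12 --with-kubesphere v3.3.2

When the command succeeds, it generates a configuration file named kubesphere-v3.3.2.yaml in the current directory.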

[root@ks-master-0 kubekey]# ./kk create config -f kubesphere-v3.3.2.yaml --with-kubernetes v1.24.12 --with-kubesphere v3.3.2

Generate KubeKey config file successfully

[root@ks-master-0 kubekey]# ll

total 112056

-rwxr-xr-x. 1 root root 78944436 Jul 14 09:17 kk

-rw-r--r--. 1 root root 35788724 Jul 18 11:01 kubekey-v3.0.8-linux-amd64.tar.gz

-rw-r--r--. 1 root root 4681 Jul 18 11:07 kubesphere-v3.3.2.yaml

Note: the generated default configuration file is long, so it is not shown here. Feel free to inspect it yourself.

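This example uses 3 nodes as control-plane nodes and enables 1 of the planned worker nodes.

Edit kubesphere-v3.3.2.yaml and modify hosts, roleGroups, and other settings as described below.

- hosts: specifies each node's IP, SSH user, SSH password, and SSH key. The example shows password and key authentication used together

- roleGroups: assigns ks-master-0, ks-master-1, and ks-master-2 as the etcd and control-plane nodes, and ks-worker-0 as the worker node

- internalLoadbalancer: enables the built-in HAProxy load balancer

- domain: set to the custom domain lb.opsman.top (the cluster name is opsman.top)

- containerManager: uses containerd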
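When more nodes are added later, you can generate the hosts entries instead of typing them. This hypothetical helper assumes every node uses root and the ed25519 key from section 2.9; the example config below instead uses a password for ks-master-0. It reads "name ip" pairs on stdin and prints entries in the same flow style, indented for the hosts: list.

```shell
#!/bin/sh
# Hypothetical helper: turn "hostname ip" lines on stdin into KubeKey hosts entries.
# Assumes address == internalAddress, user root, and key-based login for every node.
kk_host_entries() {
  while read -r name ip; do
    [ -n "$name" ] || continue
    printf '  - {name: %s, address: %s, internalAddress: %s, user: root, privateKeyPath: "~/.ssh/id_ed25519"}\n' \
      "$name" "$ip" "$ip"
  done
}
```

Usage example: `printf 'ks-worker-1 192.168.9.96\nks-worker-2 192.168.9.97\n' | kk_host_entries`. Paste the output under `hosts:` and add each name to the matching roleGroups list.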

The modified example looks like this:

apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Cluster
metadata:
  name: sample
spec:
  hosts:
  - {name: ks-master-0, address: 192.168.9.91, internalAddress: 192.168.9.91, user: root, password: "P@88w0rd"}
  - {name: ks-master-1, address: 192.168.9.92, internalAddress: 192.168.9.92, user: root, privateKeyPath: "~/.ssh/id_ed25519"}
  - {name: ks-master-2, address: 192.168.9.93, internalAddress: 192.168.9.93, user: root, privateKeyPath: "~/.ssh/id_ed25519"}
  - {name: ks-worker-0, address: 192.168.9.95, internalAddress: 192.168.9.95, user: root, privateKeyPath: "~/.ssh/id_ed25519"}
  roleGroups:
    etcd:
    - ks-master-0
    - ks-master-1
    - ks-master-2
    control-plane:
    - ks-master-0
    - ks-master-1
    - ks-master-2
    worker:
    - ks-worker-0
  controlPlaneEndpoint:
    ## Internal loadbalancer for apiservers
    internalLoadbalancer: haproxy
    domain: lb.opsman.top
    address: ""
    port: 6443
  kubernetes:
    version: v1.24.12
    clusterName: opsman.top
    autoRenewCerts: true
    containerManager: containerd
  etcd:
    type: kubekey
  network:
    plugin: calico
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
    ## multus support. https://github.com/k8snetworkplumbingwg/multus-cni
    multusCNI:
      enabled: false
  registry:
    privateRegistry: ""
    namespaceOverride: ""
    registryMirrors: []
    insecureRegistries: []
  addons: []

3.3 Deploy KubeSphere and Kubernetes

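Next, run the following command to deploy KubeSphere and Kubernetes with the configuration file generated above.

./kk create cluster -f kubesphere-v3.3.2.yaml

kk first checks the dependencies and other requirements for deploying Kubernetes. Once the checks pass, it prompts you to confirm the installation. Type yes and press ENTER to continue.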

[root@ks-master-0 kubekey]# ./kk create cluster -f kubesphere-v3.3.2.yaml

_ __ _ _ __

| | / / | | | | / /

| |/ / _ _| |__ ___| |/ / ___ _ _

| \| | | | '_ \ / _ \ \ / _ \ | | |

| |\ \ |_| | |_) | __/ |\ \ __/ |_| |

\_| \_/\__,_|_.__/ \___\_| \_/\___|\__, |

__/ |

|___/

11:10:43 CST [GreetingsModule] Greetings

11:10:43 CST message: [ks-worker-0]

Greetings, KubeKey!

11:10:43 CST message: [ks-master-0]

Greetings, KubeKey!

11:10:44 CST message: [ks-master-1]

Greetings, KubeKey!

11:10:44 CST message: [ks-master-2]

Greetings, KubeKey!

11:10:44 CST success: [ks-worker-0]

11:10:44 CST success: [ks-master-0]

11:10:44 CST success: [ks-master-1]

11:10:44 CST success: [ks-master-2]

11:10:44 CST [NodePreCheckModule] A pre-check on nodes

11:10:46 CST success: [ks-master-0]

11:10:46 CST success: [ks-master-2]

11:10:46 CST success: [ks-master-1]

11:10:46 CST success: [ks-worker-0]

11:10:46 CST [ConfirmModule] Display confirmation form

+-------------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+

| name | sudo | curl | openssl | ebtables | socat | ipset | ipvsadm | conntrack | chrony | docker | containerd | nfs client | ceph client | glusterfs client | time |

+-------------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+

| ks-master-0 | y | y | y | y | y | y | y | y | y | | | | | | CST 11:10:46 |

| ks-master-1 | y | y | y | y | y | y | y | y | y | | | | | | CST 11:10:46 |

| ks-master-2 | y | y | y | y | y | y | y | y | y | | | | | | CST 11:10:46 |

| ks-worker-0 | y | y | y | y | y | y | y | y | y | | | | | | CST 11:10:46 |

+-------------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+

This is a simple check of your environment.

Before installation, ensure that your machines meet all requirements specified at

https://github.com/kubesphere/kubekey#requirements-and-recommendations

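Continue this installation? [yes/no]:

Note: the check results show that the three storage-related clients (nfs client, ceph client, and glusterfs client) are not installed. We will install them separately in later labs on integrating storage.

The installation log is long, so it is omitted here to save space.

Deployment takes roughly 10 to 30 minutes, depending on network speed and machine specs. This deployment took 22 minutes.

When deployment completes, you should see output similar to the following in the terminal. Along with the completion message, the output shows the default administrator user and password for logging in to KubeSphere.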

clusterconfiguration.installer.kubesphere.io/ks-installer created

11:25:03 CST skipped: [ks-master-2]

11:25:03 CST skipped: [ks-master-1]

11:25:03 CST success: [ks-master-0]

#####################################################

### Welcome to KubeSphere! ###

#####################################################

Console: http://192.168.9.91:30880

Account: admin

Password: P@88w0rd

NOTES:

1. After you log into the console, please check the

monitoring status of service components in

"Cluster Management". If any service is not

ready, please wait patiently until all components

are up and running.

2. Please change the default password after login.

#####################################################

https://kubesphere.io 2023-07-18 11:33:50

#####################################################

11:33:53 CST skipped: [ks-master-2]

11:33:53 CST skipped: [ks-master-1]

11:33:53 CST success: [ks-master-0]

11:33:53 CST Pipeline[CreateClusterPipeline] execute successfully

Installation is complete.

Please check the result using the command:

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f

4. Deployment verification

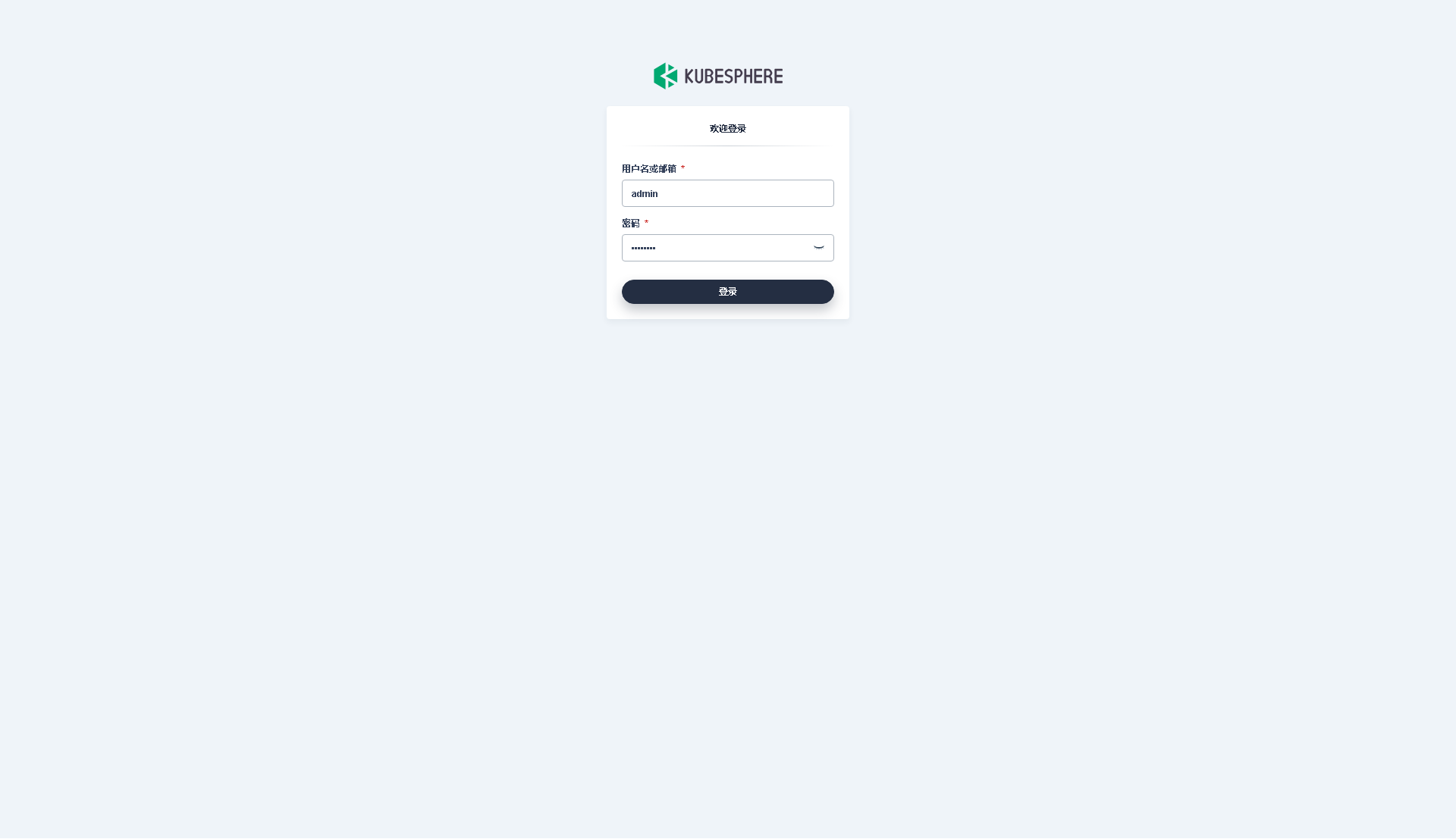

4.1 Verify the cluster status in the KubeSphere console

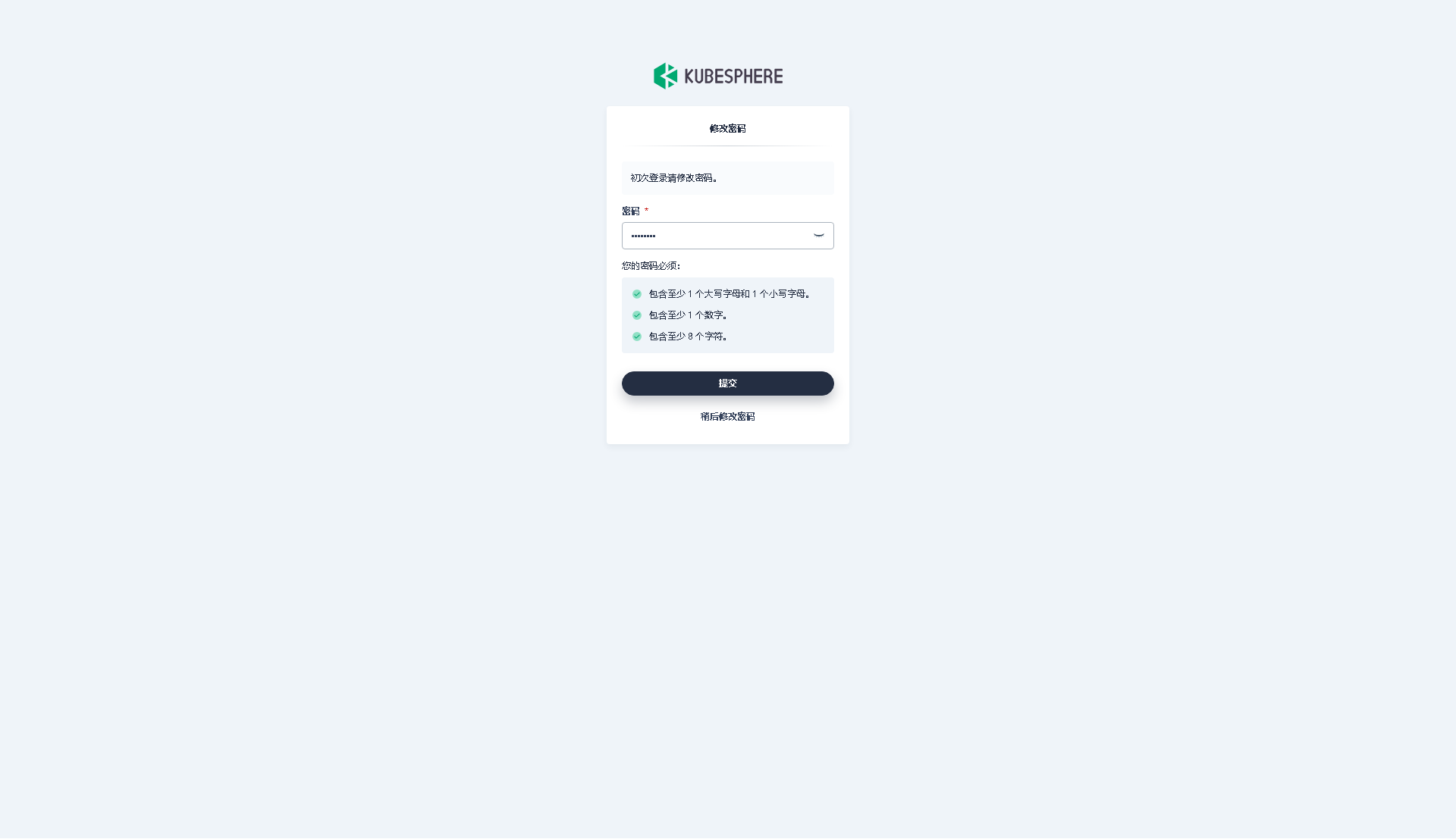

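Open a browser and go to port 30880 on the master-0 node's IP address. The KubeSphere console login page appears.

Enter the default user admin and the default password P@88w0rd, then click "Log In".

After logging in, you are asked to change the default password of the KubeSphere default user admin. Enter a new password and click "Submit".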

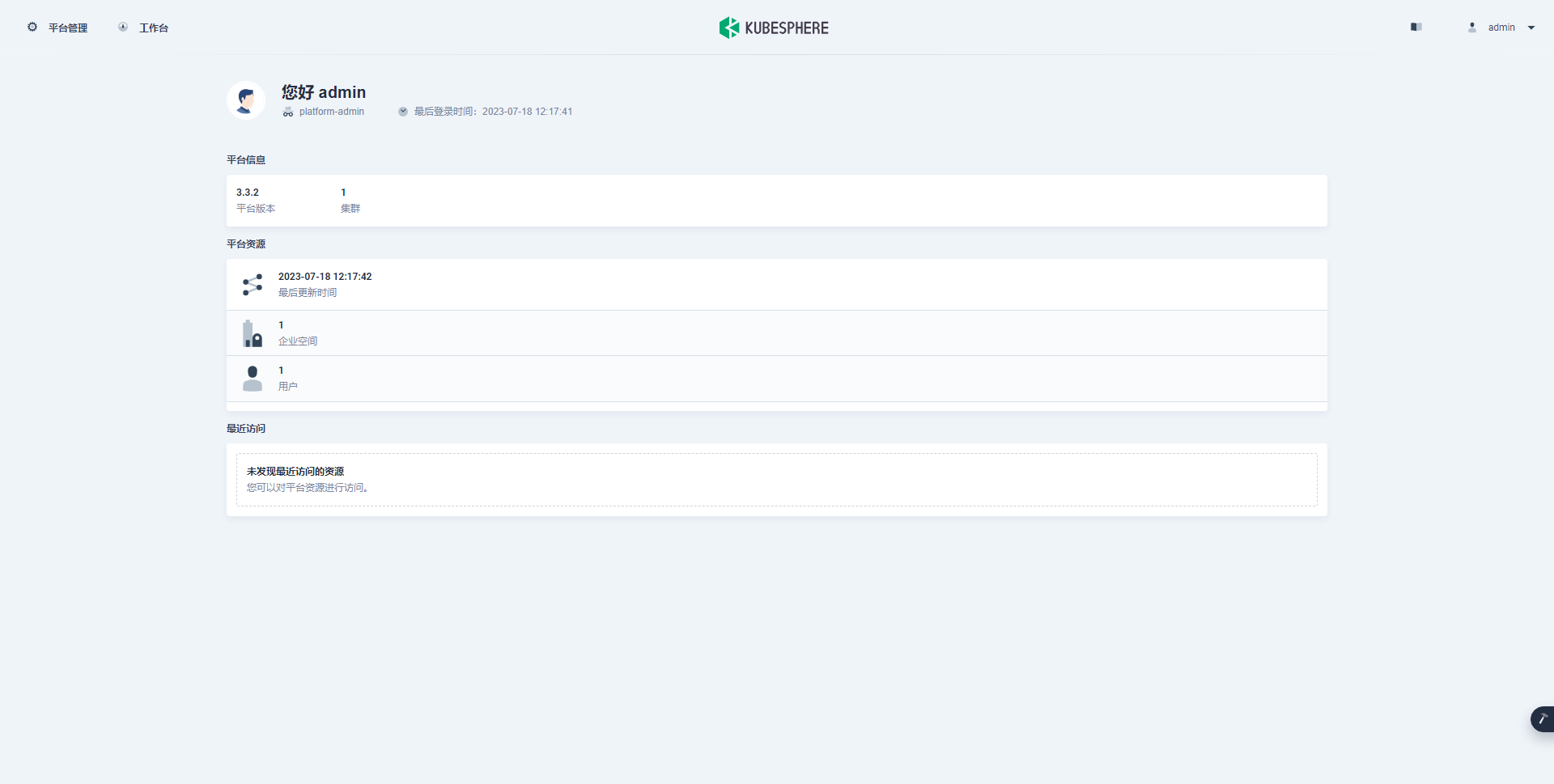

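After submitting, you are taken to the workbench of the KubeSphere admin user. It shows that the current KubeSphere version is v3.3.2 and that 1 Kubernetes cluster is available.

Next, click the "Platform" menu in the top-left corner and select "Cluster Management".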

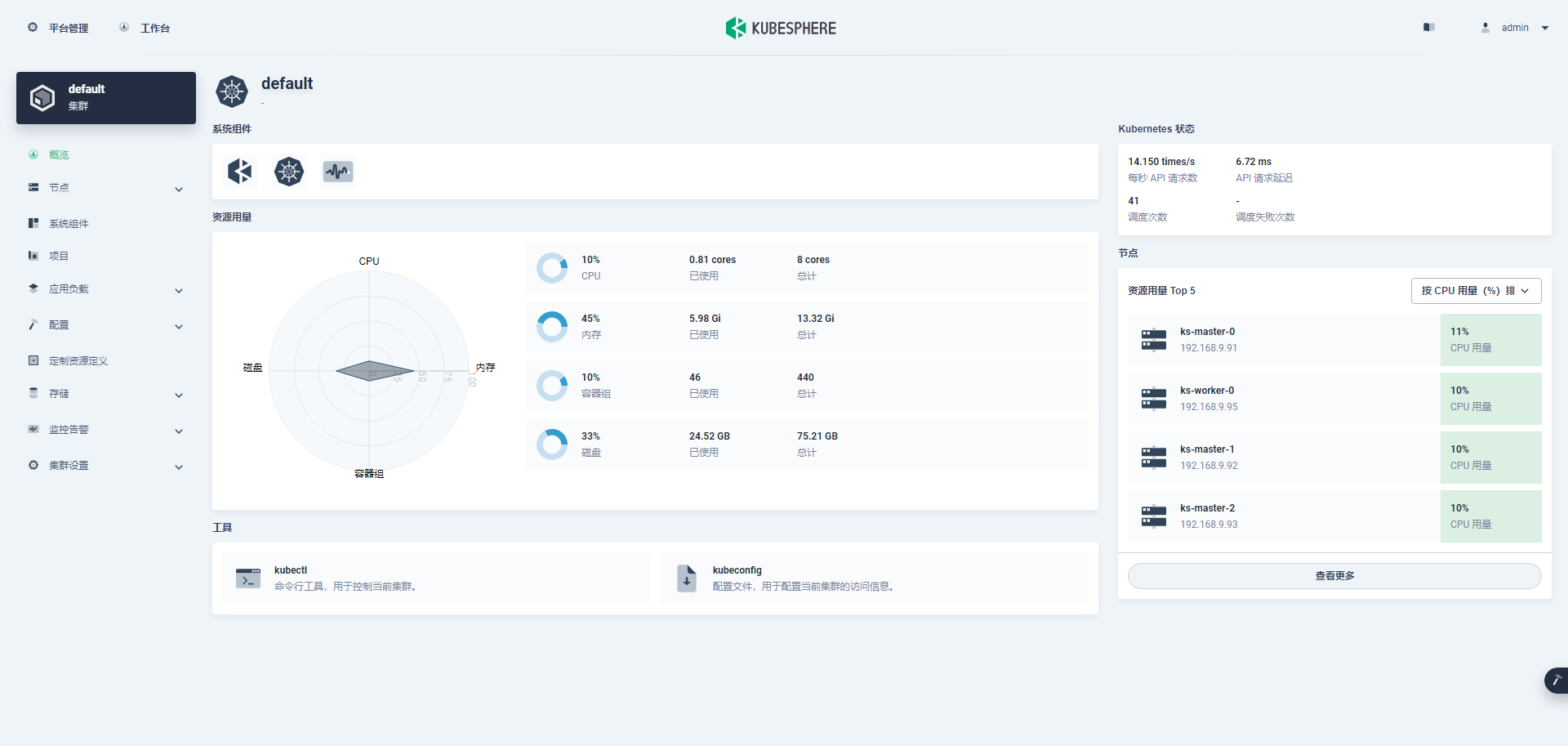

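The cluster management page shows basic information about the cluster, including cluster resource usage, Kubernetes status, top nodes by resource usage, system components, and the toolbox.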

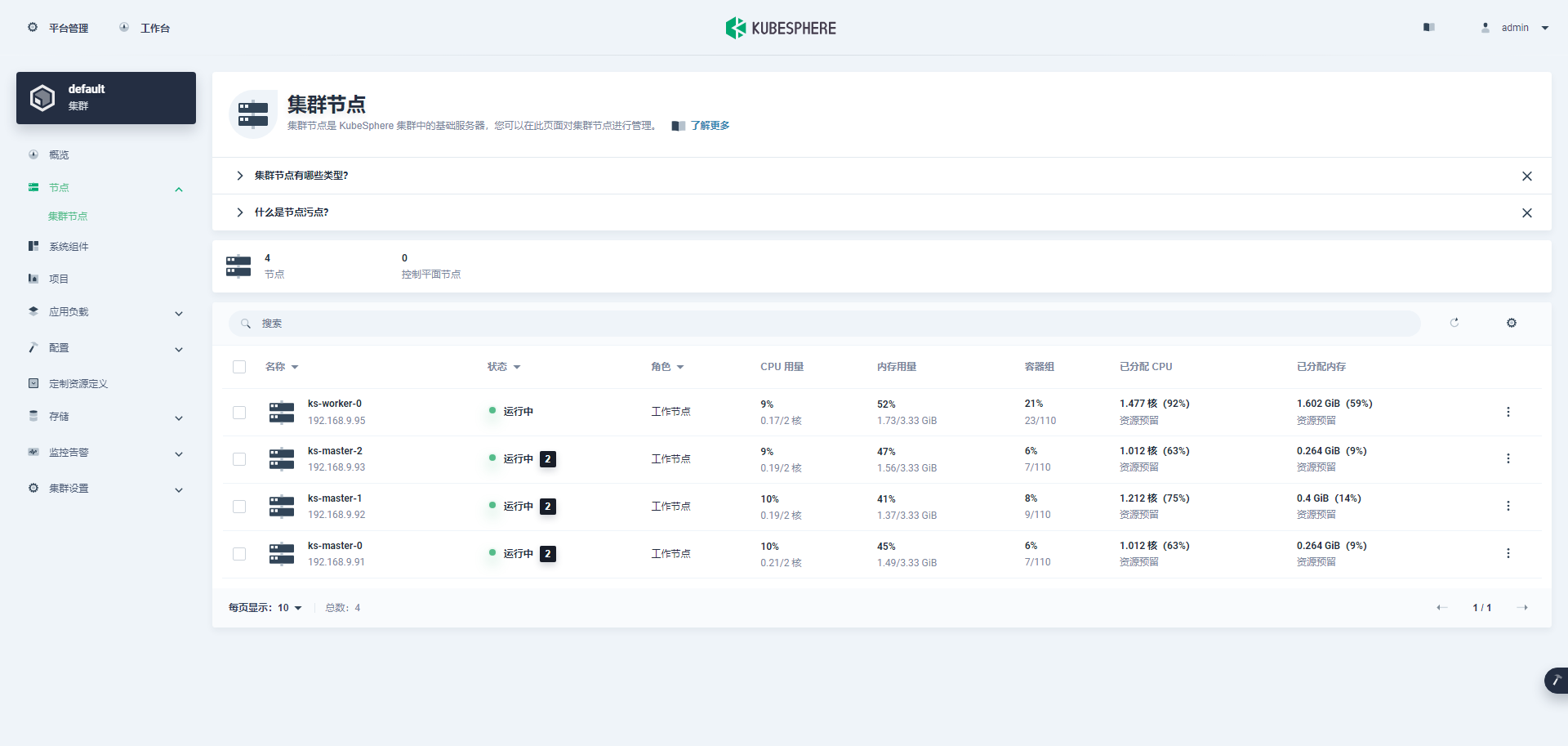

Click "Nodes" in the left menu, then "Cluster Nodes", to view details of the available nodes in the Kubernetes cluster.

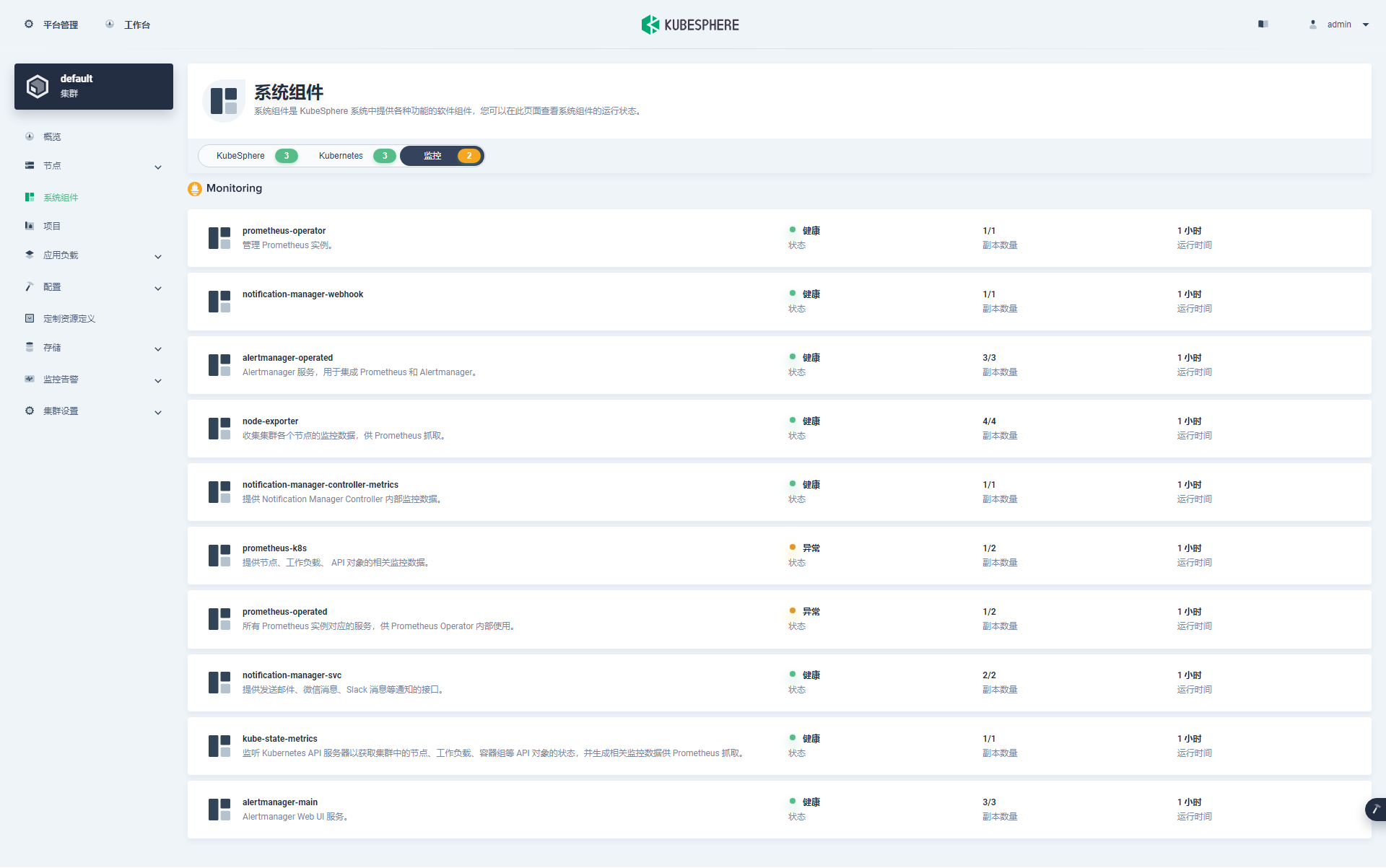

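Click "System Components" in the left menu to view details of the installed components. The cluster currently uses a minimal installation, so it includes only three categories of components: KubeSphere, Kubernetes, and Monitoring.

Special note: under Monitoring, the prometheus-k8s and prometheus-operated components are in an abnormal state. With only one worker node, the second replica, prometheus-k8s-1, cannot be scheduled. Recreating it after new nodes join the cluster resolves this.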

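4.2 Verify the cluster status with kubectl

- View cluster node information

On the master-0 node, run kubectl to list the available nodes in the Kubernetes cluster.

kubectl get nodes -o wide

The output shows that the Kubernetes cluster has four available nodes. It also shows each node's internal IP, role, Kubernetes version, container runtime and version, operating system, and kernel version.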

[root@ks-master-0 kubekey]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

ks-master-0 Ready control-plane 35m v1.24.12 192.168.9.91 <none> openEuler 22.03 (LTS-SP2) 5.10.0-153.12.0.92.oe2203sp2.x86_64 containerd://1.6.4

ks-master-1 Ready control-plane 35m v1.24.12 192.168.9.92 <none> openEuler 22.03 (LTS-SP2) 5.10.0-153.12.0.92.oe2203sp2.x86_64 containerd://1.6.4

ks-master-2 Ready control-plane 35m v1.24.12 192.168.9.93 <none> openEuler 22.03 (LTS-SP2) 5.10.0-153.12.0.92.oe2203sp2.x86_64 containerd://1.6.4

ks-worker-0 Ready worker 34m v1.24.12 192.168.9.95 <none> openEuler 22.03 (LTS-SP2) 5.10.0-153.12.0.92.oe2203sp2.x86_64 containerd://1.6.4

- View the Pod list

Run the following command to list the Pods running in the Kubernetes cluster, in the default order.

kubectl get pods -o wide -A

The output shows several namespaces in the cluster: kube-system, kubesphere-controls-system, kubesphere-monitoring-system, and kubesphere-system. All Pods are Running except prometheus-k8s-1, which is Pending.

[root@ks-master-0 kubekey]# kubectl get pods -o wide -A

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system calico-kube-controllers-f9f9bbcc9-9x49n 1/1 Running 0 34m 10.233.115.2 ks-worker-0 <none> <none>

kube-system calico-node-kvfbg 1/1 Running 0 34m 192.168.9.95 ks-worker-0 <none> <none>

kube-system calico-node-kx4fz 1/1 Running 0 34m 192.168.9.91 ks-master-0 <none> <none>

kube-system calico-node-qx5qk 1/1 Running 0 34m 192.168.9.92 ks-master-1 <none> <none>

kube-system calico-node-rb2cf 1/1 Running 0 34m 192.168.9.93 ks-master-2 <none> <none>

kube-system coredns-f657fccfd-8lnd5 1/1 Running 0 35m 10.233.103.2 ks-master-1 <none> <none>

kube-system coredns-f657fccfd-vtlmx 1/1 Running 0 35m 10.233.103.1 ks-master-1 <none> <none>

kube-system haproxy-ks-worker-0 1/1 Running 0 35m 192.168.9.95 ks-worker-0 <none> <none>

kube-system kube-apiserver-ks-master-0 1/1 Running 0 35m 192.168.9.91 ks-master-0 <none> <none>

kube-system kube-apiserver-ks-master-1 1/1 Running 0 35m 192.168.9.92 ks-master-1 <none> <none>

kube-system kube-apiserver-ks-master-2 1/1 Running 0 35m 192.168.9.93 ks-master-2 <none> <none>

kube-system kube-controller-manager-ks-master-0 1/1 Running 0 35m 192.168.9.91 ks-master-0 <none> <none>

kube-system kube-controller-manager-ks-master-1 1/1 Running 0 35m 192.168.9.92 ks-master-1 <none> <none>

kube-system kube-controller-manager-ks-master-2 1/1 Running 0 35m 192.168.9.93 ks-master-2 <none> <none>

kube-system kube-proxy-728cs 1/1 Running 0 35m 192.168.9.92 ks-master-1 <none> <none>

kube-system kube-proxy-ndc62 1/1 Running 0 35m 192.168.9.93 ks-master-2 <none> <none>

kube-system kube-proxy-qdmkb 1/1 Running 0 35m 192.168.9.95 ks-worker-0 <none> <none>

kube-system kube-proxy-sk4hz 1/1 Running 0 35m 192.168.9.91 ks-master-0 <none> <none>

kube-system kube-scheduler-ks-master-0 1/1 Running 0 35m 192.168.9.91 ks-master-0 <none> <none>

kube-system kube-scheduler-ks-master-1 1/1 Running 0 35m 192.168.9.92 ks-master-1 <none> <none>

kube-system kube-scheduler-ks-master-2 1/1 Running 0 35m 192.168.9.93 ks-master-2 <none> <none>

kube-system nodelocaldns-5594x 1/1 Running 0 35m 192.168.9.92 ks-master-1 <none> <none>

kube-system nodelocaldns-d572z 1/1 Running 0 35m 192.168.9.95 ks-worker-0 <none> <none>

kube-system nodelocaldns-gnbg6 1/1 Running 0 35m 192.168.9.93 ks-master-2 <none> <none>

kube-system nodelocaldns-h4vmx 1/1 Running 0 35m 192.168.9.91 ks-master-0 <none> <none>

kube-system openebs-localpv-provisioner-7497b4c996-ngnv9 1/1 Running 0 34m 10.233.115.1 ks-worker-0 <none> <none>

kube-system snapshot-controller-0 1/1 Running 0 33m 10.233.115.4 ks-worker-0 <none> <none>

kubesphere-controls-system default-http-backend-587748d6b4-57zck 1/1 Running 0 31m 10.233.115.6 ks-worker-0 <none> <none>

kubesphere-controls-system kubectl-admin-5d588c455b-7bw75 1/1 Running 0 26m 10.233.115.19 ks-worker-0 <none> <none>

kubesphere-monitoring-system alertmanager-main-0 2/2 Running 0 28m 10.233.115.9 ks-worker-0 <none> <none>

kubesphere-monitoring-system alertmanager-main-1 2/2 Running 0 28m 10.233.115.10 ks-worker-0 <none> <none>

kubesphere-monitoring-system alertmanager-main-2 2/2 Running 0 28m 10.233.115.11 ks-worker-0 <none> <none>

kubesphere-monitoring-system kube-state-metrics-5b8dc5c5c6-9ng42 3/3 Running 0 29m 10.233.115.8 ks-worker-0 <none> <none>

kubesphere-monitoring-system node-exporter-79c6m 2/2 Running 0 29m 192.168.9.95 ks-worker-0 <none> <none>

kubesphere-monitoring-system node-exporter-b57bp 2/2 Running 0 29m 192.168.9.92 ks-master-1 <none> <none>

kubesphere-monitoring-system node-exporter-t9vrm 2/2 Running 0 29m 192.168.9.91 ks-master-0 <none> <none>

kubesphere-monitoring-system node-exporter-vm9cq 2/2 Running 0 29m 192.168.9.93 ks-master-2 <none> <none>

kubesphere-monitoring-system notification-manager-deployment-6f8c66ff88-mqmxx 2/2 Running 0 27m 10.233.115.16 ks-worker-0 <none> <none>

kubesphere-monitoring-system notification-manager-deployment-6f8c66ff88-pjm79 2/2 Running 0 27m 10.233.115.15 ks-worker-0 <none> <none>

kubesphere-monitoring-system notification-manager-operator-6455b45546-kgdpf 2/2 Running 0 28m 10.233.115.13 ks-worker-0 <none> <none>

kubesphere-monitoring-system prometheus-k8s-0 2/2 Running 0 28m 10.233.115.14 ks-worker-0 <none> <none>

kubesphere-monitoring-system prometheus-k8s-1 0/2 Pending 0 28m <none> <none> <none> <none>

kubesphere-monitoring-system prometheus-operator-66d997dccf-zfdf5 2/2 Running 0 29m 10.233.115.7 ks-worker-0 <none> <none>

kubesphere-system ks-apiserver-7ddfccbb94-kd7tg 1/1 Running 0 31m 10.233.115.18 ks-worker-0 <none> <none>

kubesphere-system ks-console-7f88c4fd8d-b4wdr 1/1 Running 0 31m 10.233.115.5 ks-worker-0 <none> <none>

kubesphere-system ks-controller-manager-6cd89786dc-4xnhq 1/1 Running 0 31m 10.233.115.17 ks-worker-0 <none> <none>

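kubesphere-system ks-installer-559fc4b544-pcdrn 1/1 Running 0 34m 10.233.115.3 ks-worker-0 <none> <none>

Special note: with only one worker node, prometheus-k8s-1 in the kubesphere-monitoring-system namespace cannot be scheduled and stays Pending. The master nodes carry a taint it does not tolerate, and the single worker has insufficient CPU (see the FailedScheduling event in section 5). Ignore it for now. Recreating it after new nodes join resolves the issue.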
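In a long Pod list like the one above, Pods that are not Running are easy to miss. This minimal sketch filters the kubectl output on the STATUS column. The function name is hypothetical, and it assumes the `-A` layout, where STATUS is the fourth column.

```shell
#!/bin/sh
# Print "namespace/name STATUS" for every Pod whose STATUS column is not Running.
# Reads "kubectl get pods -A" output (with or without -o wide) on stdin.
non_running_pods() {
  awk 'NR > 1 && $4 != "Running" { print $1 "/" $2 " " $4 }'
}
```

Usage example: `kubectl get pods -A | non_running_pods`. kubectl can also filter on the server with `--field-selector=status.phase!=Running`. That selector matches the Pod phase rather than the STATUS column, so the two methods can disagree for Pods in states such as CrashLoopBackOff.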

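Run the following command to list the Pods running in the cluster, sorted by the node they run on.

kubectl get pods -o wide -A | sort -k 8

The output shows that the worker node carries the most workloads. Apart from DaemonSet Pods such as node-exporter, every workload outside the kube-system namespace runs on the worker.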

[root@ks-master-0 kubekey]# kubectl get pods -o wide -A | sort -k 8

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kubesphere-monitoring-system node-exporter-ccxbp 2/2 Running 0 147m 192.168.9.91 ks-master-0 <none> <none>

kubesphere-monitoring-system node-exporter-t9vrm 2/2 Running 0 29m 192.168.9.91 ks-master-0 <none> <none>

kube-system calico-node-kx4fz 1/1 Running 0 35m 192.168.9.91 ks-master-0 <none> <none>

kube-system kube-apiserver-ks-master-0 1/1 Running 0 36m 192.168.9.91 ks-master-0 <none> <none>

kube-system kube-controller-manager-ks-master-0 1/1 Running 0 36m 192.168.9.91 ks-master-0 <none> <none>

kube-system kube-proxy-sk4hz 1/1 Running 0 35m 192.168.9.91 ks-master-0 <none> <none>

kube-system kube-scheduler-ks-master-0 1/1 Running 0 36m 192.168.9.91 ks-master-0 <none> <none>

kube-system nodelocaldns-h4vmx 1/1 Running 0 36m 192.168.9.91 ks-master-0 <none> <none>

kubesphere-monitoring-system node-exporter-b57bp 2/2 Running 0 29m 192.168.9.92 ks-master-1 <none> <none>

kube-system calico-node-qx5qk 1/1 Running 0 35m 192.168.9.92 ks-master-1 <none> <none>

kube-system coredns-f657fccfd-8lnd5 1/1 Running 0 36m 10.233.103.2 ks-master-1 <none> <none>

kube-system coredns-f657fccfd-vtlmx 1/1 Running 0 36m 10.233.103.1 ks-master-1 <none> <none>

kube-system kube-apiserver-ks-master-1 1/1 Running 0 35m 192.168.9.92 ks-master-1 <none> <none>

kube-system kube-controller-manager-ks-master-1 1/1 Running 0 35m 192.168.9.92 ks-master-1 <none> <none>

kube-system kube-proxy-728cs 1/1 Running 0 35m 192.168.9.92 ks-master-1 <none> <none>

kube-system kube-scheduler-ks-master-1 1/1 Running 0 35m 192.168.9.92 ks-master-1 <none> <none>

kube-system nodelocaldns-5594x 1/1 Running 0 35m 192.168.9.92 ks-master-1 <none> <none>

kubesphere-monitoring-system node-exporter-vm9cq 2/2 Running 0 29m 192.168.9.93 ks-master-2 <none> <none>

kube-system calico-node-rb2cf 1/1 Running 0 35m 192.168.9.93 ks-master-2 <none> <none>

kube-system kube-apiserver-ks-master-2 1/1 Running 0 35m 192.168.9.93 ks-master-2 <none> <none>

kube-system kube-controller-manager-ks-master-2 1/1 Running 0 35m 192.168.9.93 ks-master-2 <none> <none>

kube-system kube-proxy-ndc62 1/1 Running 0 35m 192.168.9.93 ks-master-2 <none> <none>

kube-system kube-scheduler-ks-master-2 1/1 Running 0 35m 192.168.9.93 ks-master-2 <none> <none>

kube-system nodelocaldns-gnbg6 1/1 Running 0 35m 192.168.9.93 ks-master-2 <none> <none>

kubesphere-controls-system default-http-backend-587748d6b4-57zck 1/1 Running 0 31m 10.233.115.6 ks-worker-0 <none> <none>

kubesphere-monitoring-system alertmanager-main-0 2/2 Running 0 29m 10.233.115.9 ks-worker-0 <none> <none>

kubesphere-monitoring-system kube-state-metrics-5b8dc5c5c6-9ng42 3/3 Running 0 29m 10.233.115.8 ks-worker-0 <none> <none>

kubesphere-monitoring-system node-exporter-79c6m 2/2 Running 0 29m 192.168.9.95 ks-worker-0 <none> <none>

kubesphere-monitoring-system prometheus-operator-66d997dccf-zfdf5 2/2 Running 0 29m 10.233.115.7 ks-worker-0 <none> <none>

kubesphere-system ks-console-7f88c4fd8d-b4wdr 1/1 Running 0 31m 10.233.115.5 ks-worker-0 <none> <none>

kubesphere-system ks-installer-559fc4b544-pcdrn 1/1 Running 0 35m 10.233.115.3 ks-worker-0 <none> <none>

kube-system calico-kube-controllers-f9f9bbcc9-9x49n 1/1 Running 0 35m 10.233.115.2 ks-worker-0 <none> <none>

kube-system calico-node-kvfbg 1/1 Running 0 35m 192.168.9.95 ks-worker-0 <none> <none>

kube-system haproxy-ks-worker-0 1/1 Running 0 35m 192.168.9.95 ks-worker-0 <none> <none>

kube-system kube-proxy-qdmkb 1/1 Running 0 35m 192.168.9.95 ks-worker-0 <none> <none>

kube-system nodelocaldns-d572z 1/1 Running 0 35m 192.168.9.95 ks-worker-0 <none> <none>

kube-system openebs-localpv-provisioner-7497b4c996-ngnv9 1/1 Running 0 35m 10.233.115.1 ks-worker-0 <none> <none>

kube-system snapshot-controller-0 1/1 Running 0 33m 10.233.115.4 ks-worker-0 <none> <none>

kubesphere-controls-system kubectl-admin-5d588c455b-7bw75 1/1 Running 0 26m 10.233.115.19 ks-worker-0 <none> <none>

kubesphere-monitoring-system alertmanager-main-1 2/2 Running 0 29m 10.233.115.10 ks-worker-0 <none> <none>

kubesphere-monitoring-system alertmanager-main-2 2/2 Running 0 29m 10.233.115.11 ks-worker-0 <none> <none>

kubesphere-monitoring-system notification-manager-deployment-6f8c66ff88-mqmxx 2/2 Running 0 27m 10.233.115.16 ks-worker-0 <none> <none>

kubesphere-monitoring-system notification-manager-deployment-6f8c66ff88-pjm79 2/2 Running 0 27m 10.233.115.15 ks-worker-0 <none> <none>

kubesphere-monitoring-system notification-manager-operator-6455b45546-kgdpf 2/2 Running 0 29m 10.233.115.13 ks-worker-0 <none> <none>

kubesphere-monitoring-system prometheus-k8s-0 2/2 Running 0 29m 10.233.115.14 ks-worker-0 <none> <none>

kubesphere-system ks-apiserver-7ddfccbb94-kd7tg 1/1 Running 0 31m 10.233.115.18 ks-worker-0 <none> <none>

kubesphere-system ks-controller-manager-6cd89786dc-4xnhq 1/1 Running 0 31m 10.233.115.17 ks-worker-0 <none> <none>

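kubesphere-monitoring-system prometheus-k8s-1 0/2 Pending 0 29m <none> <none> <none> <none>

Note: to simulate adding nodes as in production, the lab initially joins only one worker node. As a result, that node is heavily loaded and prometheus-k8s-1 stays Pending. In real deployments we recommend joining all worker nodes at once.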
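To summarize the distribution instead of reading the sorted list, you can count the Pods per node. In this sketch the node names come from custom-columns output rather than column 8. kubectl can print the RESTARTS column as, for example, "1 (5m ago)", which shifts the whitespace-separated columns. pods_per_node is a hypothetical name.

```shell
#!/bin/sh
# Count Pods per node from one node name per line on stdin, busiest node first.
# Unscheduled Pods appear as <none> in custom-columns output.
pods_per_node() {
  sort | uniq -c | sort -rn
}
```

Usage example: `kubectl get pods -A --no-headers -o custom-columns=NODE:.spec.nodeName | pods_per_node`.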

- View the image list

Run the following command to list the images already pulled on a Kubernetes node.

crictl images ls

Run it on a master node and on the worker node. The output is as follows:

# Master node

[root@ks-master-0 kubekey]# crictl images ls

IMAGE TAG IMAGE ID SIZE

registry.cn-beijing.aliyuncs.com/kubesphereio/cni v3.23.2 a87d3f6f1b8fd 111MB

registry.cn-beijing.aliyuncs.com/kubesphereio/coredns 1.8.6 a4ca41631cc7a 13.6MB

registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache 1.15.12 5340ba194ec91 42.1MB

registry.cn-beijing.aliyuncs.com/kubesphereio/kube-apiserver v1.24.12 2d5d51b77357e 34.1MB

registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controller-manager v1.24.12 d30605171488d 31.3MB

registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers v3.23.2 ec95788d0f725 56.4MB

registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy v1.24.12 562ccc25ea629 39.6MB

registry.cn-beijing.aliyuncs.com/kubesphereio/kube-rbac-proxy v0.11.0 29589495df8d9 19.2MB

registry.cn-beijing.aliyuncs.com/kubesphereio/kube-scheduler v1.24.12 cfa5d12eaa131 15.7MB

registry.cn-beijing.aliyuncs.com/kubesphereio/node-exporter v1.3.1 1dbe0e9319764 10.3MB

registry.cn-beijing.aliyuncs.com/kubesphereio/node v3.23.2 a3447b26d32c7 77.8MB

registry.cn-beijing.aliyuncs.com/kubesphereio/pause 3.7 221177c6082a8 311kB

registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol v3.23.2 b21e2d7408a79 8.67MB

# Worker node

[root@ks-worker-0 ~]# crictl images ls

IMAGE TAG IMAGE ID SIZE

registry.cn-beijing.aliyuncs.com/kubesphereio/alertmanager v0.23.0 ba2b418f427c0 26.5MB

registry.cn-beijing.aliyuncs.com/kubesphereio/cni v3.23.2 a87d3f6f1b8fd 111MB

registry.cn-beijing.aliyuncs.com/kubesphereio/coredns 1.8.6 a4ca41631cc7a 13.6MB

registry.cn-beijing.aliyuncs.com/kubesphereio/defaultbackend-amd64 1.4 846921f0fe0e5 1.82MB

registry.cn-beijing.aliyuncs.com/kubesphereio/haproxy 2.3 0ea9253dad7c0 38.5MB

registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache 1.15.12 5340ba194ec91 42.1MB

registry.cn-beijing.aliyuncs.com/kubesphereio/ks-apiserver v3.3.2 37a47a554cae2 68.3MB

registry.cn-beijing.aliyuncs.com/kubesphereio/ks-console v3.3.2 684e2ec7ee7c1 38.7MB

registry.cn-beijing.aliyuncs.com/kubesphereio/ks-controller-manager v3.3.2 ce4308712bea2 63MB

registry.cn-beijing.aliyuncs.com/kubesphereio/ks-installer v3.3.2 d4c00015d9614 154MB

registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers v3.23.2 ec95788d0f725 56.4MB

registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy v1.24.12 562ccc25ea629 39.6MB

registry.cn-beijing.aliyuncs.com/kubesphereio/kube-rbac-proxy v0.11.0 29589495df8d9 19.2MB

registry.cn-beijing.aliyuncs.com/kubesphereio/kube-rbac-proxy v0.8.0 ad393d6a4d1b1 20MB

registry.cn-beijing.aliyuncs.com/kubesphereio/kube-state-metrics v2.5.0 b781b8478c274 11.9MB

registry.cn-beijing.aliyuncs.com/kubesphereio/kubectl v1.22.0 30c7baa8e18c0 26.6MB

registry.cn-beijing.aliyuncs.com/kubesphereio/linux-utils 3.3.0 e88cfb3a763b9 26.9MB

registry.cn-beijing.aliyuncs.com/kubesphereio/node-exporter v1.3.1 1dbe0e9319764 10.3MB

registry.cn-beijing.aliyuncs.com/kubesphereio/node v3.23.2 a3447b26d32c7 77.8MB

registry.cn-beijing.aliyuncs.com/kubesphereio/notification-manager-operator v1.4.0 08ca8def2520f 19.3MB

registry.cn-beijing.aliyuncs.com/kubesphereio/notification-manager v1.4.0 b8b2f6b3790fe 21.7MB

registry.cn-beijing.aliyuncs.com/kubesphereio/notification-tenant-sidecar v3.2.0 4b47c43ec6ab6 14.7MB

registry.cn-beijing.aliyuncs.com/kubesphereio/pause 3.7 221177c6082a8 311kB

registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol v3.23.2 b21e2d7408a79 8.67MB

registry.cn-beijing.aliyuncs.com/kubesphereio/prometheus-config-reloader v0.55.1 7c63de88523a9 4.84MB

registry.cn-beijing.aliyuncs.com/kubesphereio/prometheus-operator v0.55.1 b30c215b787f5 14.3MB

registry.cn-beijing.aliyuncs.com/kubesphereio/prometheus v2.34.0 e3cf894a63f55 78.1MB

registry.cn-beijing.aliyuncs.com/kubesphereio/provisioner-localpv 3.3.0 739e82fed8b2c 28.8MB

registry.cn-beijing.aliyuncs.com/kubesphereio/snapshot-controller v4.0.0 f1d8a00ae690f 19MB

Note: the worker node holds more images because it runs services beyond the core K8s services.
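To see exactly which images exist only on the worker, you can compare the two listings. This sketch assumes each node's `crictl images` output has been saved to a file (for example `crictl images > worker.txt`) and copied to one machine. only_in_second is a hypothetical name.

```shell
#!/bin/sh
# Print IMAGE:TAG pairs present in listing $2 but not in listing $1.
# Both files are saved "crictl images" output; the header row is skipped.
only_in_second() {
  a=$(mktemp); b=$(mktemp)
  awk 'NR > 1 { print $1 ":" $2 }' "$1" | sort > "$a"
  awk 'NR > 1 { print $1 ":" $2 }' "$2" | sort > "$b"
  comm -13 "$a" "$b"  # -1/-3 hide lines unique to $1 and lines common to both
  rm -f "$a" "$b"
}
```

Usage example: `only_in_second master.txt worker.txt`. comm requires sorted input, which is why both listings pass through sort first.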

We have now deployed a minimal Kubernetes cluster with three master nodes and one worker node, plus KubeSphere. We also checked the cluster status in both the KubeSphere console and the command line.

5. Common issues

Issue 1

Symptom

prometheus-k8s-1 in the kubesphere-monitoring-system namespace is in the Pending state.

# View the error details with this command

$ kubectl describe pod prometheus-k8s-1 -n kubesphere-monitoring-system

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 2m35s default-scheduler 0/4 nodes are available: 1 Insufficient cpu, 3 node(s) had untolerated taint {node-role.kubernetes.io/master: }. preemption: 0/4 nodes are available: 1 No preemption victims found for incoming pod, 3 Preemption is not helpful for scheduling.

Solution

Add more worker nodes, then recreate the Pod.

Conclusion

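This article walked through the automated deployment of a highly available KubeSphere and Kubernetes cluster with KubeKey on openEuler 22.03 LTS SP2 servers.

After deployment, we used the KubeSphere console and the kubectl command line to check and verify the status of KubeSphere and the Kubernetes cluster.

In the next issue we will demonstrate how to add new nodes to an existing cluster. Stay tuned.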

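The Season 2 "Playing with K8s on KubeSphere" series consists of the hands-on documents for the Season 2 KubeSphere K8s hands-on training camp run by 运维有术.

For more KubeSphere, Kubernetes, and cloud-native operations skills, follow me, or join my Knowledge Planet (知识星球) community directly.

If you like this article, please share it with your friends!

This series covers (but is not limited to) the following areas: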

- KubeSphere

- Kubernetes

- Ansible

- Automated operations

- CNCF technology stack

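Get the docs/code

- Knowledge Planet (知识星球): 运维有术

Copyright notice

- All content is original and took effort to put together. Thanks for bookmarking it. Please credit the source when reposting.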

About Me

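- Nickname: 无名运维侠

- Occupation: freelancer

- WeChat: 运维有术 (official account menu / contact me)

- Areas: cloud computing / cloud-native operations, automated operations, big data

- Skills: OpenStack, Kubernetes, KubeSphere, Ansible, Python, Go, DevOps, CNCF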

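Original content notice: this article is published on the Tencent Cloud Developer Community with the author's authorization and may not be reproduced without permission.

For infringement claims, contact cloudcommunity@tencent.com to have it removed.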
