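The software environment requirements for this best practice are as follows:

Application environment:

① Container Service for Kubernetes (ACK) on Apsara Stack (private cloud) V3.10.0.

② Cloud Enterprise Network (CEN) on the public cloud.

③ Auto Scaling (ESS) scaling groups on the public cloud.

Prerequisites:

1) Use the Apsara Stack container service, or deploy Agile PaaS manually on ECS instances.

2) Set up a dedicated leased line that connects the VPC hosting the container service to the VPC on the public cloud.

3) Activate Auto Scaling (ESS) on the public cloud.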

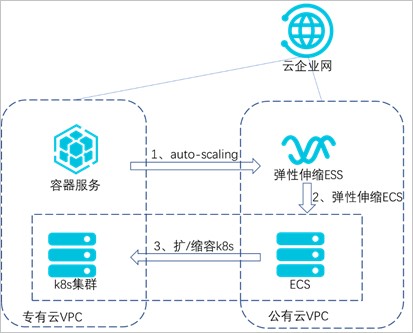

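In this practice, a K8s business cluster runs on Apsara Stack, and a stress test is run against a test workload. The practice relies on the following three products and capabilities:

① An Alibaba Cloud CEN leased line connects Apsara Stack and the public cloud, so that the VPCs on the two clouds can reach each other.

② The HPA capability of K8s (Kubernetes) scales containers horizontally.

③ The K8s Cluster Autoscaler, together with Alibaba Cloud ESS scaling groups, scales nodes automatically.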

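HPA (Horizontal Pod Autoscaler) is a K8s resource object. It dynamically adjusts the number of Pods in objects such as StatefulSets and Deployments based on metrics such as CPU and memory, so the services running on them can adapt to changes in those metrics.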
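For a resource metric such as CPU, the HPA works with utilization relative to the Pods' requests. Roughly, this is the total CPU usage of the target's Pods divided by their total CPU requests, as an integer percentage. The sketch below computes that number from per-Pod usage figures. The function name `cpu_utilization_pct` and its argument layout are hypothetical. It assumes every Pod has the same CPU request and ignores the controller's handling of unready Pods and missing metrics.

```sh
#!/bin/sh
# Hypothetical helper: CPU utilization as a percentage of the request,
# computed as sum(usage) * 100 / (number_of_pods * request), truncated to an integer.
# Assumes every Pod has the same CPU request.
# Usage: cpu_utilization_pct REQUEST_MILLICORES USAGE_MILLICORES...
cpu_utilization_pct() {
  request_m=${1:-0}
  [ "$#" -gt 0 ] && shift
  if [ "$request_m" -le 0 ] || [ "$#" -eq 0 ]; then
    # Without a CPU request the ratio is undefined, which is why HPA needs one
    echo "no CPU request or no Pods: utilization cannot be computed" >&2
    return 1
  fi
  total=0
  for usage_m in "$@"; do
    total=$((total + usage_m))
  done
  echo $((total * 100 / ($# * request_m)))
}

# Two Pods, each requesting 250m, currently using 100m and 60m of CPU
cpu_utilization_pct 250 100 60
```

In the usage line, 160m used out of 500m requested gives 32 (%).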

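When the metric of the tested workload reaches its upper limit, the HPA automatically scales out the workload Pods. When the business cluster cannot accommodate more Pods, the Cluster Autoscaler triggers the ESS service on the public cloud. ESS then creates ECS instances on the public cloud and automatically adds them to the K8s cluster on Apsara Stack.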

Figure 1: Architecture diagram

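This example creates an nginx application with HPA enabled. Once the application is running, it is scaled out when the Pods' CPU utilization (relative to their request) exceeds the 20% threshold set in this example. It is scaled in when utilization falls below 20%.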
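The scale-out and scale-in behaviour above follows the replica formula in the Kubernetes HPA documentation:

desiredReplicas = ceil(currentReplicas × currentUtilization / targetUtilization)

Two adjustments apply. Nothing changes while the ratio stays within a tolerance (0.1 by default, set by `--horizontal-pod-autoscaler-tolerance`), and the result is clamped to minReplicas/maxReplicas. The sketch below reproduces only that arithmetic with integer shell math. The function name is hypothetical. The real controller also considers Pod readiness, missing metrics and stabilization windows.

```sh
#!/bin/sh
# Hypothetical helper reproducing the HPA replica arithmetic:
#   desired = ceil(current * currentUtilization / targetUtilization)
# with no change inside the default 10% tolerance, clamped to [min, max].
# Usage: hpa_desired_replicas CURRENT UTIL_PCT TARGET_PCT MIN MAX
hpa_desired_replicas() {
  current=$1 util=$2 target=$3 min=$4 max=$5
  diff=$((util - target))
  if [ "$diff" -lt 0 ]; then
    diff=$((-diff))
  fi
  # |util/target - 1| <= 0.1  <=>  10 * |util - target| <= target
  if [ $((diff * 10)) -le "$target" ]; then
    desired=$current
  else
    # Integer ceiling division: ceil(a / b) = (a + b - 1) / b
    desired=$(( (current * util + target - 1) / target ))
  fi
  if [ "$desired" -lt "$min" ]; then desired=$min; fi
  if [ "$desired" -gt "$max" ]; then desired=$max; fi
  echo "$desired"
}

# One Pod at 90% CPU against the 20% target used in this example
hpa_desired_replicas 1 90 20 1 10
```

With one Pod at 90% against a 20% target, the sketch asks for ceil(4.5) = 5 replicas. At 21% it keeps the current count, because 21/20 is within the 10% tolerance.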

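1. If you use a self-built K8s cluster, configure HPA with YAML files.

1) Create an nginx application. You must set a request value for the application; otherwise HPA does not take effect.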

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hpa-test
spec:
  template:
    metadata:
      labels:
        app: hpa-test
    spec:
      dnsPolicy: ClusterFirst
      terminationGracePeriodSeconds: 30
      containers:
        - image: '192.168.**.***:5000/admin/hpa-example:v1'
          imagePullPolicy: IfNotPresent
          terminationMessagePolicy: File
          terminationMessagePath: /dev/termination-log
          name: hpa-test
          resources:
            requests:
              cpu: 250m   # Required, or HPA does not work; 250m is only an example value
          securityContext: {}
      restartPolicy: Always
      schedulerName: default-scheduler
  replicas: 1
  selector:
    matchLabels:
      app: hpa-test
  revisionHistoryLimit: 10
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
  progressDeadlineSeconds: 600
```

2) Create the HPA.

```yaml
# Status annotations and fields such as creationTimestamp, resourceVersion,
# selfLink and uid are set by the API server; do not include them when creating the object.
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: hpa-test
  namespace: default
spec:
  maxReplicas: 10                     # Maximum number of Pods; 10 is only an example value
  minReplicas: 1
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: hpa-test                    # The Deployment created in step 1)
  targetCPUUtilizationPercentage: 20  # CPU threshold, as a percentage of the request
```

2. If you use Alibaba Cloud Container Service, enable HPA when you deploy the application.

Figure 2: Access settings

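Setting resource requests correctly and sensibly is a prerequisite for elastic scaling. The node autoscaler decides whether to scale based on how K8s has allocated resources during scheduling, and the resources allocated on a node are calculated from the resource requests of the Pods on it.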

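When a Pod's resource requests cannot be satisfied and the Pod enters the Pending state, the node autoscaler calculates how many nodes are needed. It bases this on the instance types and constraints configured in the scaling group.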
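As a rough illustration of that node-count calculation, the sketch below makes three simplifying assumptions: all Pending Pods have the same CPU request, CPU is the only constraint, and every new node uses one instance type. The real Cluster Autoscaler instead simulates scheduling the Pending Pods onto template nodes built from the scaling group. The function and parameter names are hypothetical.

```sh
#!/bin/sh
# Hypothetical helper: estimate how many nodes to add for Pending Pods.
# Simplifications: identical CPU requests, CPU only, one instance type.
# Usage: nodes_to_add PENDING_PODS POD_CPU_M NODE_ALLOCATABLE_CPU_M CURRENT_NODES MAX_NODES
nodes_to_add() {
  pending_pods=$1 pod_cpu_m=$2 node_cpu_m=$3 current_nodes=$4 max_nodes=$5
  pods_per_node=$((node_cpu_m / pod_cpu_m))
  if [ "$pods_per_node" -lt 1 ]; then
    # A single Pod does not fit on this instance type, so adding nodes cannot help
    echo 0
    return
  fi
  # Integer ceiling division: nodes needed to hold all Pending Pods
  needed=$(( (pending_pods + pods_per_node - 1) / pods_per_node ))
  # Never exceed the scaling group's maximum instance count
  room=$((max_nodes - current_nodes))
  if [ "$room" -lt 0 ]; then room=0; fi
  if [ "$needed" -gt "$room" ]; then needed=$room; fi
  echo "$needed"
}

# 10 Pending Pods requesting 500m each, nodes with 1900m allocatable, group max 5
nodes_to_add 10 500 1900 0 5
```

Each node fits 3 such Pods, so the usage line asks for 4 nodes. If 3 of the 5 allowed nodes already exist, the result is capped at 2.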

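If the scaling conditions can be met, nodes from the scaling group are added to the cluster. Conversely, when a node belongs to the scaling group and the resource requests of the Pods on it fall below the threshold, the node autoscaler scales that node in.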
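The scale-in threshold is expressed as request-based utilization. The ca.yml used later in this practice sets `--scale-down-utilization-threshold=0.2`. The sketch below shows a simplified version of that check: CPU and memory utilization are each computed as requested / allocatable, and the larger one is compared with the threshold. The function name and the sample numbers are hypothetical. The real autoscaler additionally requires that the node's Pods can be rescheduled elsewhere and that the node stays unneeded for `--scale-down-unneeded-time` (8m in ca.yml).

```sh
#!/bin/sh
# Hypothetical helper: simplified scale-down candidate check.
# Returns 0 (true) when max(cpu%, mem%) of requested/allocatable is below the threshold.
# Usage: is_scale_down_candidate REQ_CPU_M ALLOC_CPU_M REQ_MEM_MI ALLOC_MEM_MI THRESHOLD_PCT
is_scale_down_candidate() {
  req_cpu_m=$1 alloc_cpu_m=$2 req_mem_mi=$3 alloc_mem_mi=$4 threshold_pct=$5
  cpu_pct=$((req_cpu_m * 100 / alloc_cpu_m))
  mem_pct=$((req_mem_mi * 100 / alloc_mem_mi))
  util_pct=$cpu_pct
  if [ "$mem_pct" -gt "$util_pct" ]; then
    util_pct=$mem_pct
  fi
  # Truncation is safe: floor(x) < T exactly when x < T for an integer T
  [ "$util_pct" -lt "$threshold_pct" ]
}

# 300m of 1900m CPU and 500Mi of 7000Mi memory requested, threshold 20%
if is_scale_down_candidate 300 1900 500 7000 20; then
  echo "candidate for scale-down"
else
  echo "keep node"
fi
```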

1. Configure the ESS scaling group.

1) Create an ESS scaling group and record its minimum and maximum number of instances.

Figure 3: Modify the scaling group
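The recorded minimum and maximum are relevant to the Cluster Autoscaler's `--nodes` flag in ca.yml (`--nodes=0:5:asg-hp3fbu2zeu9bg3clraqj`). That flag takes the form `<min>:<max>:<scaling group ID>`, and the bounds should be consistent with the scaling group's own limits. The sketch below builds and sanity-checks that flag value. The function name is hypothetical, and the ID shown is only the sample from ca.yml.

```sh
#!/bin/sh
# Hypothetical helper: build the Cluster Autoscaler --nodes flag from the
# ESS scaling group's minimum/maximum instance counts and its group ID.
# Flag format: --nodes=<min>:<max>:<scaling group ID>
ca_nodes_flag() {
  min=$1 max=$2 group_id=$3
  for v in "$min" "$max"; do
    case $v in
      ''|*[!0-9]*)
        echo "not a non-negative integer: '$v'" >&2
        return 1
        ;;
    esac
  done
  if [ "$min" -gt "$max" ]; then
    echo "minimum ($min) is larger than maximum ($max)" >&2
    return 1
  fi
  if [ -z "$group_id" ]; then
    echo "scaling group ID is empty" >&2
    return 1
  fi
  printf '%s\n' "--nodes=$min:$max:$group_id"
}

# Sample values from this practice's ca.yml
ca_nodes_flag 0 5 asg-hp3fbu2zeu9bg3clraqj
```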

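2) Create a scaling configuration and record its ID. The scaling configuration uses the following script, which synchronizes the clock, attaches the new ECS instance to the K8s cluster on Apsara Stack, and configures Docker's registries.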

Figure 4: Scaling configuration

```sh
#!/bin/sh
# Synchronize the clock with the Alibaba Cloud NTP server
yum install -y ntpdate
ntpdate -u ntp1.aliyun.com

# Attach this ECS instance to the K8s cluster on Apsara Stack
curl http://example.com/public/hybrid/attach_local_node_aliyun.sh | bash -s -- \
  --docker-version 17.06.2-ce-3 \
  --token 9s92co.y2gkocbumal4fz1z \
  --endpoint 192.168.**.***:6443 \
  --cluster-dns 10.254.**.** \
  --region cn-huhehaote

# Write the Docker daemon configuration: registry mirror and private registry
cat > /etc/docker/daemon.json <<'EOF'
{
  "registry-mirrors": ["https://registry-vpc.cn-huhehaote.aliyuncs.com"],
  "insecure-registries": ["https://192.168.**.***:5000"]
}
EOF

systemctl restart docker
```

2. Deploy the autoscaler in the K8s cluster:

```sh
kubectl apply -f ca.yml
```

Create the autoscaler based on ca.yml, and change the following settings to match your environment (the values are base64-encoded):

```yaml
access-key-id: "TFRBSWlCSFJyeHd2QXZ6****"
access-key-secret: "bGIyQ3NuejFQOWM0WjFUNjR4WTVQZzVPRXND****"
region-id: "Y24taHVoZWhh****"
```
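Values under a Secret's `data` field must be base64-encoded. For example, the sample `region-id` value is consistent with the encoding of `cn-huhehaote`. A minimal way to produce such values is sketched below; the function name is hypothetical. It uses `printf '%s'` rather than `echo` so that no trailing newline ends up inside the encoded credential.

```sh
#!/bin/sh
# Hypothetical helper: encode a value for the data field of a Kubernetes Secret.
# printf '%s' avoids encoding a trailing newline (a common mistake with echo);
# tr removes the line wrapping that base64 may add to long outputs.
to_secret_value() {
  printf '%s' "$1" | base64 | tr -d '\n'
  echo
}

# The region used in this practice
to_secret_value cn-huhehaote
```

The usage line prints `Y24taHVoZWhhb3Rl`. Alternatively, `kubectl create secret generic cloud-config -n kube-system --from-literal=region-id=cn-huhehaote` (plus one `--from-literal` per key) performs the encoding itself.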

The content of ca.yml is as follows:

```yaml
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-addon: cluster-autoscaler.addons.k8s.io
    k8s-app: cluster-autoscaler
  name: cluster-autoscaler
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: cluster-autoscaler
  labels:
    k8s-addon: cluster-autoscaler.addons.k8s.io
    k8s-app: cluster-autoscaler
rules:
  - apiGroups: [""]
    resources: ["events", "endpoints"]
    verbs: ["create", "patch"]
  - apiGroups: [""]
    resources: ["pods/eviction"]
    verbs: ["create"]
  - apiGroups: [""]
    resources: ["pods/status"]
    verbs: ["update"]
  - apiGroups: [""]
    resources: ["endpoints"]
    resourceNames: ["cluster-autoscaler"]
    verbs: ["get", "update"]
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["watch", "list", "get", "update"]
  - apiGroups: [""]
    resources: ["pods", "services", "replicationcontrollers", "persistentvolumeclaims", "persistentvolumes"]
    verbs: ["watch", "list", "get"]
  - apiGroups: ["extensions"]
    resources: ["replicasets", "daemonsets"]
    verbs: ["watch", "list", "get"]
  - apiGroups: ["policy"]
    resources: ["poddisruptionbudgets"]
    verbs: ["watch", "list"]
  - apiGroups: ["apps"]
    resources: ["statefulsets"]
    verbs: ["watch", "list", "get"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["watch", "list", "get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: cluster-autoscaler
  namespace: kube-system
  labels:
    k8s-addon: cluster-autoscaler.addons.k8s.io
    k8s-app: cluster-autoscaler
rules:
  - apiGroups: [""]
    resources: ["configmaps"]
    verbs: ["create", "list", "watch"]
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["cluster-autoscaler-status", "cluster-autoscaler-priority-expander"]
    verbs: ["delete", "get", "update", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: cluster-autoscaler
  labels:
    k8s-addon: cluster-autoscaler.addons.k8s.io
    k8s-app: cluster-autoscaler
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-autoscaler
subjects:
  - kind: ServiceAccount
    name: cluster-autoscaler
    namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: cluster-autoscaler
  namespace: kube-system
  labels:
    k8s-addon: cluster-autoscaler.addons.k8s.io
    k8s-app: cluster-autoscaler
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: cluster-autoscaler
subjects:
  - kind: ServiceAccount
    name: cluster-autoscaler
    namespace: kube-system
---
apiVersion: v1
kind: Secret
metadata:
  name: cloud-config
  namespace: kube-system
type: Opaque
data:
  # base64-encoded values; replace them with the values for your environment
  access-key-id: "TFRBSWlCSFJyeHd2********"
  access-key-secret: "bGIyQ3NuejFQOWM0WjFUNjR4WTVQZzVP*********"
  region-id: "Y24taHVoZW********"
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cluster-autoscaler
  namespace: kube-system
  labels:
    app: cluster-autoscaler
spec:
  replicas: 1
  selector:
    matchLabels:
      app: cluster-autoscaler
  template:
    metadata:
      labels:
        app: cluster-autoscaler
    spec:
      dnsConfig:
        nameservers:
          - 100.XXX.XXX.XXX
          - 100.XXX.XXX.XXX
      nodeSelector:
        ca-key: ca-value
      priorityClassName: system-cluster-critical
      serviceAccountName: admin
      containers:
        - image: 192.XXX.XXX.XXX:XX/admin/autoscaler:v1.3.1-7369cf1
          name: cluster-autoscaler
          resources:
            limits:
              cpu: 100m
              memory: 300Mi
            requests:
              cpu: 100m
              memory: 300Mi
          command:
            - ./cluster-autoscaler
            - '--v=5'
            - '--stderrthreshold=info'
            - '--cloud-provider=alicloud'
            - '--scan-interval=30s'
            - '--scale-down-delay-after-add=8m'
            - '--scale-down-delay-after-failure=1m'
            - '--scale-down-unready-time=1m'
            - '--ok-total-unready-count=1000'
            - '--max-empty-bulk-delete=50'
            - '--expander=least-waste'
            - '--leader-elect=false'
            - '--scale-down-unneeded-time=8m'
            - '--scale-down-utilization-threshold=0.2'
            - '--scale-down-gpu-utilization-threshold=0.3'
            - '--skip-nodes-with-local-storage=false'
            # <min>:<max>:<ESS scaling group ID>
            - '--nodes=0:5:asg-hp3fbu2zeu9bg3clraqj'
          imagePullPolicy: "Always"
          env:
            - name: ACCESS_KEY_ID
              valueFrom:
                secretKeyRef:
                  name: cloud-config
                  key: access-key-id
            - name: ACCESS_KEY_SECRET
              valueFrom:
                secretKeyRef:
                  name: cloud-config
                  key: access-key-secret
            - name: REGION_ID
              valueFrom:
                secretKeyRef:
                  name: cloud-config
                  key: region-id
```

5. Results

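Start a busybox Pod and, inside it, run the following command to send requests to the Service of the application above. You can start several such Pods at the same time to increase the load.

```sh
while true; do wget -q -O- http://hpa-test/index.html; done
```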

Observe the HPA. Before the load is applied:

Figure 5: Before load

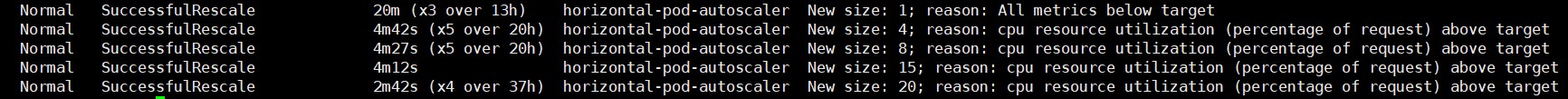

After the load is applied:

When CPU utilization reaches the threshold, the HPA scales the Pods out horizontally.

Figure 6: After load (1)

Figure 7: After load (2)

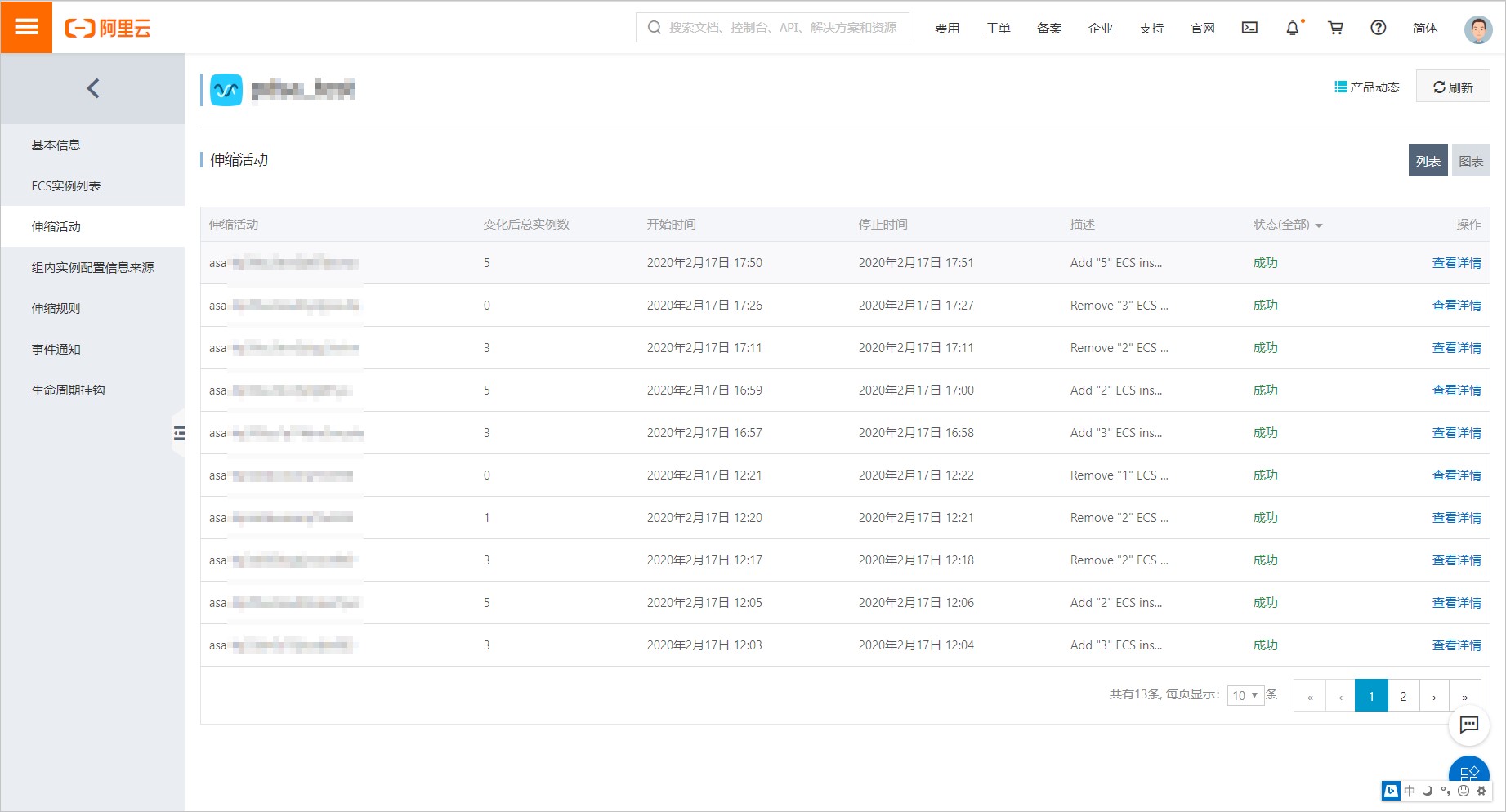

When cluster resources run short, the newly created Pods stay in the Pending state. This triggers the Cluster Autoscaler, which adds nodes automatically.

Figure 8: Scaling activities

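We are the SRE team of Alibaba Cloud Intelligence Global Technical Services. We aim to be a technology-based, service-oriented engineering team that keeps business systems highly available. We provide professional, systematic SRE services that help customers make better use of the cloud, build more stable and reliable business systems on it, and improve business stability. We hope to share more techniques that help enterprise customers move to the cloud, use it well, and run their workloads on it more stably and reliably.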
