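This topic describes how to migrate data between Apsara File Storage for HDFS and Object Storage Service (OSS). You can migrate data from Apsara File Storage for HDFS to OSS, or from OSS to Apsara File Storage for HDFS.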

Background information

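Alibaba Cloud Apsara File Storage for HDFS is a file storage service for compute resources such as Alibaba Cloud ECS instances and Container Service. It lets you manage and access data just as you would in the Hadoop Distributed File System, and it provides high-performance access to hot data. OSS is a massive, secure, low-cost, and highly reliable cloud storage service that offers multiple storage classes, such as Standard and Archive. By migrating data in both directions between the two services, you can tier hot, warm, and cold data appropriately, which gives you high-performance access to hot data while keeping storage costs under control.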

Prerequisites

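- Activate Apsara File Storage for HDFS, and create a file system instance and a mount point. For details, see Quick Start.

- Set up a Hadoop cluster. Hadoop 2.7.2 or later is recommended. This topic uses Apache Hadoop 2.7.2.

- Install JDK on all nodes of the Hadoop cluster. JDK 1.8 or later is required.

- Configure the Apsara File Storage for HDFS instance in the Hadoop cluster. For details, see Mount a file system.

- Install the OSS client, JindoFS SDK, on the Hadoop cluster. For more information about JindoFS SDK, see JindoFS SDK.

- Download jindofs-sdk.jar and copy it to the Hadoop library directory.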

cp ./jindofs-sdk-*.jar ${HADOOP_HOME}/share/hadoop/hdfs/lib/jindofs-sdk.jar

- Create the JindoFS SDK configuration file on all nodes of the Hadoop cluster.

- Add the following environment variable to the /etc/profile file.

export B2SDK_CONF_DIR=/etc/jindofs-sdk-conf

- Create the OSS storage tool configuration file /etc/jindofs-sdk-conf/bigboot.cfg.

[bigboot]

logger.dir=/tmp/bigboot-log

[bigboot-client]

client.oss.retry=5

client.oss.upload.threads=4

client.oss.upload.queue.size=5

client.oss.upload.max.parallelism=16

client.oss.timeout.millisecond=30000

client.oss.connection.timeout.millisecond=3000

- Load the environment variable so that it takes effect.

source /etc/profile

- Use the OSS client in the Hadoop cluster.

hadoop fs -ls oss://<accessKeyId>:<accessKeySecret>@<bucket-name>.<endpoint>/
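
The configuration steps above have to be repeated on every node, so it can help to script them. The following is a minimal sketch, assuming bash. The helper names `write_bigboot_cfg` and `add_conf_env` are hypothetical and not part of JindoFS SDK. The configuration values are the ones listed above.

```shell
#!/usr/bin/env bash
# Sketch only: write_bigboot_cfg and add_conf_env are hypothetical helper names.

# Write bigboot.cfg with the settings from this topic into the given directory
# (defaults to /etc/jindofs-sdk-conf).
write_bigboot_cfg() {
  local conf_dir="${1:-/etc/jindofs-sdk-conf}"
  mkdir -p "$conf_dir" || return 1
  cat > "$conf_dir/bigboot.cfg" <<'EOF'
[bigboot]
logger.dir=/tmp/bigboot-log

[bigboot-client]
client.oss.retry=5
client.oss.upload.threads=4
client.oss.upload.queue.size=5
client.oss.upload.max.parallelism=16
client.oss.timeout.millisecond=30000
client.oss.connection.timeout.millisecond=3000
EOF
}

# Append the B2SDK_CONF_DIR export to a profile file (defaults to /etc/profile),
# but only if the exact line is not already present.
add_conf_env() {
  local profile="${1:-/etc/profile}"
  local conf_dir="${2:-/etc/jindofs-sdk-conf}"
  local line="export B2SDK_CONF_DIR=$conf_dir"
  grep -qxF "$line" "$profile" 2>/dev/null || echo "$line" >> "$profile"
}
```

On a node, you would call `write_bigboot_cfg` and `add_conf_env` as root without arguments and then run `source /etc/profile`. Both functions accept alternative paths, which makes it possible to try them in a scratch directory first.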

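Migrate data from Apsara File Storage for HDFS to OSS

- Prepare test data. In this topic, 100 GB of test data in Apsara File Storage for HDFS is to be migrated.

- Start a Hadoop MapReduce job (DistCp) to migrate the test data to OSS.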

./hadoop-2.7.2/bin/hadoop distcp \
    dfs://f-xxxxxxxxxxx.cn-shanghai.dfs.aliyuncs.com:10290/dfs2oss/data/data_100g/ \
    oss://<accessKeyId>:<accessKeySecret>@<bucket-name>.<endpoint>/data_100g

The following table describes the parameters.

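| Parameter | Description |
| --- | --- |
| accessKeyId, accessKeySecret | The AccessKey pair used to access the OSS API. For how to obtain it, see Create an AccessKey. |
| bucket-name.endpoint | The OSS access domain name, which consists of the bucket name and the endpoint of the corresponding region. |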
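
The OSS URI in the command is assembled from these parameters. The following sketch shows one way to build it from environment variables so that the AccessKey pair does not have to be typed into each command. The function name `build_oss_uri` and the variable names `OSS_ACCESS_KEY_ID` and `OSS_ACCESS_KEY_SECRET` are assumptions made for this example and are not defined by OSS or JindoFS SDK.

```shell
#!/usr/bin/env bash
# Sketch only: build_oss_uri and the OSS_ACCESS_KEY_* variable names are hypothetical.

# Print oss://<accessKeyId>:<accessKeySecret>@<bucket-name>.<endpoint>/<path>
# Arguments: bucket name, endpoint, object path (a leading slash is optional).
build_oss_uri() {
  local bucket="$1" endpoint="$2" path="${3#/}"
  if [ -z "${OSS_ACCESS_KEY_ID:-}" ] || [ -z "${OSS_ACCESS_KEY_SECRET:-}" ]; then
    echo "OSS_ACCESS_KEY_ID and OSS_ACCESS_KEY_SECRET must be set" >&2
    return 1
  fi
  printf 'oss://%s:%s@%s.%s/%s\n' \
    "$OSS_ACCESS_KEY_ID" "$OSS_ACCESS_KEY_SECRET" "$bucket" "$endpoint" "$path"
}
```

With both variables exported, the destination argument of the DistCp command could then be written as `"$(build_oss_uri <bucket-name> <endpoint> data_100g)"`.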

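- After the job is complete, check the migration result.

If the output contains information similar to the following, the migration succeeded.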

20/07/27 14:10:09 INFO mapreduce.Job: Job job_1595829170826_0002 completed successfully

20/07/27 14:10:09 INFO mapreduce.Job: Counters: 38

File System Counters

DFS: Number of bytes read=107374209810

DFS: Number of bytes written=0

DFS: Number of read operations=427

DFS: Number of large read operations=0

DFS: Number of write operations=42

FILE: Number of bytes read=0

FILE: Number of bytes written=2549873

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

OSS: Number of bytes read=0

OSS: Number of bytes written=215586406400

OSS: Number of read operations=0

OSS: Number of large read operations=0

OSS: Number of write operations=13209500

Job Counters

Launched map tasks=21

Other local map tasks=21

Total time spent by all maps in occupied slots (ms)=7459391

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=7459391

Total vcore-milliseconds taken by all map tasks=7459391

Total megabyte-milliseconds taken by all map tasks=7638416384

Map-Reduce Framework

Map input records=101

Map output records=0

Input split bytes=2814

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=12483

CPU time spent (ms)=912380

Physical memory (bytes) snapshot=15281192960

Virtual memory (bytes) snapshot=79722381312

Total committed heap usage (bytes)=8254390272

File Input Format Counters

Bytes Read=24596

File Output Format Counters

Bytes Written=0

org.apache.hadoop.tools.mapred.CopyMapper$Counter

BYTESCOPIED=107374182400

BYTESEXPECTED=107374182400

COPY=101

20/07/27 14:10:09 INFO common.AbstractJindoFileSystem: Read total statistics: oss read average -1 us, cache read average -1 us, read oss percent 0%

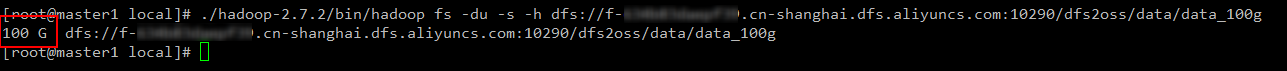

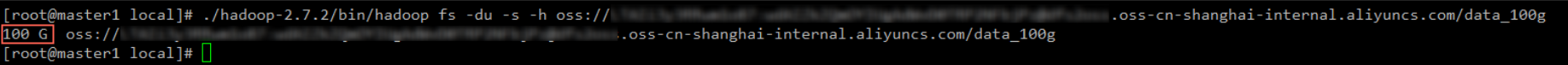

- Verify the migration result.

Check the size of the test data migrated to OSS.

[root@master1 local]# ./hadoop-2.7.2/bin/hadoop fs -du -s -h oss://<accessKeyId>:<accessKeySecret>@<bucket-name>.oss-cn-shanghai-internal.aliyuncs.com/data_100g

100 G oss://<accessKeyId>:<accessKeySecret>@<bucket-name>.<endpoint>/data_100g
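
Besides reading the job output by eye, you can compare the BYTESCOPIED and BYTESEXPECTED counters that DistCp prints. The following is a minimal sketch. It assumes that the job output was saved to a file, for example by appending `2>&1 | tee distcp.log` to the DistCp command, and the helper name `check_distcp_counters` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: check_distcp_counters is a hypothetical helper name.

# Read the BYTESCOPIED and BYTESEXPECTED counters from a saved DistCp log
# and succeed only if both are present and equal.
check_distcp_counters() {
  local log="$1" copied expected
  copied=$(sed -n 's/^[[:space:]]*BYTESCOPIED=\([0-9][0-9]*\).*/\1/p' "$log" | tail -n 1)
  expected=$(sed -n 's/^[[:space:]]*BYTESEXPECTED=\([0-9][0-9]*\).*/\1/p' "$log" | tail -n 1)
  if [ -n "$copied" ] && [ "$copied" = "$expected" ]; then
    echo "OK: copied $copied of $expected bytes"
  else
    echo "MISMATCH: copied=${copied:-?} expected=${expected:-?}" >&2
    return 1
  fi
}
```

For the output shown in this topic, both counters are 107374182400, so `check_distcp_counters distcp.log` would report a match.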

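Migrate data from OSS to Apsara File Storage for HDFS

- Prepare test data. In this topic, 100 GB of test data in OSS is to be migrated.

- Start a Hadoop MapReduce job (DistCp) to migrate the test data to Apsara File Storage for HDFS.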

./hadoop-2.7.2/bin/hadoop distcp \
    oss://<accessKeyId>:<accessKeySecret>@<bucket-name>.<endpoint>/data_100g \
    dfs://f-xxxxxxxxxxx.cn-shanghai.dfs.aliyuncs.com:10290/oss2dfs/data/data_100g/

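- After the job is complete, check the migration result.

If the output contains information similar to the following, the migration succeeded.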

20/07/27 14:51:38 INFO mapreduce.Job: Job job_1595829170826_0003 completed successfully

20/07/27 14:51:38 INFO mapreduce.Job: Counters: 38

File System Counters

DFS: Number of bytes read=30800

DFS: Number of bytes written=107374182400

DFS: Number of read operations=761

DFS: Number of large read operations=0

DFS: Number of write operations=241

FILE: Number of bytes read=0

FILE: Number of bytes written=2427170

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

OSS: Number of bytes read=0

OSS: Number of bytes written=0

OSS: Number of read operations=0

OSS: Number of large read operations=0

OSS: Number of write operations=0

Job Counters

Launched map tasks=20

Other local map tasks=20

Total time spent by all maps in occupied slots (ms)=3384423

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=3384423

Total vcore-milliseconds taken by all map tasks=3384423

Total megabyte-milliseconds taken by all map tasks=3465649152

Map-Reduce Framework

Map input records=101

Map output records=0

Input split bytes=2660

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=22358

CPU time spent (ms)=1879870

Physical memory (bytes) snapshot=14572785664

Virtual memory (bytes) snapshot=82449666048

Total committed heap usage (bytes)=12528386048

File Input Format Counters

Bytes Read=28140

File Output Format Counters

Bytes Written=0

org.apache.hadoop.tools.mapred.CopyMapper$Counter

BYTESCOPIED=107374182400

BYTESEXPECTED=107374182400

COPY=101

20/07/27 14:51:38 INFO common.AbstractJindoFileSystem: Read total statistics: oss read average -1 us, cache read average -1 us, read oss percent 0%

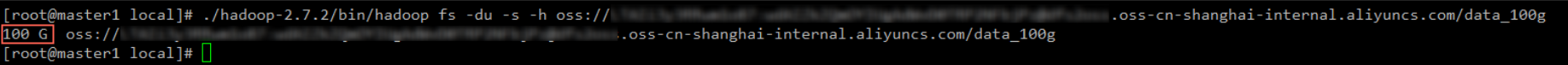

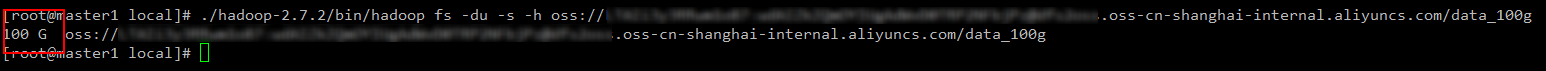

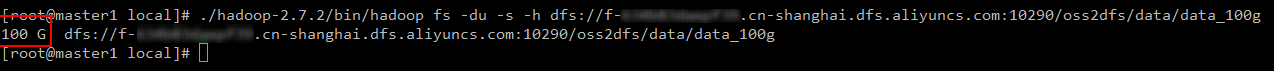

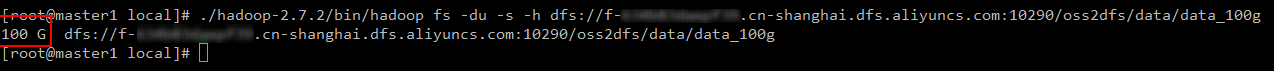

- Verify the migration result.

Check the size of the test data migrated to Apsara File Storage for HDFS.

[root@master1 local]# ./hadoop-2.7.2/bin/hadoop fs -du -s -h dfs://f-xxxxxxxxxxx.cn-shanghai.dfs.aliyuncs.com:10290/oss2dfs/data/data_100g

100 G dfs://f-xxxxxxxxxxx.cn-shanghai.dfs.aliyuncs.com:10290/oss2dfs/data/data_100g
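
The `-h` option rounds sizes for display, so two directories that both show 100 G are not necessarily identical in size. As a rough cross-check, you can compare the exact byte counts of the source and destination. The following sketch assumes that `hadoop` is on the PATH, or that the assumed variable `HADOOP_BIN` points to the binary. The helper names `dir_bytes` and `same_size` are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: dir_bytes, same_size, and HADOOP_BIN are hypothetical names.

# Print the total size in bytes of a path (first column of `hadoop fs -du -s`).
dir_bytes() {
  "${HADOOP_BIN:-hadoop}" fs -du -s "$1" | awk '{print $1; exit}'
}

# Succeed only if both paths report the same non-empty byte count.
same_size() {
  local src dst
  src=$(dir_bytes "$1")
  dst=$(dir_bytes "$2")
  [ -n "$src" ] && [ "$src" = "$dst" ]
}
```

For example, `same_size <source-uri> <destination-uri> && echo "sizes match"` prints a message only when both sides report the same number of bytes. Equal sizes do not prove that the contents are identical.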

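FAQ

If a file is being written to during migration, will the most recently written data be missed?

Hadoop-compatible file systems provide single-writer, multiple-reader concurrency semantics: at any given moment, a file can have one writer and multiple readers. Take migration from Apsara File Storage for HDFS to OSS as an example. The migration job opens file F in Apsara File Storage for HDFS, determines the length L of F from the current system state, and migrates L bytes to OSS. If a concurrent writer appends data during the migration, the length of F grows beyond L, but the migration job is unaware of the newly written data. Therefore, avoid writing to files while they are being migrated.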
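
The effect described above can be imitated with ordinary local files. The following sketch is a local simulation only and does not show how DistCp is implemented. The helper name `copy_first_bytes` is hypothetical. The length L is fixed first, a writer then appends to F, and the copy still contains only the first L bytes.

```shell
#!/usr/bin/env bash
# Local-file simulation only; copy_first_bytes is a hypothetical helper name.

# Copy exactly the first <len> bytes of <src> to <dst>.
copy_first_bytes() {
  local src="$1" dst="$2" len="$3"
  head -c "$len" "$src" > "$dst"
}

demo_dir=$(mktemp -d)
printf 'AAAAA' > "$demo_dir/F"               # file F, 5 bytes
L=$(wc -c < "$demo_dir/F" | tr -d ' ')       # the length L is fixed at this point
printf 'BBB' >> "$demo_dir/F"                # a concurrent writer appends 3 bytes
copy_first_bytes "$demo_dir/F" "$demo_dir/F.copy" "$L"

src_bytes=$(wc -c < "$demo_dir/F" | tr -d ' ')
copy_bytes=$(wc -c < "$demo_dir/F.copy" | tr -d ' ')
echo "source: $src_bytes bytes, copy: $copy_bytes bytes"
# prints "source: 8 bytes, copy: 5 bytes"
```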